biopipen.ns.tcr

Tools to analyze single-cell TCR sequencing data

ImmunarchLoading(Proc) — Immuarch - Loading data</>ImmunarchFilter(Proc) — Immunarch - Filter data</>Immunarch(Proc) — Exploration of Single-cell and Bulk T-cell/Antibody Immune Repertoires</>SampleDiversity(Proc) — Sample diversity and rarefaction analysis</>CloneResidency(Proc) — Identification of clone residency</>Immunarch2VDJtools(Proc) — Convert immuarch format into VDJtools input formats.</>ImmunarchSplitIdents(Proc) — Split the data into multiple immunarch datasets by Idents from Seurat</>VJUsage(Proc) — Circos-style V-J usage plot displaying the frequency ofvarious V-J junctions using vdjtools. </>Attach2Seurat(Proc) — Attach the clonal information to a Seurat object as metadata</>CDR3Clustering(Proc) — Cluster the TCR/BCR clones by their CDR3 sequences</>TCRClusterStats(Proc) — Statistics of TCR clusters, generated byTCRClustering.</>CloneSizeQQPlot(Proc) — QQ plot of the clone sizes</>CDR3AAPhyschem(Proc) — CDR3 AA physicochemical feature analysis</>TESSA(Proc) — Tessa is a Bayesian model to integrate T cell receptor (TCR) sequenceprofiling with transcriptomes of T cells. </>TCRDock(Proc) — Using TCRDock to predict the structure of MHC-peptide-TCR complexes</>ScRepLoading(Proc) — Load the single cell TCR/BCR data into ascRepertoirecompatible object</>ScRepCombiningExpression(Proc) — Combine the scTCR/BCR data with the expression data</>ClonalStats(Proc) — Visualize the clonal information.</>

biopipen.ns.tcr.ImmunarchLoading(*args, **kwds) → Proc

Immuarch - Loading data

Load the raw data into immunarch object,

using immunarch::repLoad().

For the data path specified at TCRData in the input file, we will first find

filtered_contig_annotations.csv and filtered_config_annotations.csv.gz in the

path. If neighter of them exists, we will find all_contig_annotations.csv and

all_contig_annotations.csv.gz in the path and a warning will be raised

(You can find it at ./.pipen/<pipeline-name>/ImmunarchLoading/0/job.stderr).

If none of the files exists, an error will be raised.

This process will also generate a text file with the information for each cell.

The file will be saved at

./.pipen/<pipeline-name>/ImmunarchLoading/0/output/<prefix>.tcr.txt.

The file can be used by the SeuratMetadataMutater process to integrate the

TCR-seq data into the Seurat object for further integrative analysis.

envs.metacols can be used to specify the columns to be exported to the text file.

cache— Should we detect whether the jobs are cached?desc— The description of the process. Will use the summary fromthe docstring by default.dirsig— When checking the signature for caching, whether should we walkthrough the content of the directory? This is sometimes time-consuming if the directory is big.envs— The arguments that are job-independent, useful for common optionsacross jobs.envs_depth— How deep to update the envs when subclassed.error_strategy— How to deal with the errors- - retry, ignore, halt

- - halt to halt the whole pipeline, no submitting new jobs

- - terminate to just terminate the job itself

export— When True, the results will be exported to<pipeline.outdir>Defaults to None, meaning only end processes will export. You can set it to True/False to enable or disable exporting for processesforks— How many jobs to run simultaneously?input— The keys for the input channelinput_data— The input data (will be computed for dependent processes)lang— The language for the script to run. Should be the path to theinterpreter iflangis not in$PATH.name— The name of the process. Will use the class name by default.nexts— Computed fromrequiresto build the process relationshipsnum_retries— How many times to retry to jobs once error occursorder— The execution order for this process. The bigger the numberis, the later the process will be executed. Default: 0. Note that the dependent processes will always be executed first. This doesn't work for start processes either, whose orders are determined byPipen.set_starts()output— The output keys for the output channel(the data will be computed)output_data— The output data (to pass to the next processes)plugin_opts— Options for process-level pluginsrequires— The dependency processesscheduler— The scheduler to run the jobsscheduler_opts— The options for the schedulerscript— The script template for the processsubmission_batch— How many jobs to be submited simultaneously.The program entrance for some schedulers may take too much resources when submitting a job or checking the job status. So we may use a smaller number here to limit the simultaneous submissions.template— Define the template engine to use.This could be either a template engine or a dict with keyengineindicating the template engine and the rest the arguments passed to the constructor of thepipen.template.Templateobject. The template engine could be either the name of the engine, currently jinja2 and liquidpy are supported, or a subclass ofpipen.template.Template. You can subclasspipen.template.Templateto use your own template engine.

metafile— The meta data of the samplesA tab-delimited file Two columns are required:- *

Sampleto specify the sample names. - *

TCRDatato assign the path of the data to the samples,

filtered_contig_annotations.csv, which doesn't have any name information.- *

metatxt— The meta data at cell level, which can be used to attach to the Seurat objectrdsfile— The RDS file with the data and metadata, which can be processed byotherimmunarchfunctions.

extracols(list) — The extra columns to be exported to the text file.You can refer to the immunarch documentation to get a sense for the full list of the columns. The columns may vary depending on the data source. The columns fromimmdata$metaand some core columns, includingBarcode,CDR3.aa,Clones,Proportion,V.name,J.name, andD.namewill be exported by default. You can use this option to specify the extra columns to be exported.mode— Either "single" for single chain data or "paired" forpaired chain data. Forsingle, only TRB chain will be kept atimmdata$data, information for other chains will be saved atimmdata$traandimmdata$multi.prefix— The prefix to the barcodes. You can use placeholder like{Sample}_to use the meta data from theimmunarchobject. The prefixed barcodes will be saved inout.metatxt. Theimmunarchobject keeps the original barcodes, but the prefix is saved atimmdata$prefix.

/// Note This option is useful because the barcodes for the cells from scRNA-seq data are usually prefixed with the sample name, for example,Sample1_AAACCTGAGAAGGCTA-1. However, the barcodes for the cells from scTCR-seq data are usually not prefixed with the sample name, for example,AAACCTGAGAAGGCTA-1. So we need to add the prefix to the barcodes for the scTCR-seq data, and it is easier for us to integrate the data from different sources later. ///tmpdir— The temporary directory to link all data files.Immunarchscans a directory to find the data files. If the data files are not in the same directory, we can link them to a temporary directory and pass the temporary directory toImmunarch. This option is useful when the data files are in different directories.

__init_subclass__()— Do the requirements inferring since we need them to build up theprocess relationship </>from_proc(proc,name,desc,envs,envs_depth,cache,export,error_strategy,num_retries,forks,input_data,order,plugin_opts,requires,scheduler,scheduler_opts,submission_batch)(Type) — Create a subclass of Proc using another Proc subclass or Proc itself</>gc()— GC process for the process to save memory after it's done</>log(level,msg,*args,logger)— Log message for the process</>run()— Init all other properties and jobs</>

pipen.proc.ProcMeta(name, bases, namespace, **kwargs)

Meta class for Proc

__call__(cls,*args,**kwds)(Proc) — Make sure Proc subclasses are singletons</>__instancecheck__(cls,instance)— Override for isinstance(instance, cls).</>__repr__(cls)(str) — Representation for the Proc subclasses</>__subclasscheck__(cls,subclass)— Override for issubclass(subclass, cls).</>register(cls,subclass)— Register a virtual subclass of an ABC.</>

register(cls, subclass)Register a virtual subclass of an ABC.

Returns the subclass, to allow usage as a class decorator.

__instancecheck__(cls, instance)Override for isinstance(instance, cls).

__subclasscheck__(cls, subclass)Override for issubclass(subclass, cls).

__repr__(cls) → strRepresentation for the Proc subclasses

__call__(cls, *args, **kwds)Make sure Proc subclasses are singletons

*args(Any) — and**kwds(Any) — Arguments for the constructor

The Proc instance

from_proc(proc, name=None, desc=None, envs=None, envs_depth=None, cache=None, export=None, error_strategy=None, num_retries=None, forks=None, input_data=None, order=None, plugin_opts=None, requires=None, scheduler=None, scheduler_opts=None, submission_batch=None)

Create a subclass of Proc using another Proc subclass or Proc itself

proc(Type) — The Proc subclassname(str, optional) — The new name of the processdesc(str, optional) — The new description of the processenvs(Mapping, optional) — The arguments of the process, will overwrite parent oneThe items that are specified will be inheritedenvs_depth(int, optional) — How deep to update the envs when subclassed.cache(bool, optional) — Whether we should check the cache for the jobsexport(bool, optional) — When True, the results will be exported to<pipeline.outdir>Defaults to None, meaning only end processes will export. You can set it to True/False to enable or disable exporting for processeserror_strategy(str, optional) — How to deal with the errors- - retry, ignore, halt

- - halt to halt the whole pipeline, no submitting new jobs

- - terminate to just terminate the job itself

num_retries(int, optional) — How many times to retry to jobs once error occursforks(int, optional) — New forks for the new processinput_data(Any, optional) — The input data for the process. Only when this processis a start processorder(int, optional) — The order to execute the new processplugin_opts(Mapping, optional) — The new plugin options, unspecified items will beinherited.requires(Sequence, optional) — The required processes for the new processscheduler(str, optional) — The new shedular to run the new processscheduler_opts(Mapping, optional) — The new scheduler options, unspecified items willbe inherited.submission_batch(int, optional) — How many jobs to be submited simultaneously.

The new process class

__init_subclass__()

Do the requirements inferring since we need them to build up theprocess relationship

run()

Init all other properties and jobs

gc()

GC process for the process to save memory after it's done

log(level, msg, *args, logger=<LoggerAdapter pipen.core (WARNING)>)

Log message for the process

level(int | str) — The log level of the recordmsg(str) — The message to log*args— The arguments to format the messagelogger(LoggerAdapter, optional) — The logging logger

biopipen.ns.tcr.ImmunarchFilter(*args, **kwds) → Proc

Immunarch - Filter data

See https://immunarch.com/articles/web_only/repFilter_v3.html

cache— Should we detect whether the jobs are cached?desc— The description of the process. Will use the summary fromthe docstring by default.dirsig— When checking the signature for caching, whether should we walkthrough the content of the directory? This is sometimes time-consuming if the directory is big.envs— The arguments that are job-independent, useful for common optionsacross jobs.envs_depth— How deep to update the envs when subclassed.error_strategy— How to deal with the errors- - retry, ignore, halt

- - halt to halt the whole pipeline, no submitting new jobs

- - terminate to just terminate the job itself

export— When True, the results will be exported to<pipeline.outdir>Defaults to None, meaning only end processes will export. You can set it to True/False to enable or disable exporting for processesforks— How many jobs to run simultaneously?input— The keys for the input channelinput_data— The input data (will be computed for dependent processes)lang— The language for the script to run. Should be the path to theinterpreter iflangis not in$PATH.name— The name of the process. Will use the class name by default.nexts— Computed fromrequiresto build the process relationshipsnum_retries— How many times to retry to jobs once error occursorder— The execution order for this process. The bigger the numberis, the later the process will be executed. Default: 0. Note that the dependent processes will always be executed first. This doesn't work for start processes either, whose orders are determined byPipen.set_starts()output— The output keys for the output channel(the data will be computed)output_data— The output data (to pass to the next processes)plugin_opts— Options for process-level pluginsrequires— The dependency processesscheduler— The scheduler to run the jobsscheduler_opts— The options for the schedulerscript— The script template for the processsubmission_batch— How many jobs to be submited simultaneously.The program entrance for some schedulers may take too much resources when submitting a job or checking the job status. So we may use a smaller number here to limit the simultaneous submissions.template— Define the template engine to use.This could be either a template engine or a dict with keyengineindicating the template engine and the rest the arguments passed to the constructor of thepipen.template.Templateobject. The template engine could be either the name of the engine, currently jinja2 and liquidpy are supported, or a subclass ofpipen.template.Template. You can subclasspipen.template.Templateto use your own template engine.

filterfile— A config file in TOML.A dict of configurations with keys as the names of the group and values dicts with following keys. Seeenvs.filtersimmdata— The data loaded byimmunarch::repLoad()

groupfile— Also a group file with rownames as cells and column names aseach of the keys inin.filterfileorenvs.filters. The values will be subkeys of the dicts inin.filterfileorenvs.filters.outfile— The filteredimmdata

filters— The filters to filter the dataYou can have multiple cases (groups), the names will be the keys of this dict, values are also dicts with keys the methods supported byimmunarch::repFilter(). There is one more methodby.countsupported to filter the count matrix. Forby.meta,by.repertoire,by.rep,by.clonotypeorby.colthe values will be passed to.queryofrepFilter(). You can also use the helper functions provided byimmunarch, includingmorethan,lessthan,include,excludeandinterval. If these functions are not used,include(value)will be used by default. Forby.count, the value offilterwill be passed todplyr::filter()to filter the count matrix. You can also specifyORDERto define the filtration order, which defaults to 0, higherORDERgets later executed. Each subkey/subgroup must be exclusive For example:

{ "name": "BM_Post_Clones", "filters" { "Top_20": { "SAVE": True, # Save the filtered data to immdata "by.meta": {"Source": "BM", "Status": "Post"}, "by.count": { "ORDER": 1, "filter": "TOTAL %%in%% TOTAL[1:20]" } }, "Rest": { "by.meta": {"Source": "BM", "Status": "Post"}, "by.count": { "ORDER": 1, "filter": "!TOTAL %%in%% TOTAL[1:20]" } } }

metacols— The extra columns to be exported to the group file.prefix— The prefix will be added to the cells in the output filePlaceholders like{Sample}_can be used to from the meta data

__init_subclass__()— Do the requirements inferring since we need them to build up theprocess relationship </>from_proc(proc,name,desc,envs,envs_depth,cache,export,error_strategy,num_retries,forks,input_data,order,plugin_opts,requires,scheduler,scheduler_opts,submission_batch)(Type) — Create a subclass of Proc using another Proc subclass or Proc itself</>gc()— GC process for the process to save memory after it's done</>log(level,msg,*args,logger)— Log message for the process</>run()— Init all other properties and jobs</>

pipen.proc.ProcMeta(name, bases, namespace, **kwargs)

Meta class for Proc

__call__(cls,*args,**kwds)(Proc) — Make sure Proc subclasses are singletons</>__instancecheck__(cls,instance)— Override for isinstance(instance, cls).</>__repr__(cls)(str) — Representation for the Proc subclasses</>__subclasscheck__(cls,subclass)— Override for issubclass(subclass, cls).</>register(cls,subclass)— Register a virtual subclass of an ABC.</>

register(cls, subclass)Register a virtual subclass of an ABC.

Returns the subclass, to allow usage as a class decorator.

__instancecheck__(cls, instance)Override for isinstance(instance, cls).

__subclasscheck__(cls, subclass)Override for issubclass(subclass, cls).

__repr__(cls) → strRepresentation for the Proc subclasses

__call__(cls, *args, **kwds)Make sure Proc subclasses are singletons

*args(Any) — and**kwds(Any) — Arguments for the constructor

The Proc instance

from_proc(proc, name=None, desc=None, envs=None, envs_depth=None, cache=None, export=None, error_strategy=None, num_retries=None, forks=None, input_data=None, order=None, plugin_opts=None, requires=None, scheduler=None, scheduler_opts=None, submission_batch=None)

Create a subclass of Proc using another Proc subclass or Proc itself

proc(Type) — The Proc subclassname(str, optional) — The new name of the processdesc(str, optional) — The new description of the processenvs(Mapping, optional) — The arguments of the process, will overwrite parent oneThe items that are specified will be inheritedenvs_depth(int, optional) — How deep to update the envs when subclassed.cache(bool, optional) — Whether we should check the cache for the jobsexport(bool, optional) — When True, the results will be exported to<pipeline.outdir>Defaults to None, meaning only end processes will export. You can set it to True/False to enable or disable exporting for processeserror_strategy(str, optional) — How to deal with the errors- - retry, ignore, halt

- - halt to halt the whole pipeline, no submitting new jobs

- - terminate to just terminate the job itself

num_retries(int, optional) — How many times to retry to jobs once error occursforks(int, optional) — New forks for the new processinput_data(Any, optional) — The input data for the process. Only when this processis a start processorder(int, optional) — The order to execute the new processplugin_opts(Mapping, optional) — The new plugin options, unspecified items will beinherited.requires(Sequence, optional) — The required processes for the new processscheduler(str, optional) — The new shedular to run the new processscheduler_opts(Mapping, optional) — The new scheduler options, unspecified items willbe inherited.submission_batch(int, optional) — How many jobs to be submited simultaneously.

The new process class

__init_subclass__()

Do the requirements inferring since we need them to build up theprocess relationship

run()

Init all other properties and jobs

gc()

GC process for the process to save memory after it's done

log(level, msg, *args, logger=<LoggerAdapter pipen.core (WARNING)>)

Log message for the process

level(int | str) — The log level of the recordmsg(str) — The message to log*args— The arguments to format the messagelogger(LoggerAdapter, optional) — The logging logger

biopipen.ns.tcr.Immunarch(*args, **kwds) → Proc

Exploration of Single-cell and Bulk T-cell/Antibody Immune Repertoires

See https://immunarch.com/articles/web_only/v3_basic_analysis.html

After ImmunarchLoading loads the raw data into an immunarch object,

this process wraps the functions from immunarch to do the following:

- Basic statistics, provided by

immunarch::repExplore, such as number of clones or distributions of lengths and counts. - The clonality of repertoires, provided by

immunarch::repClonality - The repertoire overlap, provided by

immunarch::repOverlap - The repertoire overlap, including different clustering procedures and PCA, provided by

immunarch::repOverlapAnalysis - The distributions of V or J genes, provided by

immunarch::geneUsage - The diversity of repertoires, provided by

immunarch::repDiversity - The dynamics of repertoires across time points/samples, provided by

immunarch::trackClonotypes - The spectratype of clonotypes, provided by

immunarch::spectratype - The distributions of kmers and sequence profiles, provided by

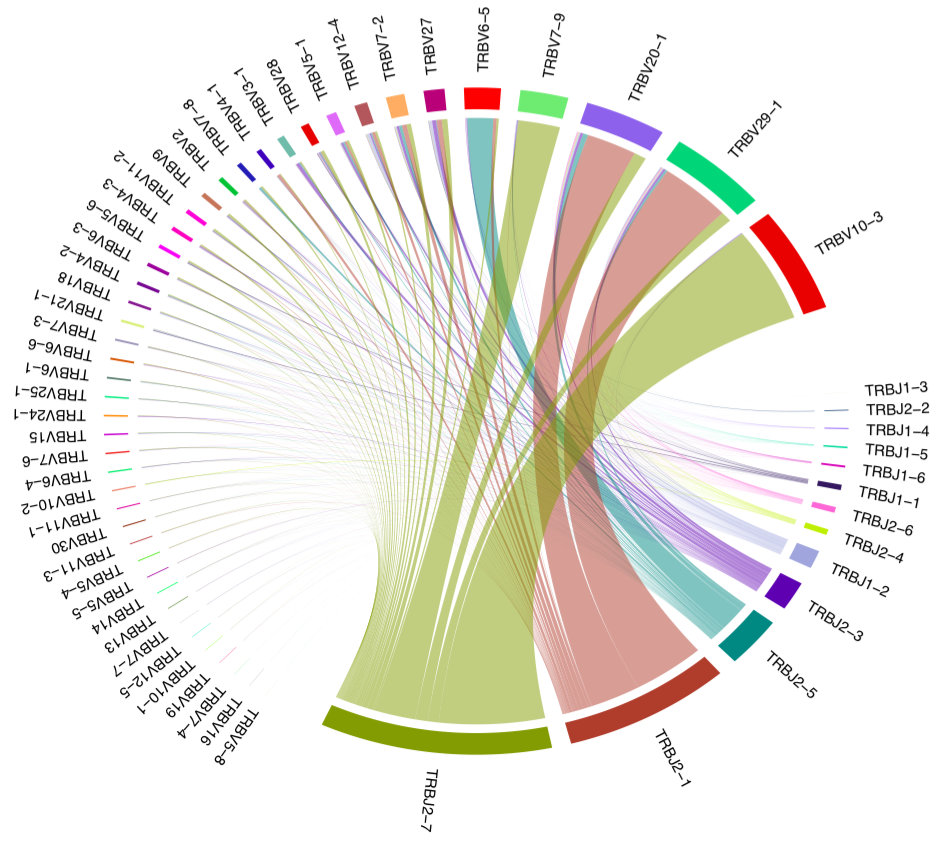

immunarch::getKmers - The V-J junction circos plots, implemented within the script of this process.

Environment Variable Design:

With different sets of arguments, a single function of the above can perform different tasks.

For example, repExplore can be used to get the statistics of the size of the repertoire,

the statistics of the length of the CDR3 region, or the statistics of the number of

the clonotypes. Other than that, you can also have different ways to visualize the results,

by passing different arguments to the immunarch::vis function.

For example, you can pass .by to vis to visualize the results of repExplore by different groups.

Before we explain each environment variable in details in the next section, we will give some examples here to show how the environment variables are organized in order for a single function to perform different tasks.

```toml

# Repertoire overlapping

[Immunarch.envs.overlaps]

# The method to calculate the overlap, passed to `repOverlap`

method = "public"

```

What if we want to calculate the overlap by different methods at the same time? We can use the following configuration:

```toml

[Immunarch.envs.overlaps.cases]

Public = { method = "public" }

Jaccard = { method = "jaccard" }

```

Then, the `repOverlap` function will be called twice, once with `method = "public"` and once with `method = "jaccard"`. We can also use different arguments to visualize the results. These arguments will be passed to the `vis` function:

```toml

[Immunarch.envs.overlaps.cases.Public]

method = "public"

vis_args = { "-plot": "heatmap2" }

[Immunarch.envs.overlaps.cases.Jaccard]

method = "jaccard"

vis_args = { "-plot": "heatmap2" }

```

`-plot` will be translated to `.plot` and then passed to `vis`.

If multiple cases share the same arguments, we can use the following configuration:

```toml

[Immunarch.envs.overlaps]

vis_args = { "-plot": "heatmap2" }

[Immunarch.envs.overlaps.cases]

Public = { method = "public" }

Jaccard = { method = "jaccard" }

```

For some results, there are futher analysis that can be performed. For example, for the repertoire overlap, we can perform clustering and PCA (see also <https://immunarch.com/articles/web_only/v4_overlap.html>):

```R

imm_ov1 <- repOverlap(immdata$data, .method = "public", .verbose = F)

repOverlapAnalysis(imm_ov1, "mds") %>% vis()

repOverlapAnalysis(imm_ov1, "tsne") %>% vis()

```

In such a case, we can use the following configuration:

```toml

[Immunarch.envs.overlaps]

method = "public"

[Immunarch.envs.overlaps.analyses.cases]

MDS = { "-method": "mds" }

TSNE = { "-method": "tsne" }

```

Then, the `repOverlapAnalysis` function will be called twice on the result generated by `repOverlap(immdata$data, .method = "public")`, once with `.method = "mds"` and once with `.method = "tsne"`. We can also use different arguments to visualize the results. These arguments will be passed to the `vis` function:

```toml

[Immunarch.envs.overlaps]

method = "public"

[Immunarch.envs.overlaps.analyses]

# See: <https://immunarch.com/reference/vis.immunr_hclust.html>

vis_args = { "-plot": "best" }

[Immunarch.envs.overlaps.analyses.cases]

MDS = { "-method": "mds" }

TSNE = { "-method": "tsne" }

```

Generally, you don't need to specify `cases` if you only have one case. A default case will be created for you. For multiple cases, the arguments at the same level as `cases` will be inherited by all cases.

cache— Should we detect whether the jobs are cached?desc— The description of the process. Will use the summary fromthe docstring by default.dirsig— When checking the signature for caching, whether should we walkthrough the content of the directory? This is sometimes time-consuming if the directory is big.envs— The arguments that are job-independent, useful for common optionsacross jobs.envs_depth— How deep to update the envs when subclassed.error_strategy— How to deal with the errors- - retry, ignore, halt

- - halt to halt the whole pipeline, no submitting new jobs

- - terminate to just terminate the job itself

export— When True, the results will be exported to<pipeline.outdir>Defaults to None, meaning only end processes will export. You can set it to True/False to enable or disable exporting for processesforks— How many jobs to run simultaneously?input— The keys for the input channelinput_data— The input data (will be computed for dependent processes)lang— The language for the script to run. Should be the path to theinterpreter iflangis not in$PATH.name— The name of the process. Will use the class name by default.nexts— Computed fromrequiresto build the process relationshipsnum_retries— How many times to retry to jobs once error occursorder— The execution order for this process. The bigger the numberis, the later the process will be executed. Default: 0. Note that the dependent processes will always be executed first. This doesn't work for start processes either, whose orders are determined byPipen.set_starts()output— The output keys for the output channel(the data will be computed)output_data— The output data (to pass to the next processes)plugin_opts— Options for process-level pluginsrequires— The dependency processesscheduler— The scheduler to run the jobsscheduler_opts— The options for the schedulerscript— The script template for the processsubmission_batch— How many jobs to be submited simultaneously.The program entrance for some schedulers may take too much resources when submitting a job or checking the job status. So we may use a smaller number here to limit the simultaneous submissions.template— Define the template engine to use.This could be either a template engine or a dict with keyengineindicating the template engine and the rest the arguments passed to the constructor of thepipen.template.Templateobject. The template engine could be either the name of the engine, currently jinja2 and liquidpy are supported, or a subclass ofpipen.template.Template. You can subclasspipen.template.Templateto use your own template engine.

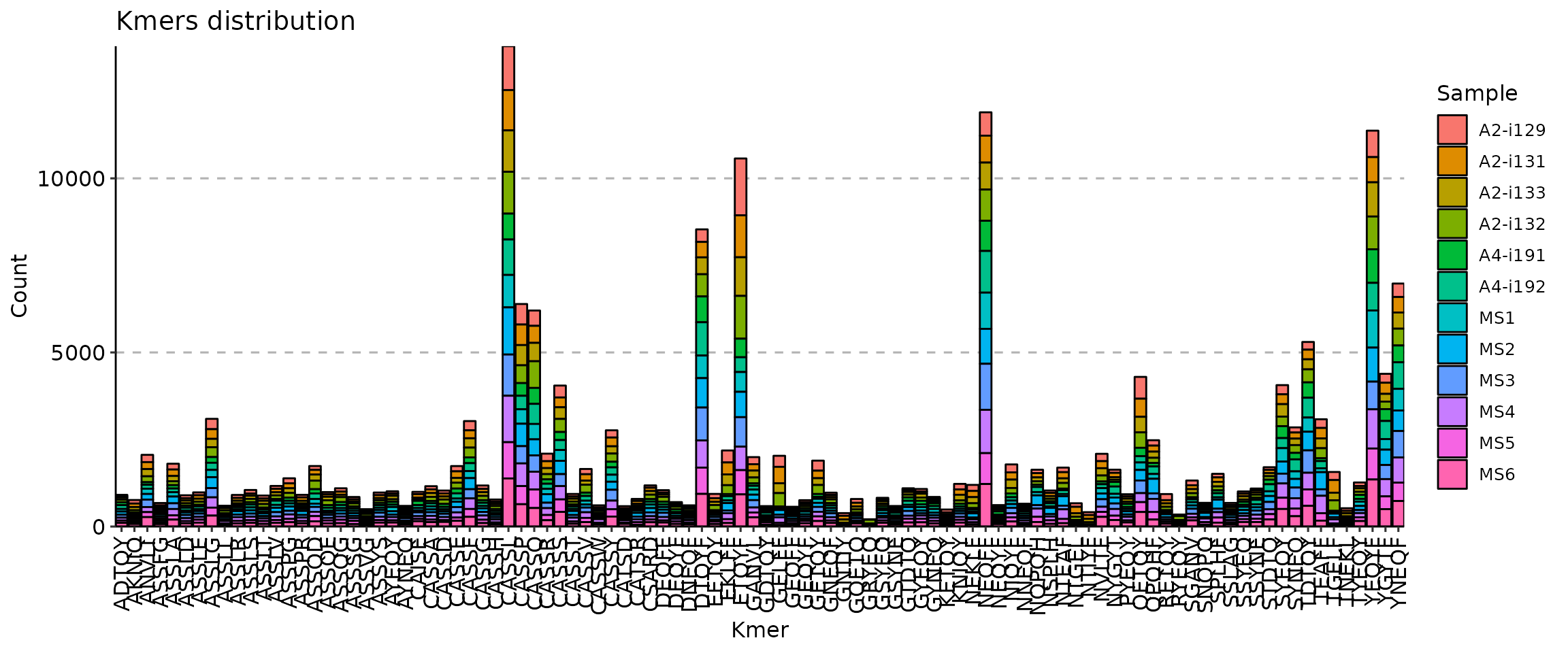

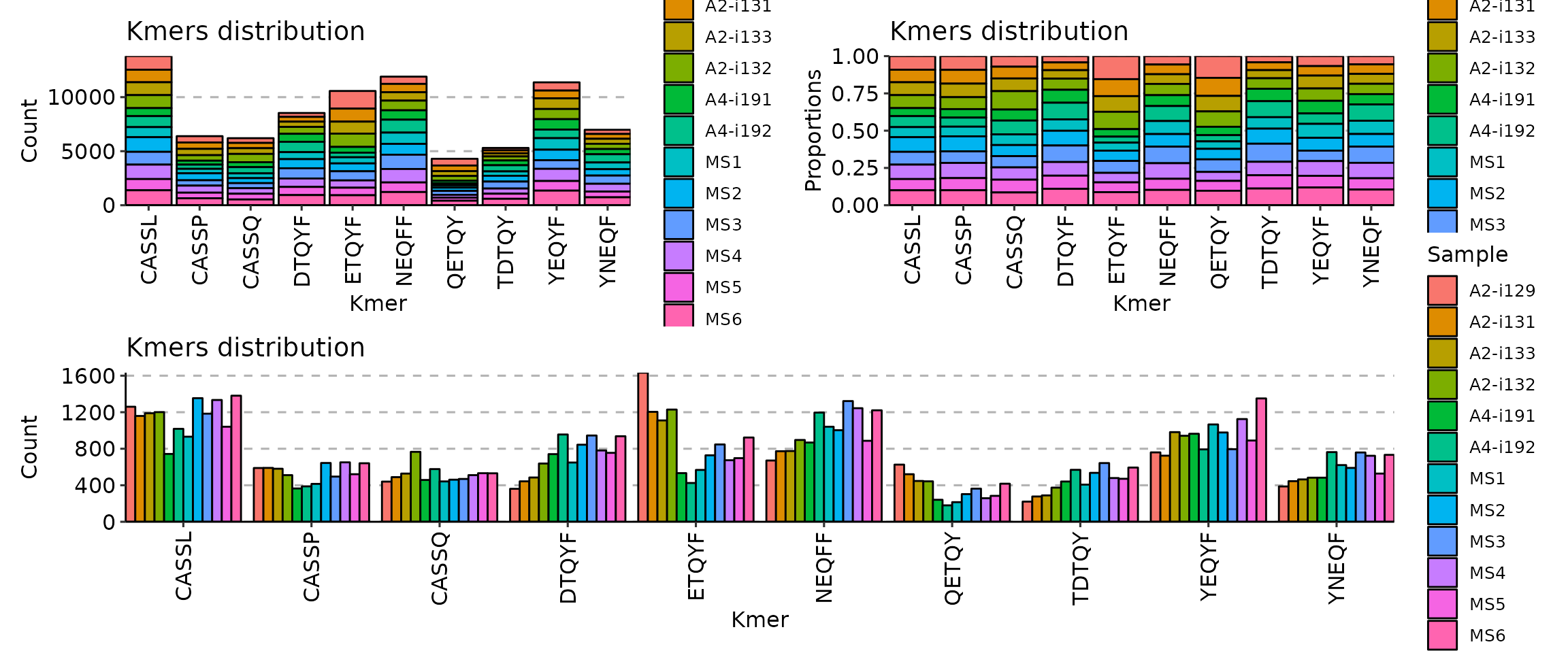

[Immunarch.envs.kmers]k = 5

[Immunarch.envs.kmers]

# Shared by cases

k = 5

[Immunarch.envs.kmers.cases]

Head5 = { head = 5, -position = "stack" }

Head10 = { head = 10, -position = "fill" }

Head30 = { head = 30, -position = "dodge" }

With motif profiling:

[Immunarch.envs.kmers]

k = 5

[Immnuarch.envs.kmers.profiles.cases]

TextPlot = { method = "self", vis_args = { "-plot": "text" } }

SeqPlot = { method = "self", vis_args = { "-plot": "seq" } }

immdata— The data loaded byimmunarch::repLoad()metafile— A cell-level metafile, where the first column must be the cell barcodesthat match the cell barcodes inimmdata. The other columns can be any metadata that you want to use for the analysis. The loaded metadata will be left-joined to the converted cell-level data fromimmdata. This can also be a Seurat object RDS file. If so, thesobj@meta.datawill be used as the metadata.

outdir— The output directory

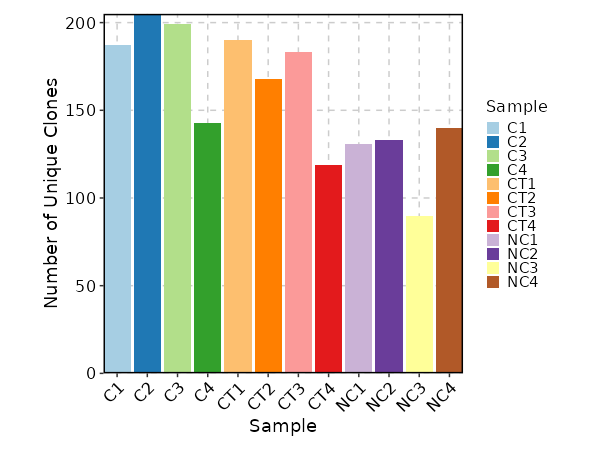

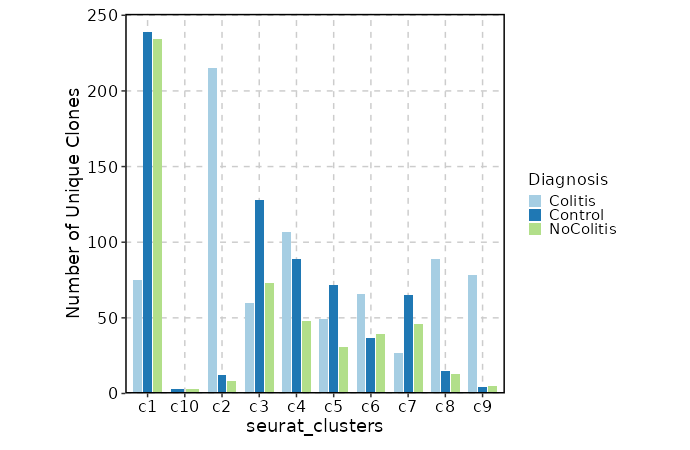

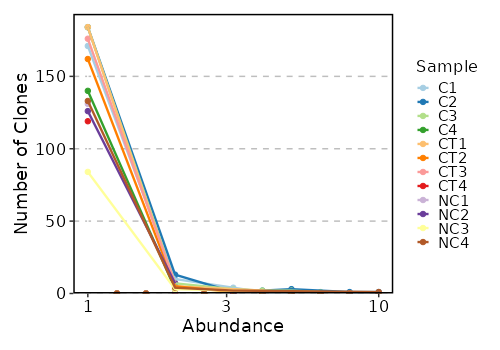

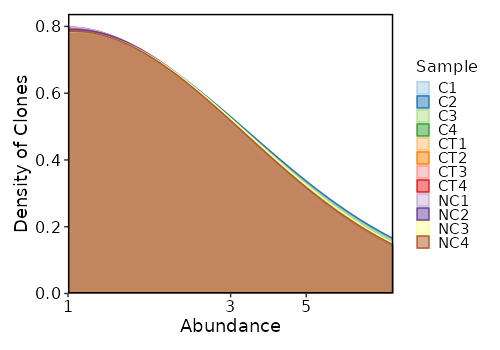

counts(ns) — Explore clonotype counts.- - by: Groupings when visualize clonotype counts, passed to the

.byargument ofvis(imm_count, .by = <values>).

Multiple columns should be separated by,. - - devpars (ns): The parameters for the plotting device.

- width (type=int): The width of the plot.

- height (type=int): The height of the plot.

- res (type=int): The resolution of the plot. - - subset: Subset the data before calculating the clonotype volumes.

The whole data will be expanded to cell level, and then subsetted.

Clone sizes will be re-calculated based on the subsetted data. - - cases (type=json;order=9): If you have multiple cases, you can use this argument to specify them.

The keys will be the names of the cases.

The values will be passed to the corresponding arguments above.

If any of these arguments are not specified, the values inenvs.countswill be used.

If NO cases are specified, the default case will be added, with the nameDEFAULTand the

values ofenvs.counts.by,envs.counts.devpars.

- - by: Groupings when visualize clonotype counts, passed to the

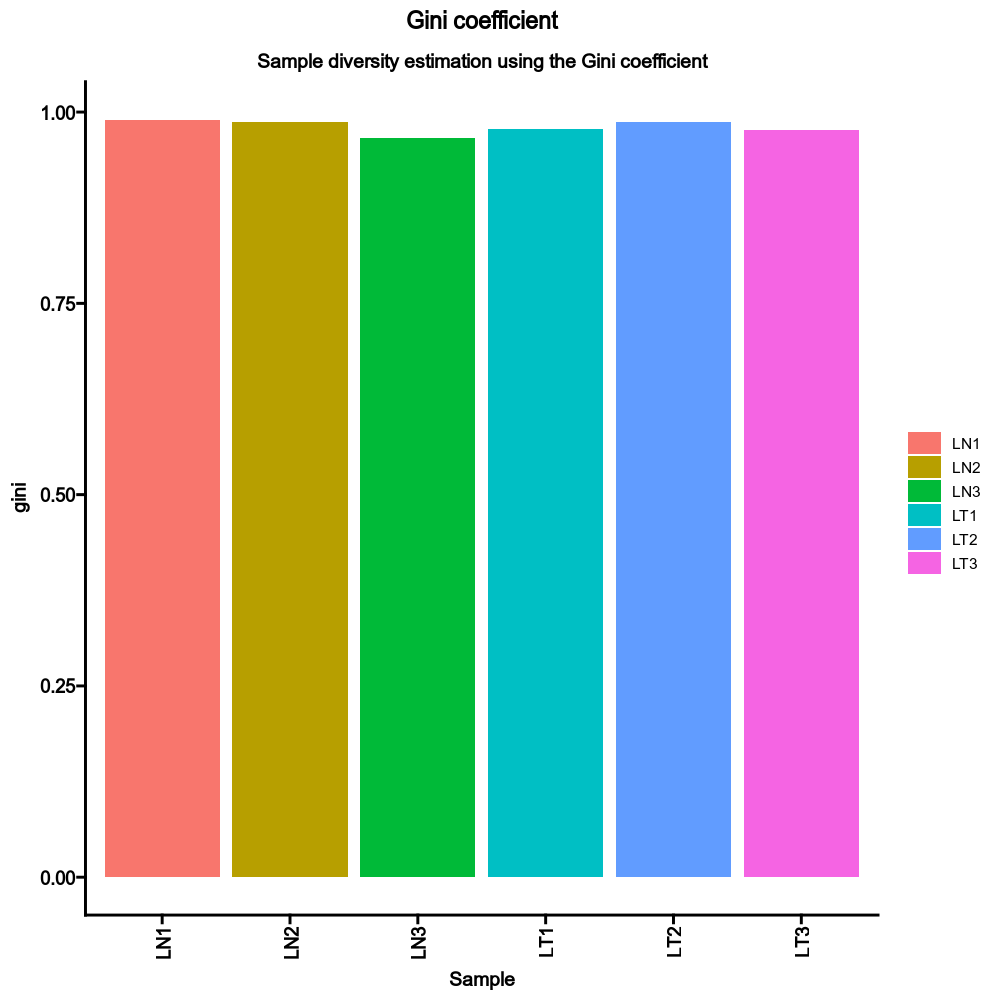

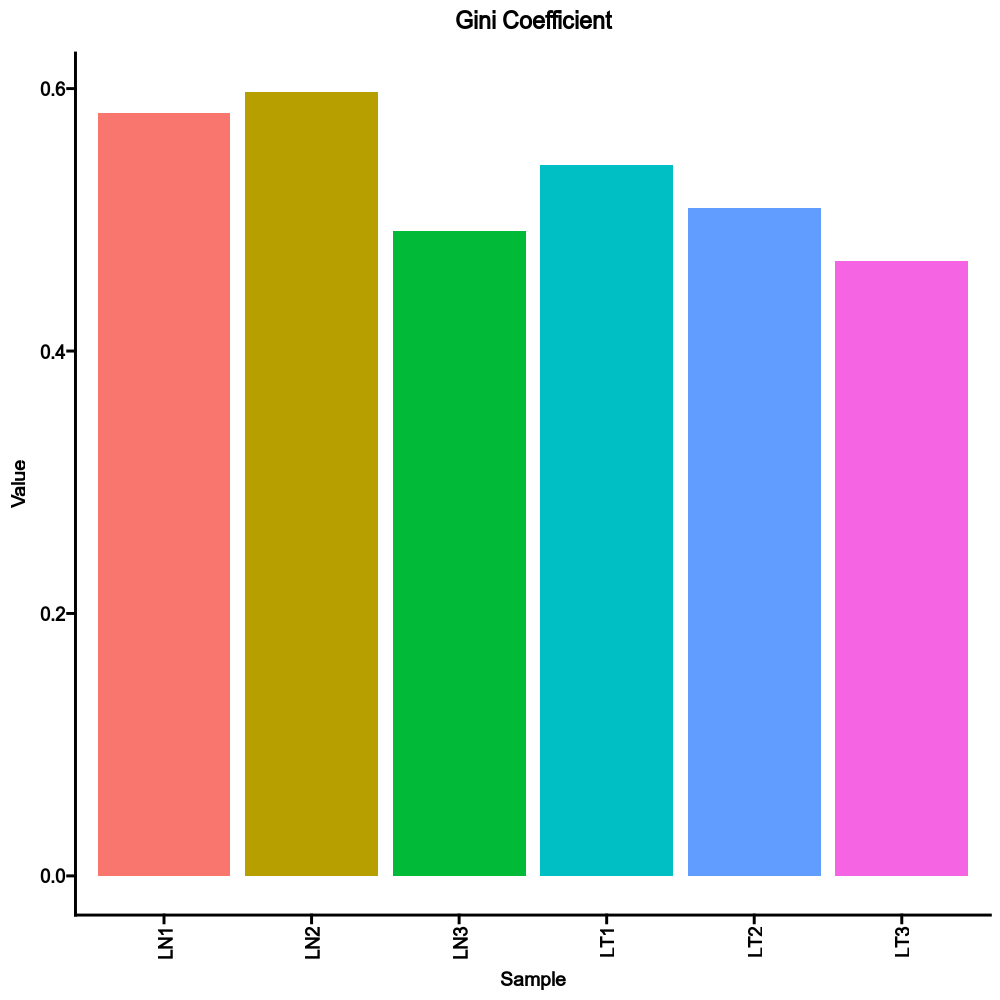

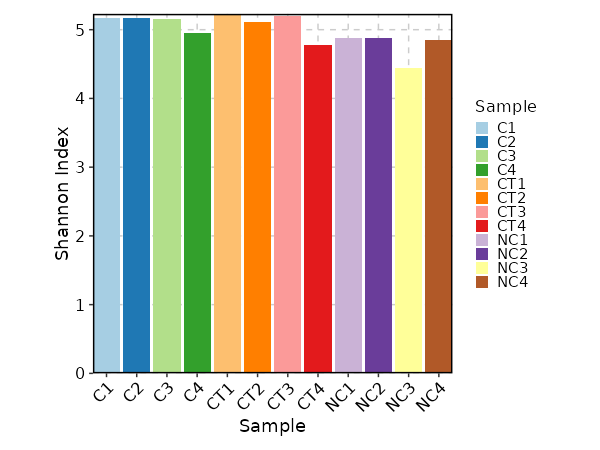

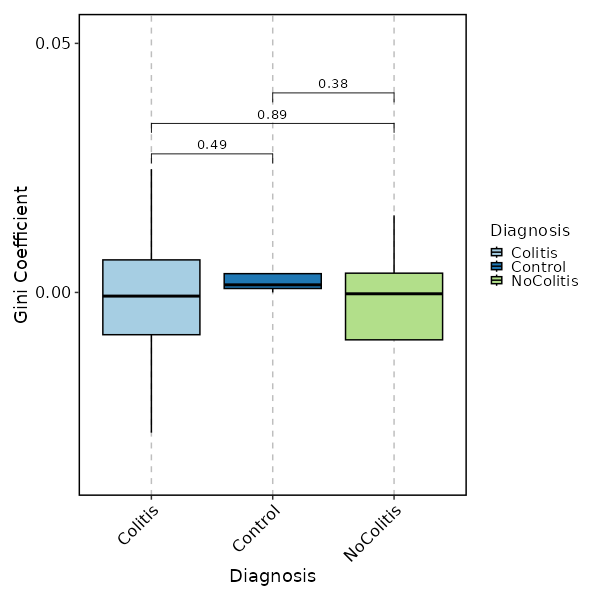

divs(ns) — Parameters to control the diversity analysis.- - method (choice): The method to calculate diversity.

- chao1: a nonparameteric asymptotic estimator of species richness.

(number of species in a population).

- hill: Hill numbers are a mathematically unified family of diversity indices.

(differing only by an exponent q).

- div: true diversity, or the effective number of types.

It refers to the number of equally abundant types needed for the average proportional abundance of the types to equal

that observed in the dataset of interest where all types may not be equally abundant.

- gini.simp: The Gini-Simpson index.

It is the probability of interspecific encounter, i.e., probability that two entities represent different types.

- inv.simp: Inverse Simpson index.

It is the effective number of types that is obtained when the weighted arithmetic mean is used to quantify

average proportional abundance of types in the dataset of interest.

- gini: The Gini coefficient.

It measures the inequality among values of a frequency distribution (for example levels of income).

A Gini coefficient of zero expresses perfect equality, where all values are the same (for example, where everyone has the same income).

A Gini coefficient of one (or 100 percents) expresses maximal inequality among values (for example where only one person has all the income).

- d50: The D50 index.

It is the number of types that are needed to cover 50%% of the total abundance.

- raref: Species richness from the results of sampling through extrapolation. - - by: The variables (column names) to group samples.

Multiple columns should be separated by,. - - plot_type (choice): The type of the plot, works when

byis specified.

Not working forraref.

- box: Boxplot

- bar: Barplot with error bars - - subset: Subset the data before calculating the clonotype volumes.

The whole data will be expanded to cell level, and then subsetted.

Clone sizes will be re-calculated based on the subsetted data. - - args (type=json): Other arguments for

repDiversity().

Do not include the preceding.and use-instead of.in the argument names.

For example,do-normwill be compiled to.do.norm.

See all arguments at

https://immunarch.com/reference/repDiversity.html. - - order (list): The order of the values in

byon the x-axis of the plots.

If not specified, the values will be used as-is. - - test (ns): Perform statistical tests between each pair of groups.

Does NOT work forraref.

- method (choice): The method to perform the test

- none: No test

- t.test: Welch's t-test

- wilcox.test: Wilcoxon rank sum test

- padjust (choice): The method to adjust p-values.

Defaults tonone.

- bonferroni: one-step correction

- holm: step-down method using Bonferroni adjustments

- hochberg: step-up method (independent)

- hommel: closed method based on Simes tests (non-negative)

- BH: Benjamini & Hochberg (non-negative)

- BY: Benjamini & Yekutieli (negative)

- fdr: Benjamini & Hochberg (non-negative)

- none: no correction. - - separate_by: A column name used to separate the samples into different plots.

- - split_by: A column name used to split the samples into different subplots.

Likeseparate_by, but the plots will be put in the same figure.

y-axis will be shared, even ifalign_yisFalseorymin/ymaxare not specified.

ncolwill be ignored. - - split_order: The order of the values in

split_byon the x-axis of the plots.

It can also be used forseparate_byto control the order of the plots.

Values can be separated by,. - - align_x (flag): Align the x-axis of multiple plots. Only works for

raref. - - align_y (flag): Align the y-axis of multiple plots.

- - ymin (type=float): The minimum value of the y-axis.

The minimum value of the y-axis for plots splitting byseparate_by.

align_yis forcedTruewhen bothyminandymaxare specified. - - ymax (type=float): The maximum value of the y-axis.

The maximum value of the y-axis for plots splitting byseparate_by.

align_yis forcedTruewhen bothyminandymaxare specified.

Works when bothyminandymaxare specified. - - log (flag): Indicate whether we should plot with log-transformed x-axis using

vis(.log = TRUE). Only works forraref. - - ncol (type=int): The number of columns of the plots.

- - devpars (ns): The parameters for the plotting device.

- width (type=int): The width of the device

- height (type=int): The height of the device

- res (type=int): The resolution of the device - - cases (type=json;order=9): If you have multiple cases, you can use this argument to specify them.

The keys will be used as the names of the cases.

The values will be passed to the corresponding arguments above.

If NO cases are specified, the default case will be added, with the name ofenvs.div.method.

The values specified inenvs.divwill be used as the defaults for the cases here.

- - method (choice): The method to calculate diversity.

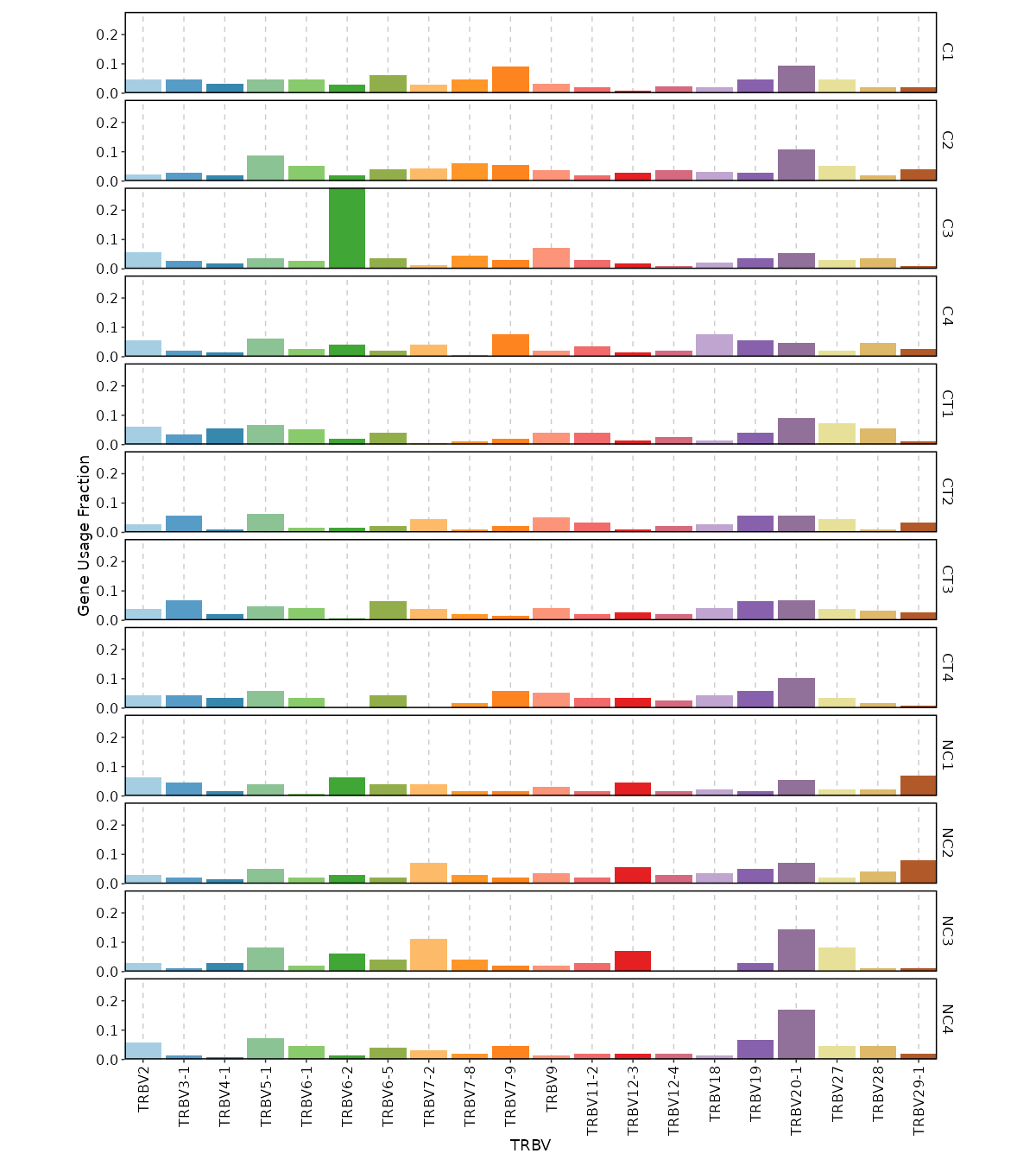

gene_usages(ns) — Explore gene usages.- - top (type=int): How many top (ranked by total usage across samples) genes to show in the plots.

Use0to use all genes. - - norm (flag): If True then use proportions of genes, else use counts of genes.

- - by: Groupings to show gene usages, passed to the

.byargument ofvis(imm_gu_top, .by = <values>).

Multiple columns should be separated by,. - - vis_args (type=json): Other arguments for the plotting functions.

- - devpars (ns): The parameters for the plotting device.

- width (type=int): The width of the plot.

- height (type=int): The height of the plot.

- res (type=int): The resolution of the plot. - - subset: Subset the data before calculating the clonotype volumes.

The whole data will be expanded to cell level, and then subsetted.

Clone sizes will be re-calculated based on the subsetted data. - - analyses (ns;order=8): Perform gene usage analyses.

- method: The method to control how the data is going to be preprocessed and analysed.

One ofjs,cor,cosine,pca,mdsandtsne. Can also be combined with following methods

for the actual analyses:hclust,kmeans,dbscan, andkruskal. For example:cosine+hclust.

You can also set tononeto skip the analyses.

See https://immunarch.com/articles/web_only/v5_gene_usage.html.

- vis_args (type=json): Other arguments for the plotting functions.

- devpars (ns): The parameters for the plotting device.

- width (type=int): The width of the plot.

- height (type=int): The height of the plot.

- res (type=int): The resolution of the plot.

- cases (type=json): If you have multiple cases, you can use this argument to specify them.

The keys will be the names of the cases.

The values will be passed to the corresponding arguments above.

If any of these arguments are not specified, the values inenvs.gene_usages.analyseswill be used.

If NO cases are specified, the default case will be added, with the nameDEFAULTand the

values ofenvs.gene_usages.analyses.method,envs.gene_usages.analyses.vis_argsandenvs.gene_usages.analyses.devpars. - - cases (type=json;order=9): If you have multiple cases, you can use this argument to specify them.

The keys will be used as the names of the cases.

The values will be passed to the corresponding arguments above.

If any of these arguments are not specified, the values inenvs.gene_usageswill be used.

If NO cases are specified, the default case will be added, with the nameDEFAULTand the

values ofenvs.gene_usages.top,envs.gene_usages.norm,envs.gene_usages.by,envs.gene_usages.vis_args,envs.gene_usages.devparsandenvs.gene_usages.analyses.

- - top (type=int): How many top (ranked by total usage across samples) genes to show in the plots.

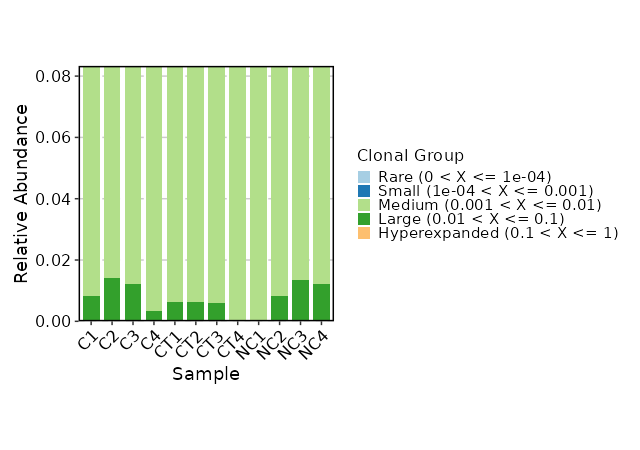

hom_clones(ns) — Explore homeo clonotypes.- - by: Groupings when visualize homeo clones, passed to the

.byargument ofvis(imm_hom, .by = <values>).

Multiple columns should be separated by,. - - marks (ns): A dict with the threshold of the half-closed intervals that mark off clonal groups.

Passed to the.clone.typesarguments ofrepClonoality().

The keys could be:

- Rare (type=float): the rare clonotypes

- Small (type=float): the small clonotypes

- Medium (type=float): the medium clonotypes

- Large (type=float): the large clonotypes

- Hyperexpanded (type=float): the hyperexpanded clonotypes - - subset: Subset the data before calculating the clonotype volumes.

The whole data will be expanded to cell level, and then subsetted.

Clone sizes will be re-calculated based on the subsetted data. - - devpars (ns): The parameters for the plotting device.

- width (type=int): The width of the plot.

- height (type=int): The height of the plot.

- res (type=int): The resolution of the plot. - - cases (type=json;order=9): If you have multiple cases, you can use this argument to specify them.

The keys will be the names of the cases.

The values will be passed to the corresponding arguments above.

If any of these arguments are not specified, the values inenvs.hom_cloneswill be used.

If NO cases are specified, the default case will be added, with the nameDEFAULTand the

values ofenvs.hom_clones.by,envs.hom_clones.marksandenvs.hom_clones.devpars.

- - by: Groupings when visualize homeo clones, passed to the

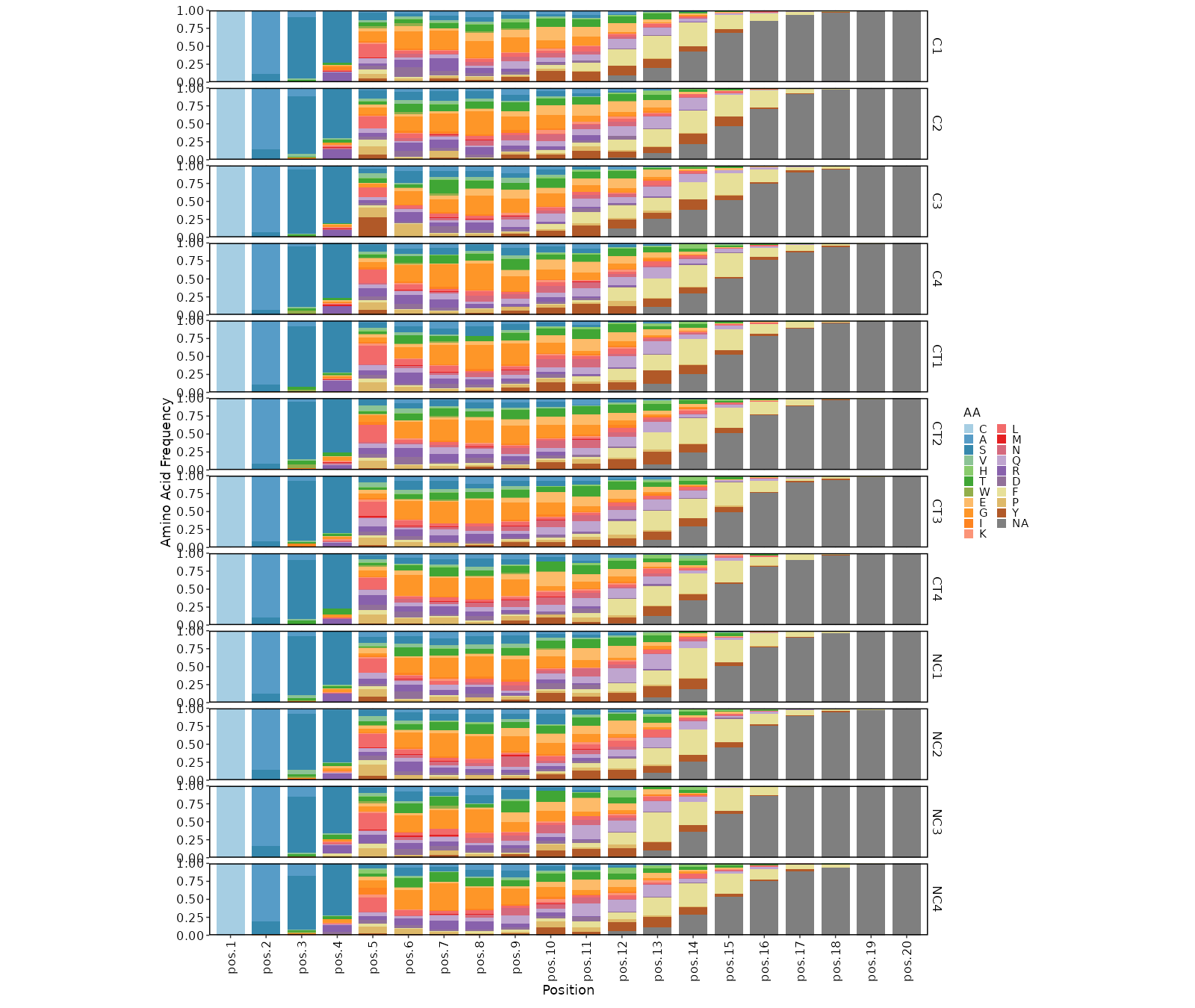

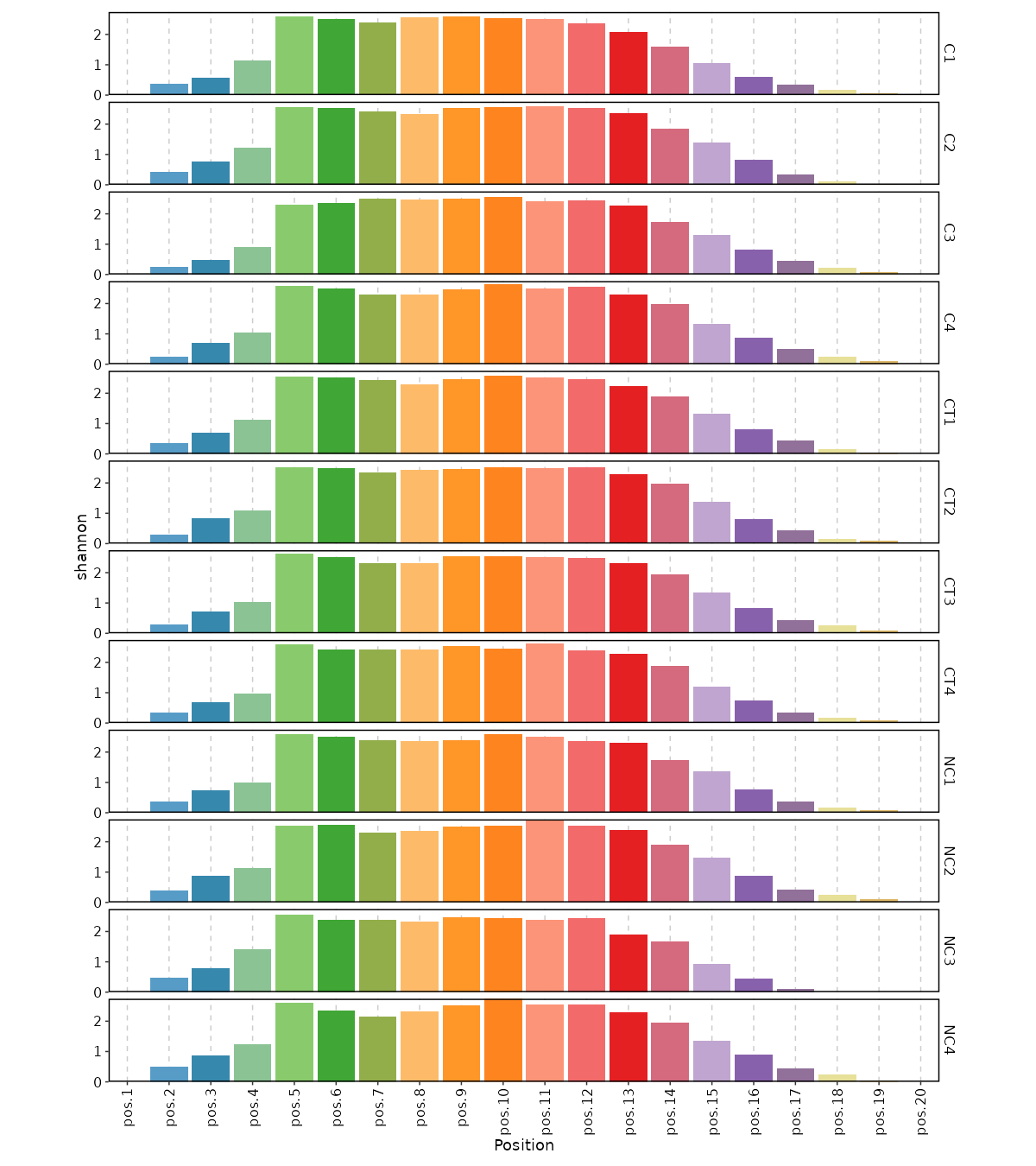

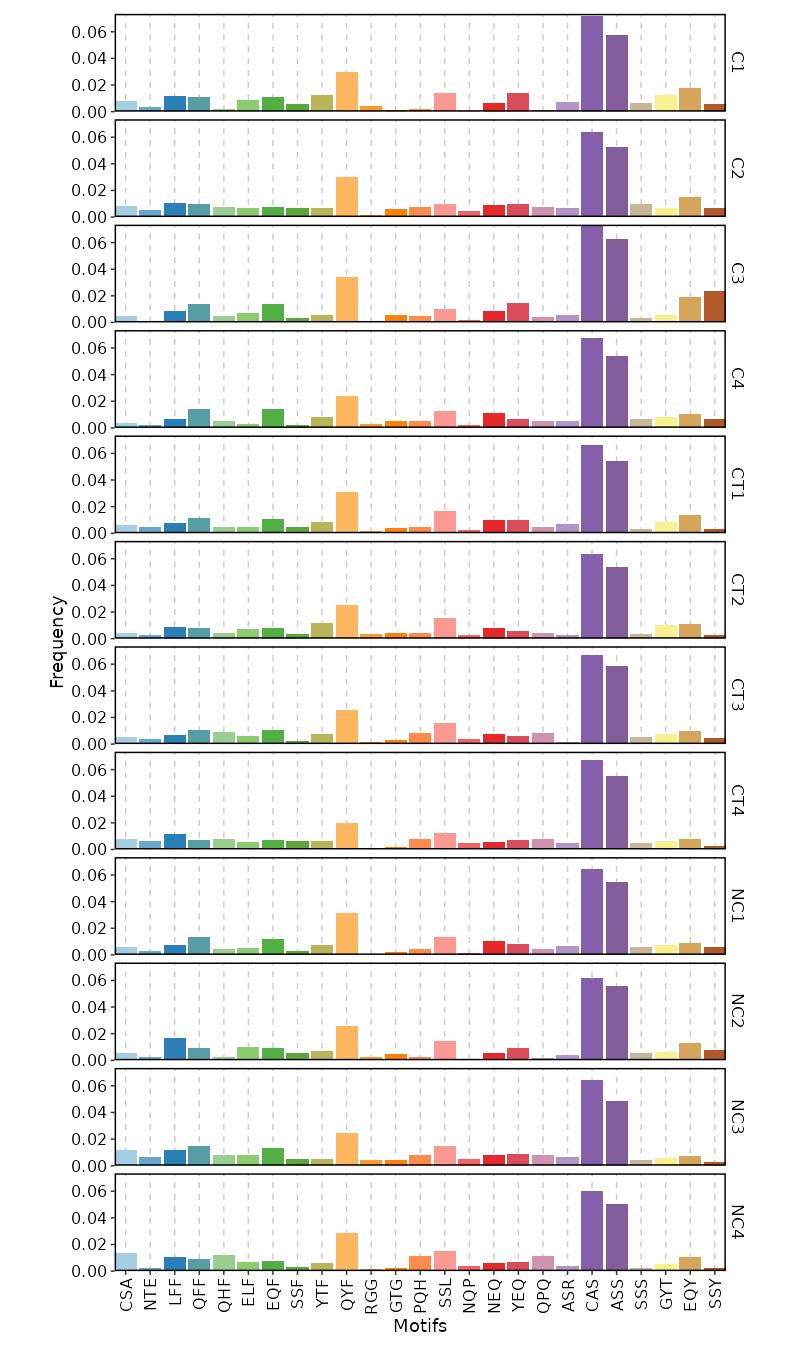

kmers(ns) — Arguments for kmer analysis.- - k (type=int): The length of kmer.

- - head (type=int): The number of top kmers to show.

- - vis_args (type=json): Other arguments for the plotting functions.

- - devpars (ns): The parameters for the plotting device.

- width (type=int): The width of the plot.

- height (type=int): The height of the plot.

- res (type=int): The resolution of the plot. - - subset: Subset the data before calculating the clonotype volumes.

The whole data will be expanded to cell level, and then subsetted.

Clone sizes will be re-calculated based on the subsetted data. - - profiles (ns;order=8): Arguments for sequence profilings.

- method (choice): The method for the position matrix.

For more information see https://en.wikipedia.org/wiki/Position_weight_matrix.

- freq: position frequency matrix (PFM) - a matrix with occurences of each amino acid in each position.

- prob: position probability matrix (PPM) - a matrix with probabilities of each amino acid in each position.

- wei: position weight matrix (PWM) - a matrix with log likelihoods of PPM elements.

- self: self-information matrix (SIM) - a matrix with self-information of elements in PWM.

- vis_args (type=json): Other arguments for the plotting functions.

- devpars (ns): The parameters for the plotting device.

- width (type=int): The width of the plot.

- height (type=int): The height of the plot.

- res (type=int): The resolution of the plot.

- cases (type=json): If you have multiple cases, you can use this argument to specify them.

The keys will be the names of the cases.

The values will be passed to the corresponding arguments above.

If any of these arguments are not specified, the values inenvs.kmers.profileswill be used.

If NO cases are specified, the default case will be added, with the nameDEFAULTand the

values ofenvs.kmers.profiles.method,envs.kmers.profiles.vis_argsandenvs.kmers.profiles.devpars. - - cases (type=json;order=9): If you have multiple cases, you can use this argument to specify them.

The keys will be used as the names of the cases.

The values will be passed to the corresponding arguments above.

If any of these arguments are not specified, the default case will be added, with the nameDEFAULTand the

values ofenvs.kmers.k,envs.kmers.head,envs.kmers.vis_argsandenvs.kmers.devpars.

lens(ns) — Explore clonotype CDR3 lengths.- - by: Groupings when visualize clonotype lengths, passed to the

.byargument ofvis(imm_len, .by = <values>).

Multiple columns should be separated by,. - - devpars (ns): The parameters for the plotting device.

- width (type=int): The width of the plot.

- height (type=int): The height of the plot.

- res (type=int): The resolution of the plot. - - subset: Subset the data before calculating the clonotype volumes.

The whole data will be expanded to cell level, and then subsetted.

Clone sizes will be re-calculated based on the subsetted data. - - cases (type=json;order=9): If you have multiple cases, you can use this argument to specify them.

The keys will be the names of the cases.

The values will be passed to the corresponding arguments above.

If any of these arguments are not specified, the values inenvs.lenswill be used.

If NO cases are specified, the default case will be added, with the nameDEFAULTand the

values ofenvs.lens.by,envs.lens.devpars.

- - by: Groupings when visualize clonotype lengths, passed to the

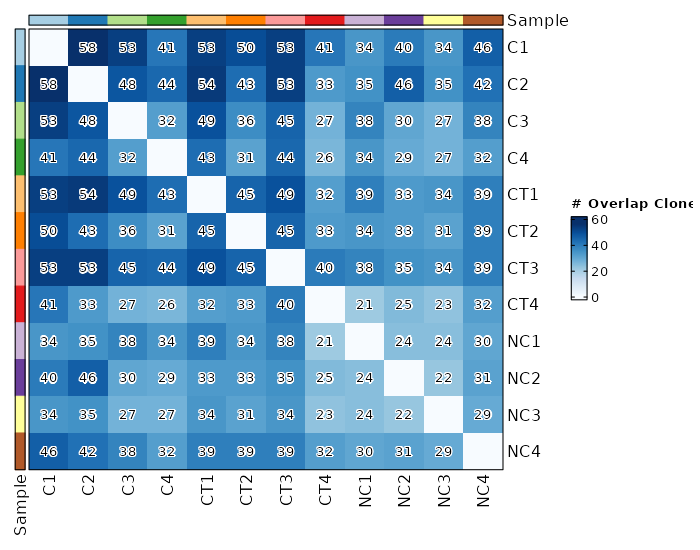

mutaters(type=json;order=-9) — The mutaters passed todplyr::mutate()on expanded cell-level datato add new columns. The keys will be the names of the columns, and the values will be the expressions. The new names can be used involumes,lens,counts,top_clones,rare_clones,hom_clones,gene_usages,divs, etc.overlaps(ns) — Explore clonotype overlaps.- - method (choice): The method to calculate overlaps.

- public: number of public clonotypes between two samples.

- overlap: a normalised measure of overlap similarity.

It is defined as the size of the intersection divided by the smaller of the size of the two sets.

- jaccard: conceptually a percentage of how many objects two sets have in common out of how many objects they have total.

- tversky: an asymmetric similarity measure on sets that compares a variant to a prototype.

- cosine: a measure of similarity between two non-zero vectors of an inner product space that measures the cosine of the angle between them.

- morisita: how many times it is more likely to randomly select two sampled points from the same quadrat (the dataset is

covered by a regular grid of changing size) then it would be in the case of a random distribution generated from

a Poisson process. Duplicate objects are merged with their counts are summed up.

- inc+public: incremental overlaps of the N most abundant clonotypes with incrementally growing N using the public method.

- inc+morisita: incremental overlaps of the N most abundant clonotypes with incrementally growing N using the morisita method. - - subset: Subset the data before calculating the clonotype volumes.

The whole data will be expanded to cell level, and then subsetted.

Clone sizes will be re-calculated based on the subsetted data. - - vis_args (type=json): Other arguments for the plotting functions

vis(imm_ov, ...). - - devpars (ns): The parameters for the plotting device.

- width (type=int): The width of the plot.

- height (type=int): The height of the plot.

- res (type=int): The resolution of the plot. - - analyses (ns;order=8): Perform overlap analyses.

- method: Plot the samples with these dimension reduction methods.

The methods could behclust,tsne,mdsor combination of them, such asmds+hclust.

You can also set tononeto skip the analyses.

They could also be combined, for example,mds+hclust.

See https://immunarch.com/reference/repOverlapAnalysis.html.

- vis_args (type=json): Other arguments for the plotting functions.

- devpars (ns): The parameters for the plotting device.

- width (type=int): The width of the plot.

- height (type=int): The height of the plot.

- res (type=int): The resolution of the plot.

- cases (type=json): If you have multiple cases, you can use this argument to specify them.

The keys will be the names of the cases.

The values will be passed to the corresponding arguments above.

If any of these arguments are not specified, the values inenvs.overlaps.analyseswill be used.

If NO cases are specified, the default case will be added, with the nameDEFAULTand the

values ofenvs.overlaps.analyses.method,envs.overlaps.analyses.vis_argsandenvs.overlaps.analyses.devpars. - - cases (type=json;order=9): If you have multiple cases, you can use this argument to specify them.

The keys will be the names of the cases.

The values will be passed to the corresponding arguments above.

If any of these arguments are not specified, the values inenvs.overlapswill be used.

If NO cases are specified, the default case will be added, with the key the default method and the

values ofenvs.overlaps.method,envs.overlaps.vis_args,envs.overlaps.devparsandenvs.overlaps.analyses.

- - method (choice): The method to calculate overlaps.

prefix— The prefix to the barcodes. You can use placeholder like{Sample}_The prefixed barcodes will be used to match the barcodes inin.metafile. Not used ifin.metafileis not specified. IfNone(default),immdata$prefixwill be used.rare_clones(ns) — Explore rare clonotypes.- - by: Groupings when visualize rare clones, passed to the

.byargument ofvis(imm_rare, .by = <values>).

Multiple columns should be separated by,. - - marks (list;itype=int): A numerical vector with ranges of abundance for the rare clonotypes in the dataset.

Passed to the.boundargument ofrepClonoality(). - - devpars (ns): The parameters for the plotting device.

- width (type=int): The width of the plot.

- height (type=int): The height of the plot.

- res (type=int): The resolution of the plot. - - subset: Subset the data before calculating the clonotype volumes.

The whole data will be expanded to cell level, and then subsetted.

Clone sizes will be re-calculated based on the subsetted data. - - cases (type=json;order=9): If you have multiple cases, you can use this argument to specify them.

The keys will be the names of the cases.

The values will be passed to the corresponding arguments above.

If any of these arguments are not specified, the values inenvs.rare_cloneswill be used.

If NO cases are specified, the default case will be added, with the nameDEFAULTand the

values ofenvs.rare_clones.by,envs.rare_clones.marksandenvs.rare_clones.devpars.

- - by: Groupings when visualize rare clones, passed to the

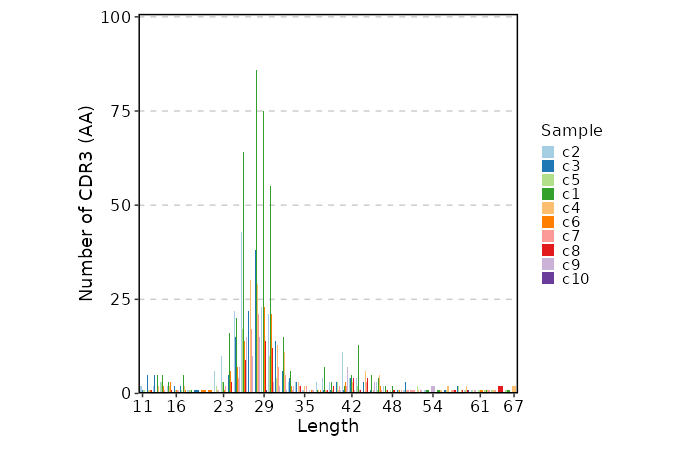

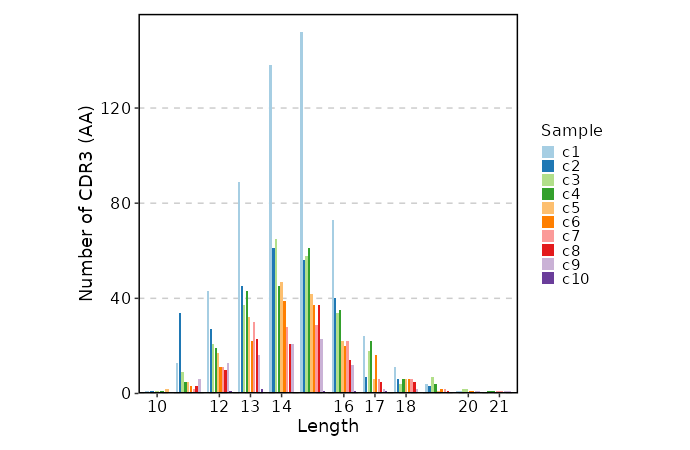

spects(ns) — Spectratyping analysis.- - quant: Select the column with clonal counts to evaluate.

Set toidto count every clonotype once.

Set tocountto take into the account number of clones per clonotype.

Multiple columns should be separated by,. - - col: A string that specifies the column(s) to be processed.

The output is one of the following strings, separated by the plus sign: "nt" for nucleotide sequences,

"aa" for amino acid sequences, "v" for V gene segments, "j" for J gene segments.

E.g., pass "aa+v" for spectratyping on CDR3 amino acid sequences paired with V gene segments,

i.e., in this case a unique clonotype is a pair of CDR3 amino acid and V gene segment.

Clonal counts of equal clonotypes will be summed up. - - subset: Subset the data before calculating the clonotype volumes.

The whole data will be expanded to cell level, and then subsetted.

Clone sizes will be re-calculated based on the subsetted data. - - devpars (ns): The parameters for the plotting device.

- width (type=int): The width of the plot.

- height (type=int): The height of the plot.

- res (type=int): The resolution of the plot. - - cases (type=json;order=9): If you have multiple cases, you can use this argument to specify them.

The keys will be the names of the cases.

The values will be passed to the corresponding arguments above.

If any of these arguments are not specified, the values inenvs.spectswill be used.

By default, aBy_Clonotypecase will be added, with the values ofquant = "id"andcol = "nt", and

aBy_Num_Clonescase will be added, with the values ofquant = "count"andcol = "aa+v".

- - quant: Select the column with clonal counts to evaluate.

top_clones(ns) — Explore top clonotypes.- - by: Groupings when visualize top clones, passed to the

.byargument ofvis(imm_top, .by = <values>).

Multiple columns should be separated by,. - - marks (list;itype=int): A numerical vector with ranges of the top clonotypes. Passed to the

.headargument ofrepClonoality(). - - devpars (ns): The parameters for the plotting device.

- width (type=int): The width of the plot.

- height (type=int): The height of the plot.

- res (type=int): The resolution of the plot. - - subset: Subset the data before calculating the clonotype volumes.

The whole data will be expanded to cell level, and then subsetted.

Clone sizes will be re-calculated based on the subsetted data. - - cases (type=json;order=9): If you have multiple cases, you can use this argument to specify them.

The keys will be the names of the cases.

The values will be passed to the corresponding arguments above.

If any of these arguments are not specified, the values inenvs.top_cloneswill be used.

If NO cases are specified, the default case will be added, with the nameDEFAULTand the

values ofenvs.top_clones.by,envs.top_clones.marksandenvs.top_clones.devpars.

- - by: Groupings when visualize top clones, passed to the

trackings(ns) — Parameters to control the clonotype tracking analysis.- - targets: Either a set of CDR3AA seq of clonotypes to track (separated by

,), or simply an integer to track the top N clonotypes. - - subject_col: The column name in meta data that contains the subjects/samples on the x-axis of the alluvial plot.

If the values in this column are not unique, the values will be merged with the values insubject_colto form the x-axis.

This defaults toSample. - - subset: Subset the data before calculating the clonotype volumes.

The whole data will be expanded to cell level, and then subsetted.

Clone sizes will be re-calculated based on the subsetted data. - - subjects (list): A list of values from

subject_colto show in the alluvial plot on the x-axis.

If not specified, all values insubject_colwill be used.

This also specifies the order of the x-axis. - - cases (type=json;order=9): If you have multiple cases, you can use this argument to specify them.

The keys will be used as the names of the cases.

The values will be passed to the corresponding arguments (target,subject_col, andsubjects).

If any of these arguments are not specified, the values inenvs.trackingswill be used.

If NO cases are specified, the default case will be added, with the nameDEFAULTand the

values ofenvs.trackings.target,envs.trackings.subject_col, andenvs.trackings.subjects.

- - targets: Either a set of CDR3AA seq of clonotypes to track (separated by

vj_junc(ns) — Arguments for VJ junction circos plots.This analysis is not included inimmunarch. It is a separate implementation usingcirclize.- - by: Groupings to show VJ usages. Typically, this is the

Samplecolumn, so that the VJ usages are shown for each sample.

But you can also use other columns, such asSubjectto show the VJ usages for each subject.

Multiple columns should be separated by,. - - by_clones (flag): If True, the VJ usages will be calculated based on the distinct clonotypes, instead of the individual cells.

- - subset: Subset the data before plotting VJ usages.

The whole data will be expanded to cell level, and then subsetted.

Clone sizes will be re-calculated based on the subsetted data, which will affect the VJ usages at cell level (by_clones=False). - - devpars (ns): The parameters for the plotting device.

- width (type=int): The width of the plot.

- height (type=int): The height of the plot.

- res (type=int): The resolution of the plot. - - cases (type=json;order=9): If you have multiple cases, you can use this argument to specify them.

The keys will be used as the names of the cases. The values will be passed to the corresponding arguments above.

If any of these arguments are not specified, the values inenvs.vj_juncwill be used.

If NO cases are specified, the default case will be added, with the nameDEFAULTand the

values ofenvs.vj_junc.by,envs.vj_junc.by_clonesenvs.vj_junc.subsetandenvs.vj_junc.devpars.

- - by: Groupings to show VJ usages. Typically, this is the

volumes(ns) — Explore clonotype volume (sizes).- - by: Groupings when visualize clonotype volumes, passed to the

.byargument ofvis(imm_vol, .by = <values>).

Multiple columns should be separated by,. - - devpars (ns): The parameters for the plotting device.

- width (type=int): The width of the plot.

- height (type=int): The height of the plot.

- res (type=int): The resolution of the plot. - - subset: Subset the data before calculating the clonotype volumes.

The whole data will be expanded to cell level, and then subsetted.

Clone sizes will be re-calculated based on the subsetted data. - - cases (type=json;order=9): If you have multiple cases, you can use this argument to specify them.

The keys will be the names of the cases.

The values will be passed to the corresponding arguments above.

If any of these arguments are not specified, the values inenvs.volumeswill be used.

If NO cases are specified, the default case will be added, with the nameDEFAULTand the

values ofenvs.volume.by,envs.volume.devpars.

- - by: Groupings when visualize clonotype volumes, passed to the

__init_subclass__()— Do the requirements inferring since we need them to build up theprocess relationship </>from_proc(proc,name,desc,envs,envs_depth,cache,export,error_strategy,num_retries,forks,input_data,order,plugin_opts,requires,scheduler,scheduler_opts,submission_batch)(Type) — Create a subclass of Proc using another Proc subclass or Proc itself</>gc()— GC process for the process to save memory after it's done</>log(level,msg,*args,logger)— Log message for the process</>run()— Init all other properties and jobs</>

pipen.proc.ProcMeta(name, bases, namespace, **kwargs)

Meta class for Proc

__call__(cls,*args,**kwds)(Proc) — Make sure Proc subclasses are singletons</>__instancecheck__(cls,instance)— Override for isinstance(instance, cls).</>__repr__(cls)(str) — Representation for the Proc subclasses</>__subclasscheck__(cls,subclass)— Override for issubclass(subclass, cls).</>register(cls,subclass)— Register a virtual subclass of an ABC.</>

register(cls, subclass)Register a virtual subclass of an ABC.

Returns the subclass, to allow usage as a class decorator.

__instancecheck__(cls, instance)Override for isinstance(instance, cls).

__subclasscheck__(cls, subclass)Override for issubclass(subclass, cls).

__repr__(cls) → strRepresentation for the Proc subclasses

__call__(cls, *args, **kwds)Make sure Proc subclasses are singletons

*args(Any) — and**kwds(Any) — Arguments for the constructor

The Proc instance

from_proc(proc, name=None, desc=None, envs=None, envs_depth=None, cache=None, export=None, error_strategy=None, num_retries=None, forks=None, input_data=None, order=None, plugin_opts=None, requires=None, scheduler=None, scheduler_opts=None, submission_batch=None)

Create a subclass of Proc using another Proc subclass or Proc itself

proc(Type) — The Proc subclassname(str, optional) — The new name of the processdesc(str, optional) — The new description of the processenvs(Mapping, optional) — The arguments of the process, will overwrite parent oneThe items that are specified will be inheritedenvs_depth(int, optional) — How deep to update the envs when subclassed.cache(bool, optional) — Whether we should check the cache for the jobsexport(bool, optional) — When True, the results will be exported to<pipeline.outdir>Defaults to None, meaning only end processes will export. You can set it to True/False to enable or disable exporting for processeserror_strategy(str, optional) — How to deal with the errors- - retry, ignore, halt

- - halt to halt the whole pipeline, no submitting new jobs

- - terminate to just terminate the job itself

num_retries(int, optional) — How many times to retry to jobs once error occursforks(int, optional) — New forks for the new processinput_data(Any, optional) — The input data for the process. Only when this processis a start processorder(int, optional) — The order to execute the new processplugin_opts(Mapping, optional) — The new plugin options, unspecified items will beinherited.requires(Sequence, optional) — The required processes for the new processscheduler(str, optional) — The new shedular to run the new processscheduler_opts(Mapping, optional) — The new scheduler options, unspecified items willbe inherited.submission_batch(int, optional) — How many jobs to be submited simultaneously.

The new process class

__init_subclass__()

Do the requirements inferring since we need them to build up theprocess relationship

run()

Init all other properties and jobs

gc()

GC process for the process to save memory after it's done

log(level, msg, *args, logger=<LoggerAdapter pipen.core (WARNING)>)

Log message for the process

level(int | str) — The log level of the recordmsg(str) — The message to log*args— The arguments to format the messagelogger(LoggerAdapter, optional) — The logging logger

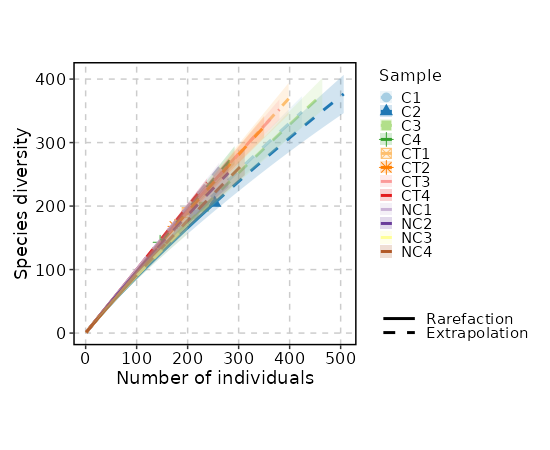

biopipen.ns.tcr.SampleDiversity(*args, **kwds) → Proc

Sample diversity and rarefaction analysis

This is part of Immunarch, in case we have multiple dataset to compare.

cache— Should we detect whether the jobs are cached?desc— The description of the process. Will use the summary fromthe docstring by default.dirsig— When checking the signature for caching, whether should we walkthrough the content of the directory? This is sometimes time-consuming if the directory is big.envs— The arguments that are job-independent, useful for common optionsacross jobs.envs_depth— How deep to update the envs when subclassed.error_strategy— How to deal with the errors- - retry, ignore, halt

- - halt to halt the whole pipeline, no submitting new jobs

- - terminate to just terminate the job itself

export— When True, the results will be exported to<pipeline.outdir>Defaults to None, meaning only end processes will export. You can set it to True/False to enable or disable exporting for processesforks— How many jobs to run simultaneously?input— The keys for the input channelinput_data— The input data (will be computed for dependent processes)lang— The language for the script to run. Should be the path to theinterpreter iflangis not in$PATH.name— The name of the process. Will use the class name by default.nexts— Computed fromrequiresto build the process relationshipsnum_retries— How many times to retry to jobs once error occursorder— The execution order for this process. The bigger the numberis, the later the process will be executed. Default: 0. Note that the dependent processes will always be executed first. This doesn't work for start processes either, whose orders are determined byPipen.set_starts()output— The output keys for the output channel(the data will be computed)output_data— The output data (to pass to the next processes)plugin_opts— Options for process-level pluginsrequires— The dependency processesscheduler— The scheduler to run the jobsscheduler_opts— The options for the schedulerscript— The script template for the processsubmission_batch— How many jobs to be submited simultaneously.The program entrance for some schedulers may take too much resources when submitting a job or checking the job status. So we may use a smaller number here to limit the simultaneous submissions.template— Define the template engine to use.This could be either a template engine or a dict with keyengineindicating the template engine and the rest the arguments passed to the constructor of thepipen.template.Templateobject. The template engine could be either the name of the engine, currently jinja2 and liquidpy are supported, or a subclass ofpipen.template.Template. You can subclasspipen.template.Templateto use your own template engine.

immdata— The data loaded byimmunarch::repLoad()

outdir— The output directory

devpars— The parameters for the plotting deviceIt is a dict, and keys are the methods and values are dicts with width, height and res that will be passed topng()If not provided, 1000, 1000 and 100 will be used.div_methods— Methods to calculate diversitiesIt is a dict, keys are the method names, values are the groupings. Each one is a case, multiple columns for a case are separated by,For example:{"div": ["Status", "Sex", "Status,Sex"]}will run true diversity for samples grouped byStatus,Sex, and both. The diversity for each sample without grouping will also be added anyway. Supported methods:chao1,hill,div,gini.simp,inv.simp,gini, andraref. See also https://immunarch.com/articles/web_only/v6_diversity.html.

__init_subclass__()— Do the requirements inferring since we need them to build up theprocess relationship </>from_proc(proc,name,desc,envs,envs_depth,cache,export,error_strategy,num_retries,forks,input_data,order,plugin_opts,requires,scheduler,scheduler_opts,submission_batch)(Type) — Create a subclass of Proc using another Proc subclass or Proc itself</>gc()— GC process for the process to save memory after it's done</>log(level,msg,*args,logger)— Log message for the process</>run()— Init all other properties and jobs</>

pipen.proc.ProcMeta(name, bases, namespace, **kwargs)

Meta class for Proc

__call__(cls,*args,**kwds)(Proc) — Make sure Proc subclasses are singletons</>__instancecheck__(cls,instance)— Override for isinstance(instance, cls).</>__repr__(cls)(str) — Representation for the Proc subclasses</>__subclasscheck__(cls,subclass)— Override for issubclass(subclass, cls).</>register(cls,subclass)— Register a virtual subclass of an ABC.</>

register(cls, subclass)Register a virtual subclass of an ABC.

Returns the subclass, to allow usage as a class decorator.

__instancecheck__(cls, instance)Override for isinstance(instance, cls).

__subclasscheck__(cls, subclass)Override for issubclass(subclass, cls).

__repr__(cls) → strRepresentation for the Proc subclasses

__call__(cls, *args, **kwds)Make sure Proc subclasses are singletons

*args(Any) — and**kwds(Any) — Arguments for the constructor

The Proc instance

from_proc(proc, name=None, desc=None, envs=None, envs_depth=None, cache=None, export=None, error_strategy=None, num_retries=None, forks=None, input_data=None, order=None, plugin_opts=None, requires=None, scheduler=None, scheduler_opts=None, submission_batch=None)

Create a subclass of Proc using another Proc subclass or Proc itself

proc(Type) — The Proc subclassname(str, optional) — The new name of the processdesc(str, optional) — The new description of the processenvs(Mapping, optional) — The arguments of the process, will overwrite parent oneThe items that are specified will be inheritedenvs_depth(int, optional) — How deep to update the envs when subclassed.cache(bool, optional) — Whether we should check the cache for the jobsexport(bool, optional) — When True, the results will be exported to<pipeline.outdir>Defaults to None, meaning only end processes will export. You can set it to True/False to enable or disable exporting for processeserror_strategy(str, optional) — How to deal with the errors- - retry, ignore, halt

- - halt to halt the whole pipeline, no submitting new jobs

- - terminate to just terminate the job itself

num_retries(int, optional) — How many times to retry to jobs once error occursforks(int, optional) — New forks for the new processinput_data(Any, optional) — The input data for the process. Only when this processis a start processorder(int, optional) — The order to execute the new processplugin_opts(Mapping, optional) — The new plugin options, unspecified items will beinherited.requires(Sequence, optional) — The required processes for the new processscheduler(str, optional) — The new shedular to run the new processscheduler_opts(Mapping, optional) — The new scheduler options, unspecified items willbe inherited.submission_batch(int, optional) — How many jobs to be submited simultaneously.

The new process class

__init_subclass__()

Do the requirements inferring since we need them to build up theprocess relationship

run()

Init all other properties and jobs

gc()

GC process for the process to save memory after it's done

log(level, msg, *args, logger=<LoggerAdapter pipen.core (WARNING)>)

Log message for the process

level(int | str) — The log level of the recordmsg(str) — The message to log*args— The arguments to format the messagelogger(LoggerAdapter, optional) — The logging logger

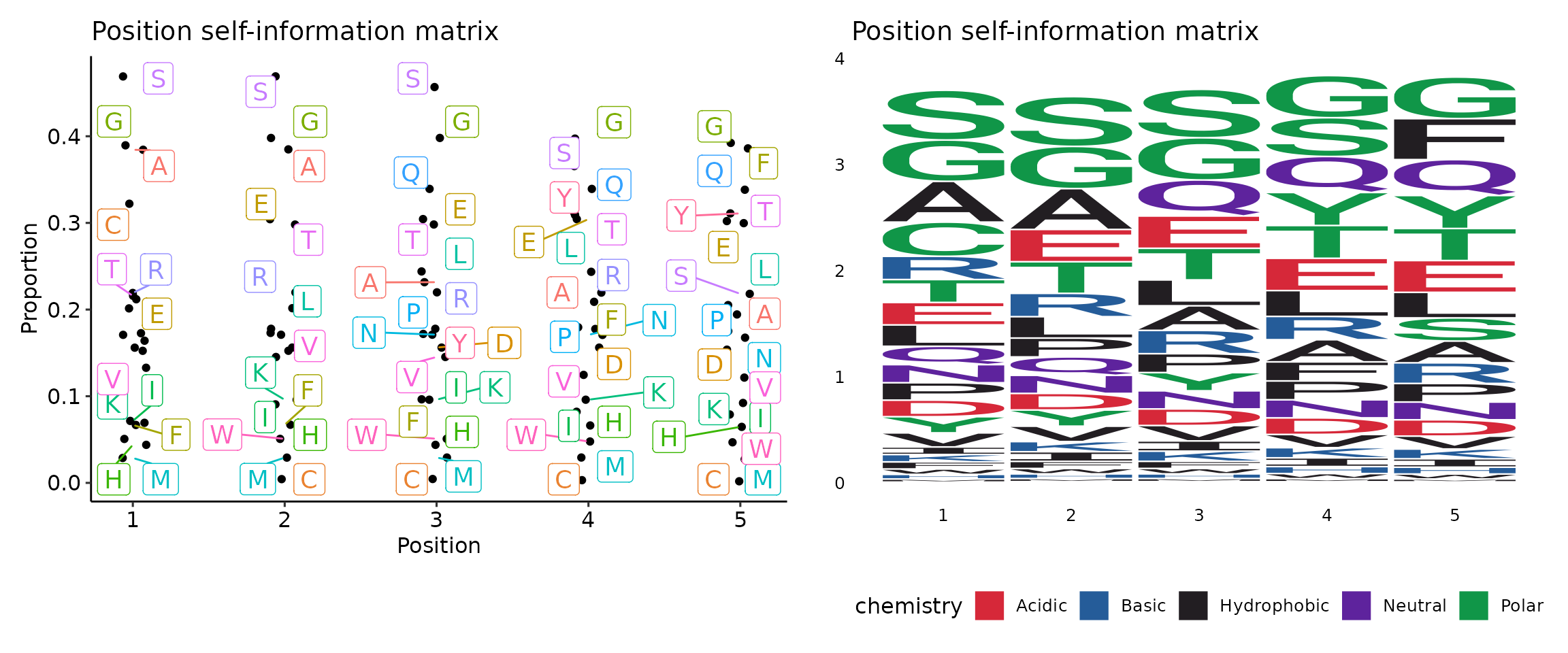

biopipen.ns.tcr.CloneResidency(*args, **kwds) → Proc

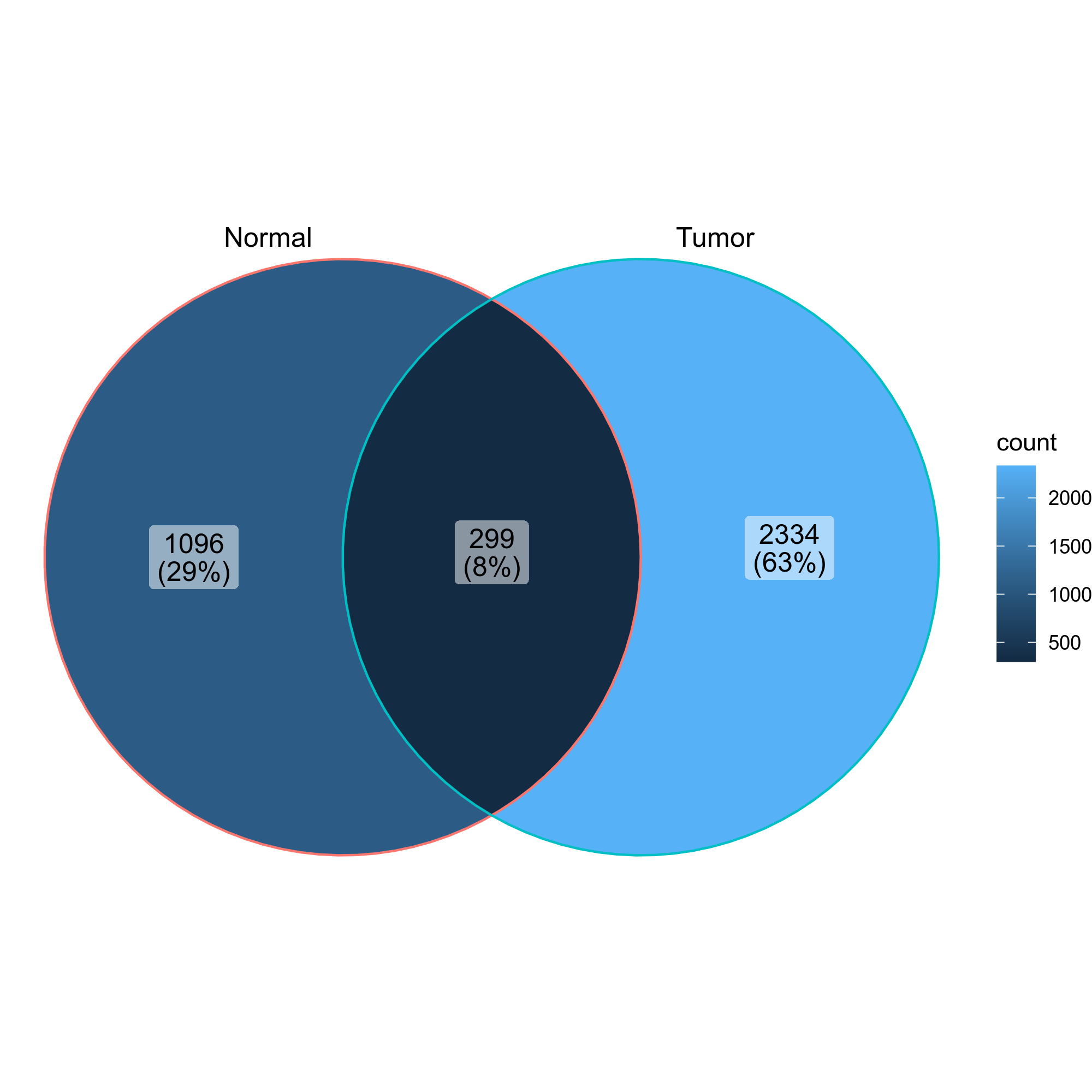

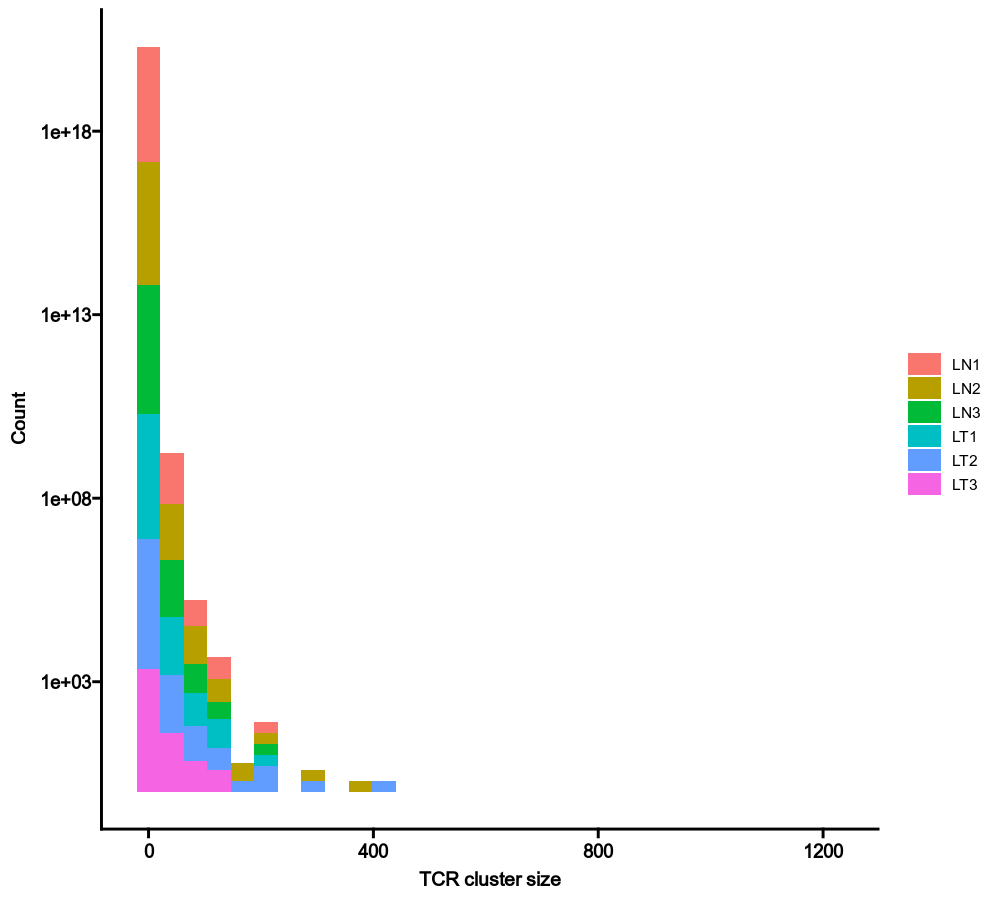

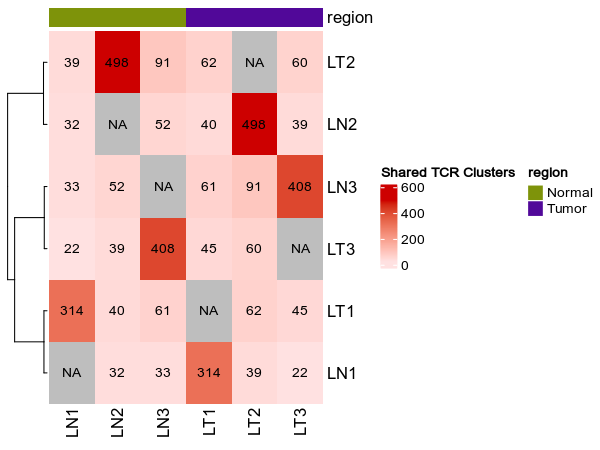

Identification of clone residency

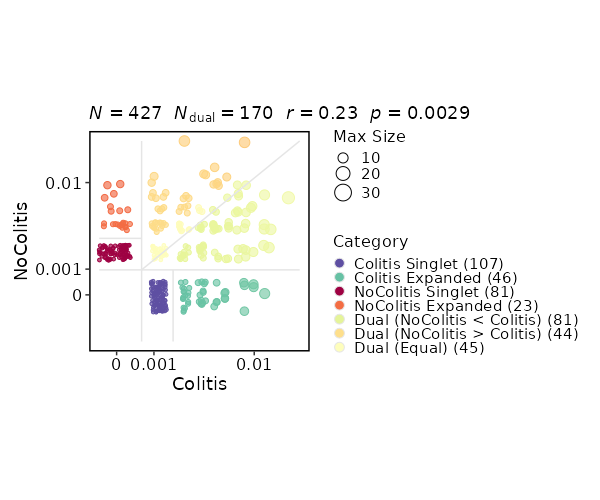

This process is used to investigate the residency of clones in groups, typically two samples (e.g. tumor and normal) from the same patient. But it can be used for any two groups of clones.

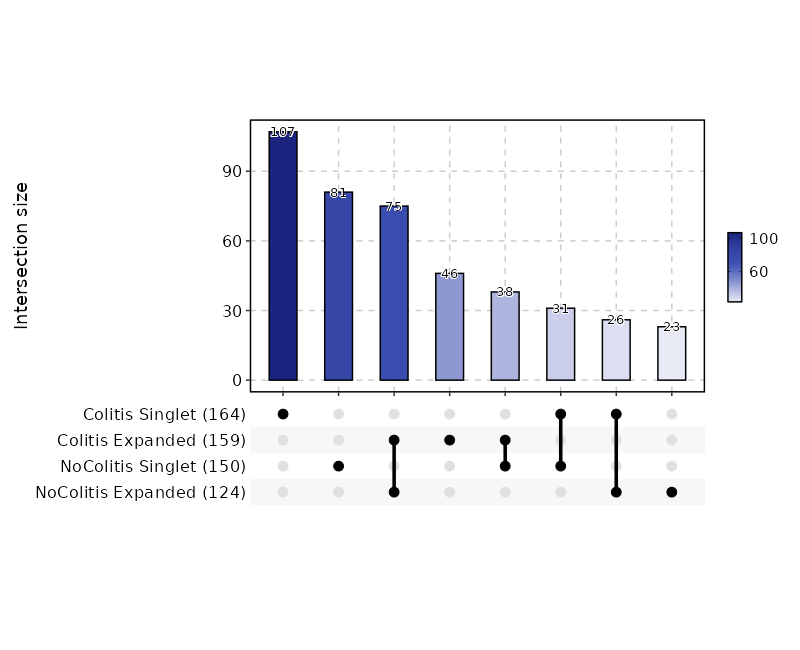

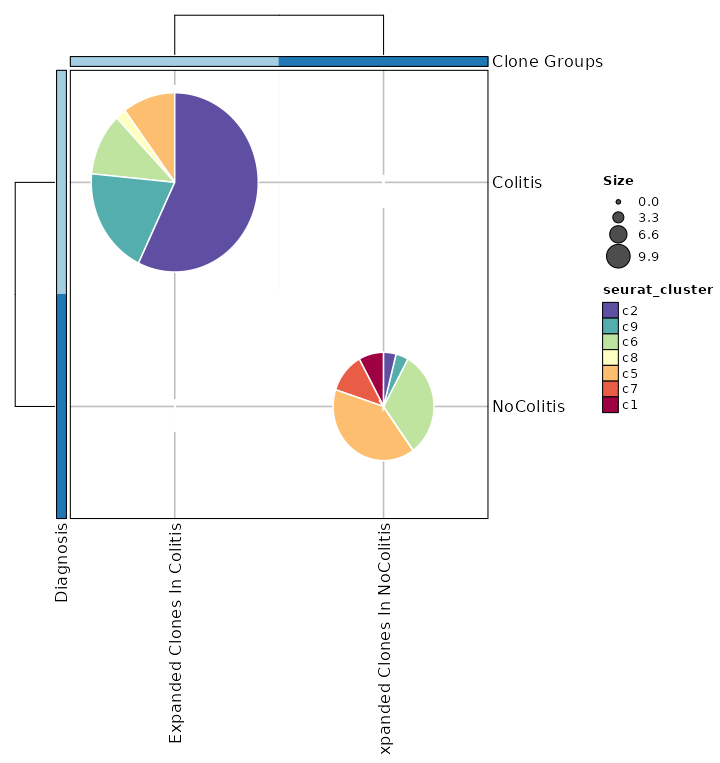

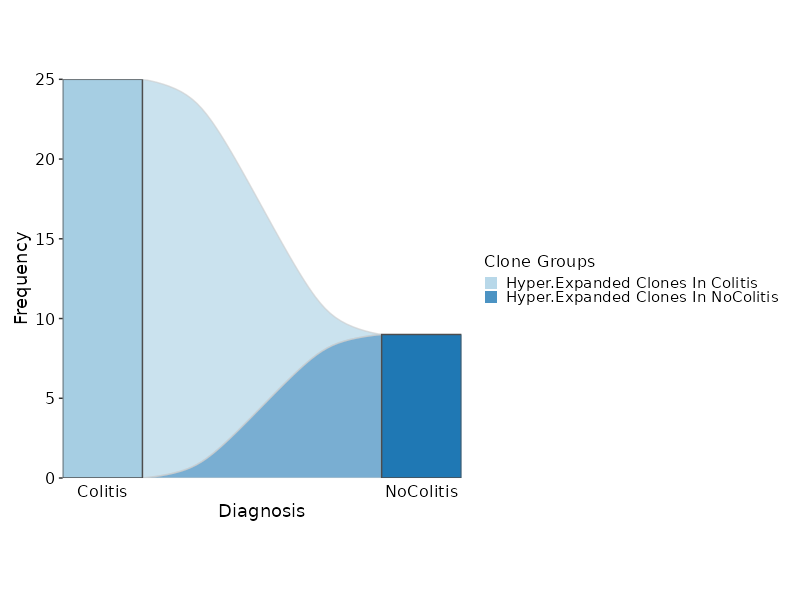

There are three types of output from this process

-

Count tables of the clones in the two groups

CDR3_aa Tumor Normal CASSYGLSWGSYEQYF 306 55 CASSVTGAETQYF 295 37 CASSVPSAHYNEQFF 197 9 ... ... ... -

Residency plots showing the residency of clones in the two groups

The points in the plot are jittered to avoid overplotting. The x-axis is the residency in the first group and the y-axis is the residency in the second group. The size of the points are relative to the normalized size of the clones. You may identify different types of clones in the plot based on their residency in the two groups:

- Collapsed (The clones that are collapsed in the second group)

- Dual (The clones that are present in both groups with equal size)

- Expanded (The clones that are expanded in the second group)

- First Group Multiplet (The clones only in the First Group with size > 1)

- Second Group Multiplet (The clones only in the Second Group with size > 1)

- First Group Singlet (The clones only in the First Group with size = 1)

- Second Group Singlet (The clones only in the Second Group with size = 1)

This idea is borrowed from this paper:

-

Venn diagrams showing the overlap of the clones in the two groups

{: width="60%"}

{: width="60%"}

cache— Should we detect whether the jobs are cached?desc— The description of the process. Will use the summary fromthe docstring by default.dirsig— When checking the signature for caching, whether should we walkthrough the content of the directory? This is sometimes time-consuming if the directory is big.envs— The arguments that are job-independent, useful for common optionsacross jobs.envs_depth— How deep to update the envs when subclassed.error_strategy— How to deal with the errors- - retry, ignore, halt

- - halt to halt the whole pipeline, no submitting new jobs

- - terminate to just terminate the job itself

export— When True, the results will be exported to<pipeline.outdir>Defaults to None, meaning only end processes will export. You can set it to True/False to enable or disable exporting for processesforks— How many jobs to run simultaneously?input— The keys for the input channelinput_data— The input data (will be computed for dependent processes)lang— The language for the script to run. Should be the path to theinterpreter iflangis not in$PATH.name— The name of the process. Will use the class name by default.nexts— Computed fromrequiresto build the process relationshipsnum_retries— How many times to retry to jobs once error occursorder— The execution order for this process. The bigger the numberis, the later the process will be executed. Default: 0. Note that the dependent processes will always be executed first. This doesn't work for start processes either, whose orders are determined byPipen.set_starts()output— The output keys for the output channel(the data will be computed)output_data— The output data (to pass to the next processes)plugin_opts— Options for process-level pluginsrequires— The dependency processesscheduler— The scheduler to run the jobsscheduler_opts— The options for the schedulerscript— The script template for the processsubmission_batch— How many jobs to be submited simultaneously.The program entrance for some schedulers may take too much resources when submitting a job or checking the job status. So we may use a smaller number here to limit the simultaneous submissions.template— Define the template engine to use.This could be either a template engine or a dict with keyengineindicating the template engine and the rest the arguments passed to the constructor of thepipen.template.Templateobject. The template engine could be either the name of the engine, currently jinja2 and liquidpy are supported, or a subclass ofpipen.template.Template. You can subclasspipen.template.Templateto use your own template engine.

immdata— The data loaded byimmunarch::repLoad()metafile— A cell-level metafile, where the first column must be the cell barcodesthat match the cell barcodes inimmdata. The other columns can be any metadata that you want to use for the analysis. The loaded metadata will be left-joined to the converted cell-level data fromimmdata. This can also be a Seurat object RDS file. If so, thesobj@meta.datawill be used as the metadata.

outdir— The output directory

cases(type=json) — If you have multiple cases, you can use this argumentto specify them. The keys will be used as the names of the cases. The values will be passed to the corresponding arguments. If no cases are specified, the default case will be added, with the nameDEFAULTand the values ofenvs.subject,envs.group,envs.orderandenvs.section. These values are also the defaults for the other cases.group— The key of group in metadata. This usually marks the samplesthat you want to compare. For example, Tumor vs Normal, post-treatment vs baseline It doesn't have to be 2 groups always. If there are more than 3 groups, instead of venn diagram, upset plots will be used.mutaters(type=json) — The mutaters passed todplyr::mutate()onthe cell-level data converted fromin.immdata. Ifin.metafileis provided, the mutaters will be applied to the joined data. The keys will be the names of the new columns, and the values will be the expressions. The new names can be used insubject,group,orderandsection.order(list) — The order of the values ingroup. In scatter/residency plots,XinX,Ywill be used as x-axis andYwill be used as y-axis. You can also have multiple orders. For example:["X,Y", "X,Z"]. If you only have two groups, you can setorder = ["X", "Y"], which will be the same asorder = ["X,Y"].prefix— The prefix of the cell barcodes in theSeuratobject.section— How the subjects aligned in the report. Multiple subjects withthe same value will be grouped together. Useful for cohort with large number of samples.subject(list) — The key of subject in metadata. The cloneresidency will be examined for this subject/patientsubset— The filter passed todplyr::filter()to filter the data for the cellsbefore calculating the clone residency. For example,Clones > 1to filter out singletons.upset_trans— The transformation to apply to the y axis of upset bar plots.For example,log10orsqrt. If not specified, the y axis will be plotted as is. Note that the position of the bar plots will be dodged instead of stacked when the transformation is applied. See also https://github.com/tidyverse/ggplot2/issues/3671upset_ymax— The maximum value of the y-axis in the upset bar plots.

__init_subclass__()— Do the requirements inferring since we need them to build up theprocess relationship </>from_proc(proc,name,desc,envs,envs_depth,cache,export,error_strategy,num_retries,forks,input_data,order,plugin_opts,requires,scheduler,scheduler_opts,submission_batch)(Type) — Create a subclass of Proc using another Proc subclass or Proc itself</>gc()— GC process for the process to save memory after it's done</>log(level,msg,*args,logger)— Log message for the process</>run()— Init all other properties and jobs</>

pipen.proc.ProcMeta(name, bases, namespace, **kwargs)

Meta class for Proc

__call__(cls,*args,**kwds)(Proc) — Make sure Proc subclasses are singletons</>__instancecheck__(cls,instance)— Override for isinstance(instance, cls).</>__repr__(cls)(str) — Representation for the Proc subclasses</>__subclasscheck__(cls,subclass)— Override for issubclass(subclass, cls).</>register(cls,subclass)— Register a virtual subclass of an ABC.</>

register(cls, subclass)Register a virtual subclass of an ABC.

Returns the subclass, to allow usage as a class decorator.

__instancecheck__(cls, instance)Override for isinstance(instance, cls).

__subclasscheck__(cls, subclass)Override for issubclass(subclass, cls).

__repr__(cls) → strRepresentation for the Proc subclasses

__call__(cls, *args, **kwds)Make sure Proc subclasses are singletons

*args(Any) — and**kwds(Any) — Arguments for the constructor

The Proc instance

from_proc(proc, name=None, desc=None, envs=None, envs_depth=None, cache=None, export=None, error_strategy=None, num_retries=None, forks=None, input_data=None, order=None, plugin_opts=None, requires=None, scheduler=None, scheduler_opts=None, submission_batch=None)

Create a subclass of Proc using another Proc subclass or Proc itself

proc(Type) — The Proc subclassname(str, optional) — The new name of the processdesc(str, optional) — The new description of the processenvs(Mapping, optional) — The arguments of the process, will overwrite parent oneThe items that are specified will be inheritedenvs_depth(int, optional) — How deep to update the envs when subclassed.cache(bool, optional) — Whether we should check the cache for the jobsexport(bool, optional) — When True, the results will be exported to<pipeline.outdir>Defaults to None, meaning only end processes will export. You can set it to True/False to enable or disable exporting for processeserror_strategy(str, optional) — How to deal with the errors- - retry, ignore, halt

- - halt to halt the whole pipeline, no submitting new jobs

- - terminate to just terminate the job itself

num_retries(int, optional) — How many times to retry to jobs once error occursforks(int, optional) — New forks for the new processinput_data(Any, optional) — The input data for the process. Only when this processis a start processorder(int, optional) — The order to execute the new processplugin_opts(Mapping, optional) — The new plugin options, unspecified items will beinherited.requires(Sequence, optional) — The required processes for the new processscheduler(str, optional) — The new shedular to run the new processscheduler_opts(Mapping, optional) — The new scheduler options, unspecified items willbe inherited.submission_batch(int, optional) — How many jobs to be submited simultaneously.

The new process class

__init_subclass__()

Do the requirements inferring since we need them to build up theprocess relationship

run()

Init all other properties and jobs

gc()

GC process for the process to save memory after it's done

log(level, msg, *args, logger=<LoggerAdapter pipen.core (WARNING)>)

Log message for the process

level(int | str) — The log level of the recordmsg(str) — The message to log*args— The arguments to format the messagelogger(LoggerAdapter, optional) — The logging logger

biopipen.ns.tcr.Immunarch2VDJtools(*args, **kwds) → Proc

Convert immuarch format into VDJtools input formats.

This process converts the immunarch object to the

VDJtools input files,

in order to perform the VJ gene usage analysis by

VJUsage process.

This process will generally generate a tab-delimited file for each sample, with the following columns.

count: The number of reads for this clonotypefrequency: The frequency of this clonotypeCDR3nt: The nucleotide sequence of the CDR3 regionCDR3aa: The amino acid sequence of the CDR3 regionV: The V geneD: The D geneJ: The J gene

See also: https://vdjtools-doc.readthedocs.io/en/master/input.html#vdjtools-format.

This process has no environment variables.

cache— Should we detect whether the jobs are cached?desc— The description of the process. Will use the summary fromthe docstring by default.dirsig— When checking the signature for caching, whether should we walkthrough the content of the directory? This is sometimes time-consuming if the directory is big.envs— The arguments that are job-independent, useful for common optionsacross jobs.envs_depth— How deep to update the envs when subclassed.error_strategy— How to deal with the errors- - retry, ignore, halt

- - halt to halt the whole pipeline, no submitting new jobs

- - terminate to just terminate the job itself