biopipen.ns.scrna

Tools to analyze single-cell RNA

- SeuratLoading(Proc) — Seurat - Loading data
- SeuratPreparing(Proc) — Load, prepare and apply QC to data, using Seurat
- SeuratClustering(Proc) — Determine the clusters of cells without reference using the Seurat FindClusters procedure.
- SeuratSubClustering(Proc) — Find clusters of a subset of cells.
- SeuratClusterStats(Proc) — Statistics of the clustering.
- ModuleScoreCalculator(Proc) — Calculate the module scores for each cell
- CellsDistribution(Proc) — Distribution of cells (i.e. in a TCR clone) from different groups for each cluster
- SeuratMetadataMutater(Proc) — Mutate the metadata of the seurat object
- DimPlots(Proc) — Seurat - Dimensional reduction plots
- MarkersFinder(Proc) — Find markers between different groups of cells
- TopExpressingGenes(Proc) — Find the top expressing genes in each cluster
- ExprImputation(Proc) — This process imputes the dropout values in scRNA-seq data.
- SCImpute(Proc) — Impute the dropout values in scRNA-seq data.
- SeuratFilter(Proc) — Filtering cells from a seurat object
- SeuratSubset(Proc) — Subset a seurat object into multiple seurat objects
- SeuratSplit(Proc) — Split a seurat object into multiple seurat objects
- Subset10X(Proc) — Subset 10X data, mostly used for testing
- SeuratTo10X(Proc) — Write a Seurat object to 10X format
- ScFGSEA(Proc) — Gene set enrichment analysis for cells in different groups using fgsea
- CellTypeAnnotation(Proc) — Annotate the cell clusters. Currently, four ways are supported:
- SeuratMap2Ref(Proc) — Map the seurat object to reference
- RadarPlots(Proc) — Radar plots for cell proportion in different clusters.
- MetaMarkers(Proc) — Find markers between three or more groups of cells, using one-way ANOVA or Kruskal-Wallis test.
- Seurat2AnnData(Proc) — Convert seurat object to AnnData
- AnnData2Seurat(Proc) — Convert AnnData to seurat object
- ScSimulation(Proc) — Simulate single-cell data using splatter.
- CellCellCommunication(Proc) — Cell-cell communication inference
- CellCellCommunicationPlots(Proc) — Visualization for cell-cell communication inference.
- ScVelo(Proc) — Velocity analysis for single-cell RNA-seq data
- Slingshot(Proc) — Trajectory inference using Slingshot
- LoomTo10X(Proc) — Convert Loom file to 10X format
- PseudoBulkDEG(Proc) — Pseudo-bulk differential gene expression analysis
- CellSNPLite(Proc) — Genotyping bi-allelic SNPs on single cells using cellsnp-lite.
- MQuad(Proc) — Clonal substructure discovery using single cell mitochondrial variants with MQuad.
- MQuadMerge(Proc) — Merge multiple MQuad results for multiple samples.
- VireoSNP(Proc) — Demultiplexing of single-cell RNA-seq data using vireoSNP.

biopipen.ns.scrna.SeuratLoading(*args, **kwds) → Proc

Seurat - Loading data

Deprecated; superseded by SeuratPreparing.

- cache — Should we detect whether the jobs are cached?
- desc — The description of the process. Will use the summary from the docstring by default.
- dirsig — When checking the signature for caching, whether we should walk through the content of the directory. This is sometimes time-consuming if the directory is big.
- envs — The arguments that are job-independent, useful for common options across jobs.
- envs_depth — How deep to update the envs when subclassed.
- error_strategy — How to deal with the errors
    - retry, ignore, halt
    - halt to halt the whole pipeline, no submitting new jobs
    - terminate to just terminate the job itself
- export — When True, the results will be exported to <pipeline.outdir>. Defaults to None, meaning only end processes will export. You can set it to True/False to enable or disable exporting for processes.
- forks — How many jobs to run simultaneously?
- input — The keys for the input channel
- input_data — The input data (will be computed for dependent processes)
- lang — The language for the script to run. Should be the path to the interpreter if lang is not in $PATH.
- name — The name of the process. Will use the class name by default.
- nexts — Computed from requires to build the process relationships
- num_retries — How many times to retry the jobs once an error occurs
- order — The execution order for this process. The bigger the number is, the later the process will be executed. Default: 0. Note that the dependent processes will always be executed first. This doesn't work for start processes either, whose orders are determined by Pipen.set_starts()
- output — The output keys for the output channel (the data will be computed)
- output_data — The output data (to pass to the next processes)
- plugin_opts — Options for process-level plugins
- requires — The dependency processes
- scheduler — The scheduler to run the jobs
- scheduler_opts — The options for the scheduler
- script — The script template for the process
- submission_batch — How many jobs to be submitted simultaneously. The program entrance for some schedulers may take too much resources when submitting a job or checking the job status. So we may use a smaller number here to limit the simultaneous submissions.
- template — Define the template engine to use. This could be either a template engine or a dict with key engine indicating the template engine and the rest the arguments passed to the constructor of the pipen.template.Template object. The template engine could be either the name of the engine, currently jinja2 and liquidpy are supported, or a subclass of pipen.template.Template. You can subclass pipen.template.Template to use your own template engine.

metafile — The metadata of the samples. A tab-delimited file. Two columns are required:
- Sample to specify the sample names.
- RNAData to assign the path of the data to the samples. The path will be read by Read10X() from Seurat.

rdsfile — The RDS file with a list of Seurat objects

qc — The QC filter for each sample. This will be passed to subset(obj, subset=<qc>). For example: nFeature_RNA > 200 & nFeature_RNA < 2500 & percent.mt < 5

- __init_subclass__() — Do the requirements inferring since we need them to build up the process relationship
- from_proc(proc, name, desc, envs, envs_depth, cache, export, error_strategy, num_retries, forks, input_data, order, plugin_opts, requires, scheduler, scheduler_opts, submission_batch)(Type) — Create a subclass of Proc using another Proc subclass or Proc itself
- gc() — GC process for the process to save memory after it's done
- log(level, msg, *args, logger) — Log message for the process
- run() — Init all other properties and jobs

pipen.proc.ProcMeta(name, bases, namespace, **kwargs)

Meta class for Proc

- __call__(cls, *args, **kwds)(Proc) — Make sure Proc subclasses are singletons
- __instancecheck__(cls, instance) — Override for isinstance(instance, cls).
- __repr__(cls)(str) — Representation for the Proc subclasses
- __subclasscheck__(cls, subclass) — Override for issubclass(subclass, cls).
- register(cls, subclass) — Register a virtual subclass of an ABC.

register(cls, subclass)

Register a virtual subclass of an ABC.

Returns the subclass, to allow usage as a class decorator.

__instancecheck__(cls, instance)

Override for isinstance(instance, cls).

__subclasscheck__(cls, subclass)

Override for issubclass(subclass, cls).

__repr__(cls) → str

Representation for the Proc subclasses

__call__(cls, *args, **kwds)

Make sure Proc subclasses are singletons

- *args (Any) and **kwds (Any) — Arguments for the constructor

Returns the Proc instance.
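The singleton behaviour described for `__call__` can be illustrated with a small, self-contained metaclass. This is only a sketch of the general technique, not pipen's actual implementation; `SingletonMeta`, `DemoProc` and `OtherProc` are hypothetical names introduced for the example.

```python
class SingletonMeta(type):
    """Hypothetical metaclass: one instance per class (not pipen's real code)."""

    _instances = {}

    def __call__(cls, *args, **kwds):
        # Create the instance on the first call only; later calls reuse it.
        if cls not in SingletonMeta._instances:
            SingletonMeta._instances[cls] = super().__call__(*args, **kwds)
        return SingletonMeta._instances[cls]

    def __repr__(cls):
        # Representation of the class itself, analogous to ProcMeta.__repr__.
        return f"<Proc:{cls.__name__}>"


class DemoProc(metaclass=SingletonMeta):
    """Hypothetical stand-in for a Proc subclass."""


class OtherProc(DemoProc):
    """A subclass gets its own single instance."""


first = DemoProc()
second = DemoProc()
```

Here `first` and `second` refer to the same object, while `OtherProc()` yields a separate instance that is likewise created only once.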

from_proc(proc, name=None, desc=None, envs=None, envs_depth=None, cache=None, export=None, error_strategy=None, num_retries=None, forks=None, input_data=None, order=None, plugin_opts=None, requires=None, scheduler=None, scheduler_opts=None, submission_batch=None)

Create a subclass of Proc using another Proc subclass or Proc itself

- proc (Type) — The Proc subclass
- name (str, optional) — The new name of the process
- desc (str, optional) — The new description of the process
- envs (Mapping, optional) — The arguments of the process, will overwrite the parent's. The items that are not specified will be inherited.
- envs_depth (int, optional) — How deep to update the envs when subclassed.
- cache (bool, optional) — Whether we should check the cache for the jobs
- export (bool, optional) — When True, the results will be exported to <pipeline.outdir>. Defaults to None, meaning only end processes will export. You can set it to True/False to enable or disable exporting for processes.
- error_strategy (str, optional) — How to deal with the errors
    - retry, ignore, halt
    - halt to halt the whole pipeline, no submitting new jobs
    - terminate to just terminate the job itself
- num_retries (int, optional) — How many times to retry the jobs once an error occurs
- forks (int, optional) — New forks for the new process
- input_data (Any, optional) — The input data for the process. Only used when this process is a start process.
- order (int, optional) — The order to execute the new process
- plugin_opts (Mapping, optional) — The new plugin options, unspecified items will be inherited.
- requires (Sequence, optional) — The required processes for the new process
- scheduler (str, optional) — The new scheduler to run the new process
- scheduler_opts (Mapping, optional) — The new scheduler options, unspecified items will be inherited.
- submission_batch (int, optional) — How many jobs to be submitted simultaneously.

Returns the new process class.

__init_subclass__()

Do the requirements inferring since we need them to build up the process relationship

run()

Init all other properties and jobs

gc()

GC process for the process to save memory after it's done

log(level, msg, *args, logger=<LoggerAdapter pipen.core (WARNING)>)

Log message for the process

- level (int | str) — The log level of the record
- msg (str) — The message to log
- *args — The arguments to format the message
- logger (LoggerAdapter, optional) — The logging logger

biopipen.ns.scrna.SeuratPreparing(*args, **kwds) → Proc

Load, prepare and apply QC to data, using Seurat

This process will:

- Prepare the seurat object
- Apply QC to the data
- Integrate the data from different samples

See also

- https://satijalab.org/seurat/articles/pbmc3k_tutorial.html#standard-pre-processing-workflow-1
- https://satijalab.org/seurat/articles/integration_introduction

This process will read the scRNA-seq data, based on the information provided by SampleInfo, specifically, the paths specified by the RNAData column.

Those paths should be either paths to directories containing matrix.mtx, barcodes.tsv and features.tsv files that can be loaded by Seurat::Read10X(), or paths of loom files that can be loaded by SeuratDisk::LoadLoom(), or paths to h5 files that can be loaded by Seurat::Read10X_h5().

Each sample will be loaded individually and then merged into one Seurat object, after which QC is performed.

In order to perform QC, some additional columns are added to the meta data of the Seurat object. They are:

- percent.mt: The percentage of mitochondrial genes.
- percent.ribo: The percentage of ribosomal genes.
- percent.hb: The percentage of hemoglobin genes.
- percent.plat: The percentage of platelet genes.

For integration, two routes are available:

- Performing integration on datasets normalized with SCTransform
- Using NormalizeData and FindIntegrationAnchors

/// Note

When using SCTransform, the default Assay will be set to SCT in output, rather than RNA.

If you are using cca or rpca integration, the default assay will be integrated.

///

/// Note

From biopipen v0.23.0, this requires Seurat v5.0.0 or higher.

///

- cache — Should we detect whether the jobs are cached?
- desc — The description of the process. Will use the summary from the docstring by default.
- dirsig — When checking the signature for caching, whether we should walk through the content of the directory. This is sometimes time-consuming if the directory is big.
- envs — The arguments that are job-independent, useful for common options across jobs.
- envs_depth — How deep to update the envs when subclassed.
- error_strategy — How to deal with the errors
    - retry, ignore, halt
    - halt to halt the whole pipeline, no submitting new jobs
    - terminate to just terminate the job itself
- export — When True, the results will be exported to <pipeline.outdir>. Defaults to None, meaning only end processes will export. You can set it to True/False to enable or disable exporting for processes.
- forks — How many jobs to run simultaneously?
- input — The keys for the input channel
- input_data — The input data (will be computed for dependent processes)
- lang — The language for the script to run. Should be the path to the interpreter if lang is not in $PATH.
- name — The name of the process. Will use the class name by default.
- nexts — Computed from requires to build the process relationships
- num_retries — How many times to retry the jobs once an error occurs
- order — The execution order for this process. The bigger the number is, the later the process will be executed. Default: 0. Note that the dependent processes will always be executed first. This doesn't work for start processes either, whose orders are determined by Pipen.set_starts()
- output — The output keys for the output channel (the data will be computed)
- output_data — The output data (to pass to the next processes)
- plugin_opts — Options for process-level plugins
- requires — The dependency processes
- scheduler — The scheduler to run the jobs
- scheduler_opts — The options for the scheduler
- script — The script template for the process
- submission_batch — How many jobs to be submitted simultaneously. The program entrance for some schedulers may take too much resources when submitting a job or checking the job status. So we may use a smaller number here to limit the simultaneous submissions.
- template — Define the template engine to use. This could be either a template engine or a dict with key engine indicating the template engine and the rest the arguments passed to the constructor of the pipen.template.Template object. The template engine could be either the name of the engine, currently jinja2 and liquidpy are supported, or a subclass of pipen.template.Template. You can subclass pipen.template.Template to use your own template engine.

metafile — The metadata of the samples. A tab-delimited file. Two columns are required:
- Sample to specify the sample names.
- RNAData to assign the path of the data to the samples. The path will be read by Read10X() from Seurat, or it can be the path to an h5 file that can be read by Read10X_h5() from Seurat. It can also be an RDS or qs2 file containing a Seurat object. Note that such an object must have a column named Sample in the meta.data to specify the sample names.
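As an illustration of the expected layout, the sketch below writes a small tab-delimited metafile with the two required columns. The sample names, paths, the extra Group column and the helper `write_metafile` are all hypothetical and only serve the example.

```python
import csv
import io

REQUIRED_COLUMNS = ("Sample", "RNAData")


def write_metafile(rows, handle):
    """Write sample rows as a tab-delimited table with a header line."""
    fieldnames = list(rows[0].keys())
    missing = [col for col in REQUIRED_COLUMNS if col not in fieldnames]
    if missing:
        raise ValueError(f"Missing required columns: {missing}")
    writer = csv.DictWriter(
        handle, fieldnames=fieldnames, delimiter="\t", lineterminator="\n"
    )
    writer.writeheader()
    writer.writerows(rows)


# Hypothetical samples: one 10X directory and one h5 file.
rows = [
    {"Sample": "S1", "RNAData": "/path/to/S1/filtered_feature_bc_matrix", "Group": "control"},
    {"Sample": "S2", "RNAData": "/path/to/S2/filtered_feature_bc_matrix.h5", "Group": "treated"},
]

buffer = io.StringIO()  # stands in for an open file such as samples.txt
write_metafile(rows, buffer)
metafile_text = buffer.getvalue()
```

Writing to a real file only requires replacing the `StringIO` buffer with `open(path, "w", newline="")`.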

outfile — The qs2 file with the Seurat object with all samples integrated. Note that the cell ids are prefixed with sample names.

DoubletFinder (ns) — Arguments to run DoubletFinder. See also https://demultiplexing-doublet-detecting-docs.readthedocs.io/en/latest/DoubletFinder.html.
- PCs (type=int): Number of PCs to use for 'doubletFinder' function.
- doublets (type=float): Number of expected doublets as a proportion of the pool size.
- pN (type=float): Number of doublets to simulate as a proportion of the pool size.
- ncores (type=int): Number of cores to use for DoubletFinder::paramSweep. Set to None to use envs.ncores. Since parallelization of the function usually exhausts memory, if a big envs.ncores does not work for DoubletFinder, set this to a smaller number.

FindVariableFeatures (ns) — Arguments for FindVariableFeatures(). object is specified internally, and "-" in the key will be replaced with ".".

IntegrateLayers (ns) — Arguments for IntegrateLayers(). object is specified internally, and "-" in the key will be replaced with ".". When use_sct is True, normalization-method defaults to SCT.
- method (choice): The method to use for integration.
    - CCAIntegration: Use Seurat::CCAIntegration.
    - CCA: Same as CCAIntegration.
    - cca: Same as CCAIntegration.
    - RPCAIntegration: Use Seurat::RPCAIntegration.
    - RPCA: Same as RPCAIntegration.
    - rpca: Same as RPCAIntegration.
    - HarmonyIntegration: Use Seurat::HarmonyIntegration.
    - Harmony: Same as HarmonyIntegration.
    - harmony: Same as HarmonyIntegration.
    - FastMNNIntegration: Use Seurat::FastMNNIntegration.
    - FastMNN: Same as FastMNNIntegration.
    - fastmnn: Same as FastMNNIntegration.
    - scVIIntegration: Use Seurat::scVIIntegration.
    - scVI: Same as scVIIntegration.
    - scvi: Same as scVIIntegration.
- For other arguments, see https://satijalab.org/seurat/reference/integratelayers
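The alias scheme for the integration method (full name, short name, lowercase short name) can be sketched as a small lookup. This is an illustrative helper, not the code biopipen uses; `resolve_integration_method` is a hypothetical name, and only the aliases listed above are accepted.

```python
CANONICAL_METHODS = (
    "CCAIntegration",
    "RPCAIntegration",
    "HarmonyIntegration",
    "FastMNNIntegration",
    "scVIIntegration",
)


def resolve_integration_method(name):
    """Map a listed alias (e.g. "cca", "CCA") to its canonical method name."""
    suffix = "Integration"
    for canonical in CANONICAL_METHODS:
        short = canonical[: -len(suffix)]  # e.g. "CCA", "scVI"
        if name in (canonical, short, short.lower()):
            return canonical
    raise ValueError(f"Unknown integration method: {name!r}")


resolved = [resolve_integration_method(alias) for alias in ("rpca", "scVI", "Harmony")]
```

Matching is deliberately strict: a spelling that is not in the documented list, such as "Scvi", raises an error instead of being guessed.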

NormalizeData (ns) — Arguments for NormalizeData(). object is specified internally, and "-" in the key will be replaced with ".".

RunPCA (ns) — Arguments for RunPCA(). object and features are specified internally, and "-" in the key will be replaced with ".".
- npcs (type=int): The number of PCs to compute. For each sample, npcs will be no larger than the number of columns - 1.
- For other arguments, see https://satijalab.org/seurat/reference/runpca

SCTransform (ns) — Arguments for SCTransform(). object is specified internally, and "-" in the key will be replaced with ".".
- return-only-var-genes: Whether to return only variable genes.
- min_cells: The minimum number of cells that a gene must be expressed in to be kept. A hidden argument of SCTransform to filter genes. If you try to keep all genes in the RNA assay, you can set min_cells to 0 and return-only-var-genes to False. See https://github.com/satijalab/seurat/issues/3598#issuecomment-715505537
- For other arguments, see https://satijalab.org/seurat/reference/sctransform
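The key convention used by these argument namespaces ("-" in a key becomes "." in the R argument name) can be sketched as a one-line transformation. The helper name `to_r_argument_names` is hypothetical; the option values follow the "keep all genes" setting described above.

```python
def to_r_argument_names(options):
    """Replace "-" with "." in top-level keys only; values are left untouched."""
    return {key.replace("-", "."): value for key, value in options.items()}


# Settings from the text for keeping all genes in the RNA assay.
sct_options = {"return-only-var-genes": False, "min_cells": 0}
r_args = to_r_argument_names(sct_options)
```

Note that keys written with underscores, such as min_cells, pass through unchanged; only hyphens are rewritten.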

ScaleData (ns) — Arguments for ScaleData(). object and features are specified internally, and "-" in the key will be replaced with ".".

cache (type=auto) — Whether to cache the information at different steps. If True, the seurat object will be cached in the job output directory, which will not be cleaned up when the job is rerun. The cached seurat object will be saved as a <signature>.<kind>.RDS file, where <signature> is the signature determined by the input and envs of the process. See https://github.com/satijalab/seurat/issues/7849, https://github.com/satijalab/seurat/issues/5358 and https://github.com/satijalab/seurat/issues/6748 for more details, also about reproducibility issues. To not use the cached seurat object, you can either set cache to False or delete the cached file at <signature>.RDS in the cache directory.

cell_qc — Filter expression to filter cells, using tidyrseurat::filter(). It can also be a dictionary of expressions, where the names of the list are sample names. You can have a default expression in the list with the name "DEFAULT" for the samples that are not listed. Including the columns added above, all available QC keys include nFeature_RNA, nCount_RNA, percent.mt, percent.ribo, percent.hb, and percent.plat.

/// Tip | Example

For example:

    [SeuratPreparing.envs]
    cell_qc = "nFeature_RNA > 200 & percent.mt < 5"

will keep cells with more than 200 genes and less than 5% mitochondrial genes.

///

doublet_detector (choice) — The doublet detector to use.
- none: Do not use any doublet detector.
- DoubletFinder: Use DoubletFinder to detect doublets.
- doubletfinder: Same as DoubletFinder.
- scDblFinder: Use scDblFinder to detect doublets.
- scdblfinder: Same as scDblFinder.
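Returning to cell_qc: when it is given as a dictionary keyed by sample name, the lookup with a "DEFAULT" fallback could work roughly as follows. This is a sketch of the described behaviour under the assumption that a sample with neither its own entry nor a DEFAULT entry gets no filter; the helper `cell_qc_for_sample` and the sample names are hypothetical.

```python
def cell_qc_for_sample(cell_qc, sample):
    """Return the filter expression that applies to one sample, or None."""
    if cell_qc is None or isinstance(cell_qc, str):
        # A single expression (or no filter) applies to every sample.
        return cell_qc
    if sample in cell_qc:
        return cell_qc[sample]
    # Assumption: samples that are not listed fall back to "DEFAULT" if present.
    return cell_qc.get("DEFAULT")


per_sample_qc = {
    "S1": "nFeature_RNA > 500 & percent.mt < 10",
    "DEFAULT": "nFeature_RNA > 200 & percent.mt < 5",
}
s1_expr = cell_qc_for_sample(per_sample_qc, "S1")
s2_expr = cell_qc_for_sample(per_sample_qc, "S2")
```

With this configuration S1 uses its own thresholds, while S2 and any other unlisted sample use the DEFAULT expression.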

gene_qc (ns) — Filter genes. gene_qc is applied after cell_qc.
- min_cells: The minimum number of cells that a gene must be expressed in to be kept.
- excludes: The genes to exclude. Multiple genes can be specified by comma separated values, or as a list.

/// Tip | Example

    [SeuratPreparing.envs]
    gene_qc = { min_cells = 3 }

will keep genes that are expressed in at least 3 cells.

///
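The two gene_qc options can be pictured with a plain-Python filter over per-gene cell counts. This is only a sketch of the described semantics, assuming excludes holds exact gene names; `parse_excludes`, `filter_genes` and the counts are hypothetical.

```python
def parse_excludes(excludes):
    """Accept a comma-separated string or a list of gene names."""
    if excludes is None:
        return set()
    if isinstance(excludes, str):
        return {gene.strip() for gene in excludes.split(",") if gene.strip()}
    return set(excludes)


def filter_genes(cells_per_gene, min_cells=0, excludes=None):
    """Keep genes expressed in at least min_cells cells and not excluded."""
    excluded = parse_excludes(excludes)
    return [
        gene
        for gene, n_cells in cells_per_gene.items()
        if n_cells >= min_cells and gene not in excluded
    ]


# Hypothetical counts: number of cells in which each gene is detected.
cells_per_gene = {"CD3E": 120, "MALAT1": 900, "GENE_X": 2}
kept = filter_genes(cells_per_gene, min_cells=3, excludes="MALAT1, MT-CO1")
```

In this toy input, GENE_X is dropped by min_cells and MALAT1 by excludes, so only CD3E is kept.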

min_cells (type=int) — The minimum number of cells that a gene must be expressed in to be kept. This is used in Seurat::CreateSeuratObject(). Further QC (envs.cell_qc, envs.gene_qc) will be performed after this. It doesn't work when data is loaded from loom files or RDS/qs2 files.

min_features (type=int) — The minimum number of features that a cell must express to be kept. This is used in Seurat::CreateSeuratObject(). Further QC (envs.cell_qc, envs.gene_qc) will be performed after this. It doesn't work when data is loaded from loom files or RDS/qs2 files.

mutaters (type=json) — The mutaters to mutate the metadata of the cells. These new columns will be added to the metadata of the Seurat object and will be saved in the output file.

ncores (type=int) — Number of cores to use. Used in future::plan(strategy = "multicore", workers = <ncores>) to parallelize some Seurat procedures.

no_integration (flag) — Whether to skip integration or not.

qc_plots (type=json) — The plots for QC metrics. It should be a json (or python dict) with the keys as the names of the plots and the values also as dicts with the following keys:
- kind: The kind of QC. Either gene or cell (default).
- devpars: The device parameters for the plot. A dict with res, height, and width.
- more_formats: The formats to save the plots other than png.
- save_code: Whether to save the code to reproduce the plot.
- other arguments passed to biopipen.utils::VizSeuratCellQC when kind is cell or biopipen.utils::VizSeuratGeneQC when kind is gene.
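A qc_plots value could look like the Python dict below. The plot names and all numeric values are hypothetical placeholders, and `check_qc_plots` is an illustrative validator written for this example, not part of biopipen.

```python
qc_plots = {
    "Cell QC": {
        "kind": "cell",
        "devpars": {"res": 100, "height": 600, "width": 800},
        "more_formats": ["pdf"],
        "save_code": False,
    },
    # "kind" is omitted here, so the documented default ("cell") applies.
    "Cell QC (default kind)": {},
    "Gene QC": {"kind": "gene"},
}

ALLOWED_KINDS = {"cell", "gene"}
DEVPARS_KEYS = {"res", "height", "width"}


def check_qc_plots(plots):
    """Return the effective kind of each plot, rejecting malformed specs."""
    kinds = {}
    for name, spec in plots.items():
        kind = spec.get("kind", "cell")
        if kind not in ALLOWED_KINDS:
            raise ValueError(f"{name}: kind must be 'cell' or 'gene'")
        unknown = set(spec.get("devpars", {})) - DEVPARS_KEYS
        if unknown:
            raise ValueError(f"{name}: unknown devpars {sorted(unknown)}")
        kinds[name] = kind
    return kinds


plot_kinds = check_qc_plots(qc_plots)
```

Any further keys in a plot spec would be forwarded to the visualization function for that kind, so the validator leaves them alone.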

scDblFinder (ns) — Arguments to run scDblFinder.
- dbr (type=float): The expected doublet rate.
- ncores (type=int): Number of cores to use for scDblFinder. Set to None to use envs.ncores.
- For other arguments, see https://rdrr.io/bioc/scDblFinder/man/scDblFinder.html.

use_sct (flag) — Whether to use the SCTransform routine to integrate samples or not. Before the following procedures, the RNA layer will be split by samples.

If False, the procedures will be performed in order; see https://satijalab.org/seurat/articles/seurat5_integration#layers-in-the-seurat-v5-object and https://satijalab.org/seurat/articles/pbmc3k_tutorial.html

If True, the following procedures will be performed in order:
- SCTransform.

- r-bracer — check: {{proc.lang}} <(echo "library(bracer)")
- r-future — check: {{proc.lang}} <(echo "library(future)")
- r-seurat — check: {{proc.lang}} <(echo "library(Seurat)")

- __init_subclass__() — Do the requirements inferring since we need them to build up the process relationship
- from_proc(proc, name, desc, envs, envs_depth, cache, export, error_strategy, num_retries, forks, input_data, order, plugin_opts, requires, scheduler, scheduler_opts, submission_batch)(Type) — Create a subclass of Proc using another Proc subclass or Proc itself
- gc() — GC process for the process to save memory after it's done
- log(level, msg, *args, logger) — Log message for the process
- run() — Init all other properties and jobs

pipen.proc.ProcMeta(name, bases, namespace, **kwargs)

Meta class for Proc

- __call__(cls, *args, **kwds)(Proc) — Make sure Proc subclasses are singletons
- __instancecheck__(cls, instance) — Override for isinstance(instance, cls).
- __repr__(cls)(str) — Representation for the Proc subclasses
- __subclasscheck__(cls, subclass) — Override for issubclass(subclass, cls).
- register(cls, subclass) — Register a virtual subclass of an ABC.

register(cls, subclass)

Register a virtual subclass of an ABC.

Returns the subclass, to allow usage as a class decorator.

__instancecheck__(cls, instance)

Override for isinstance(instance, cls).

__subclasscheck__(cls, subclass)

Override for issubclass(subclass, cls).

__repr__(cls) → str

Representation for the Proc subclasses

__call__(cls, *args, **kwds)

Make sure Proc subclasses are singletons

- *args (Any) and **kwds (Any) — Arguments for the constructor

Returns the Proc instance.

from_proc(proc, name=None, desc=None, envs=None, envs_depth=None, cache=None, export=None, error_strategy=None, num_retries=None, forks=None, input_data=None, order=None, plugin_opts=None, requires=None, scheduler=None, scheduler_opts=None, submission_batch=None)

Create a subclass of Proc using another Proc subclass or Proc itself

- proc (Type) — The Proc subclass
- name (str, optional) — The new name of the process
- desc (str, optional) — The new description of the process
- envs (Mapping, optional) — The arguments of the process, will overwrite the parent's. The items that are not specified will be inherited.
- envs_depth (int, optional) — How deep to update the envs when subclassed.
- cache (bool, optional) — Whether we should check the cache for the jobs
- export (bool, optional) — When True, the results will be exported to <pipeline.outdir>. Defaults to None, meaning only end processes will export. You can set it to True/False to enable or disable exporting for processes.
- error_strategy (str, optional) — How to deal with the errors
    - retry, ignore, halt
    - halt to halt the whole pipeline, no submitting new jobs
    - terminate to just terminate the job itself
- num_retries (int, optional) — How many times to retry the jobs once an error occurs
- forks (int, optional) — New forks for the new process
- input_data (Any, optional) — The input data for the process. Only used when this process is a start process.
- order (int, optional) — The order to execute the new process
- plugin_opts (Mapping, optional) — The new plugin options, unspecified items will be inherited.
- requires (Sequence, optional) — The required processes for the new process
- scheduler (str, optional) — The new scheduler to run the new process
- scheduler_opts (Mapping, optional) — The new scheduler options, unspecified items will be inherited.
- submission_batch (int, optional) — How many jobs to be submitted simultaneously.

Returns the new process class.

__init_subclass__()

Do the requirements inferring since we need them to build up the process relationship

run()

Init all other properties and jobs

gc()

GC process for the process to save memory after it's done

log(level, msg, *args, logger=<LoggerAdapter pipen.core (WARNING)>)

Log message for the process

- level (int | str) — The log level of the record
- msg (str) — The message to log
- *args — The arguments to format the message
- logger (LoggerAdapter, optional) — The logging logger

biopipen.ns.scrna.SeuratClustering(*args, **kwds) → Proc

Determine the clusters of cells without reference using the Seurat FindClusters procedure.

- cache — Should we detect whether the jobs are cached?
- desc — The description of the process. Will use the summary from the docstring by default.
- dirsig — When checking the signature for caching, whether we should walk through the content of the directory. This is sometimes time-consuming if the directory is big.
- envs — The arguments that are job-independent, useful for common options across jobs.
- envs_depth — How deep to update the envs when subclassed.
- error_strategy — How to deal with the errors
    - retry, ignore, halt
    - halt to halt the whole pipeline, no submitting new jobs
    - terminate to just terminate the job itself
- export — When True, the results will be exported to <pipeline.outdir>. Defaults to None, meaning only end processes will export. You can set it to True/False to enable or disable exporting for processes.
- forks — How many jobs to run simultaneously?
- input — The keys for the input channel
- input_data — The input data (will be computed for dependent processes)
- lang — The language for the script to run. Should be the path to the interpreter if lang is not in $PATH.
- name — The name of the process. Will use the class name by default.
- nexts — Computed from requires to build the process relationships
- num_retries — How many times to retry the jobs once an error occurs
- order — The execution order for this process. The bigger the number is, the later the process will be executed. Default: 0. Note that the dependent processes will always be executed first. This doesn't work for start processes either, whose orders are determined by Pipen.set_starts()
- output — The output keys for the output channel (the data will be computed)
- output_data — The output data (to pass to the next processes)
- plugin_opts — Options for process-level plugins
- requires — The dependency processes
- scheduler — The scheduler to run the jobs
- scheduler_opts — The options for the scheduler
- script — The script template for the process
- submission_batch — How many jobs to be submitted simultaneously. The program entrance for some schedulers may take too much resources when submitting a job or checking the job status. So we may use a smaller number here to limit the simultaneous submissions.
- template — Define the template engine to use. This could be either a template engine or a dict with key engine indicating the template engine and the rest the arguments passed to the constructor of the pipen.template.Template object. The template engine could be either the name of the engine, currently jinja2 and liquidpy are supported, or a subclass of pipen.template.Template. You can subclass pipen.template.Template to use your own template engine.

srtobj — The seurat object loaded by SeuratPreparing

outfile — The seurat object with cluster information at seurat_clusters or the name specified by envs.ident

FindClusters (ns) — Arguments for FindClusters(). object is specified internally, and "-" in the key will be replaced with ".". The cluster labels will be saved in cluster names and prefixed with "c". The first cluster will be "c1", instead of "c0".
- resolution (type=auto): The resolution of the clustering. You can have multiple resolutions as a list or as a string separated by comma. Ranges are also supported, for example: 0.1:0.5:0.1 will generate 0.1, 0.2, 0.3, 0.4, 0.5. The step can be omitted, defaulting to 0.1. The results will be saved in <ident>_<resolution>. The final resolution will be used to define the clusters at <ident>.
- For other arguments, see https://satijalab.org/seurat/reference/findclusters
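The resolution syntax (single values, comma-separated strings, lists, and start:stop:step ranges) can be sketched as a small parser. This is an illustrative reading of the description, assuming the range end is inclusive and values are rounded to avoid floating-point noise; `expand_resolutions` is a hypothetical helper, not biopipen's code.

```python
def expand_resolutions(spec):
    """Expand a resolution spec into a flat list of floats."""
    if isinstance(spec, (int, float)):
        return [float(spec)]
    if isinstance(spec, str):
        parts = [part.strip() for part in spec.split(",") if part.strip()]
    else:
        parts = list(spec)

    resolutions = []
    for part in parts:
        if isinstance(part, str) and ":" in part:
            fields = [float(field) for field in part.split(":")]
            start, stop = fields[0], fields[1]
            step = fields[2] if len(fields) > 2 else 0.1  # documented default step
            count = int(round((stop - start) / step))
            # Round each value so that 0.1 + 2 * 0.1 is reported as 0.3.
            resolutions.extend(round(start + i * step, 10) for i in range(count + 1))
        else:
            resolutions.append(float(part))
    return resolutions


expanded = expand_resolutions("0.1:0.5:0.1")
final_resolution = expanded[-1]  # the last one defines the clusters at <ident>
```

Under these assumptions, the last element of the expanded list is the resolution whose clusters end up in the <ident> column, while each resolution also gets its own <ident>_<resolution> column.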

FindNeighbors (ns) — Arguments for FindNeighbors(). object is specified internally, and "-" in the key will be replaced with ".".
- reduction: The reduction to use. If not provided, sobj@misc$integrated_new_reduction will be used.
- For other arguments, see https://satijalab.org/seurat/reference/findneighbors

RunPCA (ns) — Arguments for RunPCA().

RunUMAP (ns) — Arguments for RunUMAP(). object is specified internally, and "-" in the key will be replaced with ".". dims=N will be expanded to dims=1:N; the maximal value of N will be the minimum of N and the number of columns - 1 for each sample. You can also specify features instead of dims to use specific features for UMAP. It can be a list with the following fields: order (the order of the markers to use for UMAP, e.g. "desc(abs(avg_log2FC))") and n (the number of total features to use for UMAP, e.g. 30). If features is a list, it will run biopipen.utils::RunSeuratDEAnalysis to get the markers for each group, and then select the top n/ngroups features for each group based on the order field. If features is a numeric value, it will be treated as the n field in the list above, with the default order being "desc(abs(avg_log2FC))".
- dims (type=int): The number of PCs to use
- reduction: The reduction to use for UMAP. If not provided, sobj@misc$integrated_new_reduction will be used.
- For other arguments, see https://satijalab.org/seurat/reference/runumap
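The dims expansion described for RunUMAP (dims=N becomes 1:N, capped at the number of columns minus one) can be written out as a short sketch. `expand_dims` is a hypothetical helper, and the Python list stands in for the R sequence 1:N.

```python
def expand_dims(n, n_columns):
    """Return 1..N as a list, with N capped at n_columns - 1."""
    upper = min(n, n_columns - 1)
    return list(range(1, upper + 1))


dims_large_sample = expand_dims(30, n_columns=1000)  # plenty of cells: 1..30
dims_small_sample = expand_dims(30, n_columns=20)    # only 20 cells: 1..19
```

The cap only matters for very small samples; with more than N columns the requested number of PCs is used as given.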

cache (type=auto) — Whether to cache the information at different steps. If True, the seurat object will be cached in the job output directory, which will not be cleaned up when the job is rerun. Set to False to not cache the results.

ident — The name in the metadata to save the cluster labels. A shortcut for envs["FindClusters"]["cluster.name"].

ncores (type=int;order=-100) — Number of cores to use. Used in future::plan(strategy = "multicore", workers = <ncores>) to parallelize some Seurat procedures. See also: https://satijalab.org/seurat/articles/future_vignette.html

- r-dplyr — check: {{proc.lang}} <(echo "library(dplyr)")
- r-seurat — check: {{proc.lang}} <(echo "library(Seurat)")
- r-tidyr — check: {{proc.lang}} <(echo "library(tidyr)")

- __init_subclass__() — Do the requirements inferring since we need them to build up the process relationship
- from_proc(proc, name, desc, envs, envs_depth, cache, export, error_strategy, num_retries, forks, input_data, order, plugin_opts, requires, scheduler, scheduler_opts, submission_batch)(Type) — Create a subclass of Proc using another Proc subclass or Proc itself
- gc() — GC process for the process to save memory after it's done
- log(level, msg, *args, logger) — Log message for the process
- run() — Init all other properties and jobs

pipen.proc.ProcMeta(name, bases, namespace, **kwargs)

Meta class for Proc

- `__call__(cls, *args, **kwds)` (Proc) — Make sure Proc subclasses are singletons
- `__instancecheck__(cls, instance)` — Override for isinstance(instance, cls).
- `__repr__(cls)` (str) — Representation for the Proc subclasses
- `__subclasscheck__(cls, subclass)` — Override for issubclass(subclass, cls).
- `register(cls, subclass)` — Register a virtual subclass of an ABC.

register(cls, subclass)

Register a virtual subclass of an ABC.

Returns the subclass, to allow usage as a class decorator.

__instancecheck__(cls, instance)

Override for isinstance(instance, cls).

__subclasscheck__(cls, subclass)

Override for issubclass(subclass, cls).

__repr__(cls) → str

Representation for the Proc subclasses

__call__(cls, *args, **kwds)

Make sure Proc subclasses are singletons

- `*args` (Any) and `**kwds` (Any) — Arguments for the constructor

Returns the Proc instance.
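The singleton behaviour described for `__call__` can be sketched with a generic metaclass. This is a minimal illustration of the technique, not pipen's actual implementation; `SingletonMeta`, `DemoProc`, `OtherProc` and the `repr` format are all hypothetical.

```python
class SingletonMeta(type):
    """Hypothetical metaclass: each class using it has at most one instance."""

    _instances: dict = {}

    def __call__(cls, *args, **kwds):
        # Create the instance on the first call only; later calls reuse it.
        if cls not in SingletonMeta._instances:
            SingletonMeta._instances[cls] = super().__call__(*args, **kwds)
        return SingletonMeta._instances[cls]

    def __repr__(cls) -> str:
        # Representation of the class itself (format chosen for this sketch).
        return f"<Proc:{cls.__name__}>"


class DemoProc(metaclass=SingletonMeta):
    pass


class OtherProc(DemoProc):
    pass


print(DemoProc() is DemoProc())
print(OtherProc() is DemoProc())
```

Each class, including each subclass, gets its own single instance, because the cache is keyed by the class object.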

from_proc(proc, name=None, desc=None, envs=None, envs_depth=None, cache=None, export=None, error_strategy=None, num_retries=None, forks=None, input_data=None, order=None, plugin_opts=None, requires=None, scheduler=None, scheduler_opts=None, submission_batch=None)

Create a subclass of Proc using another Proc subclass or Proc itself

- `proc` (Type) — The Proc subclass
- `name` (str, optional) — The new name of the process
- `desc` (str, optional) — The new description of the process
- `envs` (Mapping, optional) — The arguments of the process, which will overwrite the parent's. Items that are not specified will be inherited
- `envs_depth` (int, optional) — How deep to update the envs when subclassed.
- `cache` (bool, optional) — Whether we should check the cache for the jobs
- `export` (bool, optional) — When True, the results will be exported to `<pipeline.outdir>`. Defaults to None, meaning only end processes will export. You can set it to True/False to enable or disable exporting for processes
- `error_strategy` (str, optional) — How to deal with the errors
    - retry, ignore, halt
    - halt to halt the whole pipeline, no submitting new jobs
    - terminate to just terminate the job itself
- `num_retries` (int, optional) — How many times to retry the jobs once an error occurs
- `forks` (int, optional) — New forks for the new process
- `input_data` (Any, optional) — The input data for the process. Only when this process is a start process
- `order` (int, optional) — The order to execute the new process
- `plugin_opts` (Mapping, optional) — The new plugin options, unspecified items will be inherited.
- `requires` (Sequence, optional) — The required processes for the new process
- `scheduler` (str, optional) — The new scheduler to run the new process
- `scheduler_opts` (Mapping, optional) — The new scheduler options, unspecified items will be inherited.
- `submission_batch` (int, optional) — How many jobs to be submitted simultaneously.

The new process class

__init_subclass__()

Do the requirements inferring since we need them to build up the process relationship

run()

Init all other properties and jobs

gc()

GC process for the process to save memory after it's done

log(level, msg, *args, logger=<LoggerAdapter pipen.core (WARNING)>)

Log message for the process

- `level` (int | str) — The log level of the record
- `msg` (str) — The message to log
- `*args` — The arguments to format the message
- `logger` (LoggerAdapter, optional) — The logging logger

biopipen.ns.scrna.SeuratSubClustering(*args, **kwds) → Proc

Find clusters of a subset of cells.

Unlike [Seurat::FindSubCluster], which only finds subclusters of a single cluster, this process performs the whole clustering procedure on the subset of cells. One can use metadata to specify the subset of cells to perform clustering on.

For the subset of cells, the reductions are re-computed and the clustering is then performed on that subset. The reduction will be saved in `object@reductions$<casename>.<reduction>` of the original object, and the clustering will be saved in the metadata of the original object using the casename as the column name.

- `cache` — Should we detect whether the jobs are cached?
- `desc` — The description of the process. Will use the summary from the docstring by default.
- `dirsig` — When checking the signature for caching, whether we should walk through the content of the directory. This is sometimes time-consuming if the directory is big.
- `envs` — The arguments that are job-independent, useful for common options across jobs.
- `envs_depth` — How deep to update the envs when subclassed.
- `error_strategy` — How to deal with the errors
    - retry, ignore, halt
    - halt to halt the whole pipeline, no submitting new jobs
    - terminate to just terminate the job itself
- `export` — When True, the results will be exported to `<pipeline.outdir>`. Defaults to None, meaning only end processes will export. You can set it to True/False to enable or disable exporting for processes
- `forks` — How many jobs to run simultaneously?
- `input` — The keys for the input channel
- `input_data` — The input data (will be computed for dependent processes)
- `lang` — The language for the script to run. Should be the path to the interpreter if `lang` is not in `$PATH`.
- `name` — The name of the process. Will use the class name by default.
- `nexts` — Computed from `requires` to build the process relationships
- `num_retries` — How many times to retry the jobs once an error occurs
- `order` — The execution order for this process. The bigger the number is, the later the process will be executed. Default: 0. Note that the dependent processes will always be executed first. This doesn't work for start processes either, whose orders are determined by `Pipen.set_starts()`
- `output` — The output keys for the output channel (the data will be computed)
- `output_data` — The output data (to pass to the next processes)
- `plugin_opts` — Options for process-level plugins
- `requires` — The dependency processes
- `scheduler` — The scheduler to run the jobs
- `scheduler_opts` — The options for the scheduler
- `script` — The script template for the process
- `submission_batch` — How many jobs to be submitted simultaneously. The program entrance for some schedulers may take too many resources when submitting a job or checking the job status, so a smaller number can be used here to limit the simultaneous submissions.
- `template` — Define the template engine to use. This could be either a template engine or a dict with key `engine` indicating the template engine and the rest the arguments passed to the constructor of the `pipen.template.Template` object. The template engine could be either the name of the engine (currently jinja2 and liquidpy are supported) or a subclass of `pipen.template.Template`. You can subclass `pipen.template.Template` to use your own template engine.

srtobj— The seurat object in RDS or qs/qs2 format.

outfile— The seurat object with the subclustering information in qs/qs2 format.

- `FindClusters` (ns) — Arguments for `FindClusters()`. `object` is specified internally, and `-` in the key will be replaced with `.`. The cluster labels will be prefixed with "s". The first cluster will be "s1", instead of "s0".
    - resolution (type=auto): The resolution of the clustering. You can have multiple resolutions as a list or as a string separated by commas. Ranges are also supported, for example: `0.1:0.5:0.1` will generate `0.1, 0.2, 0.3, 0.4, 0.5`. The step can be omitted, defaulting to 0.1. The results will be saved in `<casename>_<resolution>`. The final resolution will be used to define the clusters at `<casename>`.
    - Other arguments: See https://satijalab.org/seurat/reference/findclusters
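The `resolution` syntax described above (a list, a comma-separated string, or `start:end:step` ranges with a default step of 0.1) can be sketched in Python as follows. This is only an illustration of the documented behaviour, not the code the process runs; `expand_resolutions` is a hypothetical helper, and `Decimal` is used here simply to avoid floating-point drift when stepping.

```python
from decimal import Decimal


def expand_resolutions(spec) -> list[float]:
    """Expand a resolution spec into a flat list of floats."""
    if isinstance(spec, (int, float)):
        spec = [spec]
    elif isinstance(spec, str):
        spec = [part.strip() for part in spec.split(",") if part.strip()]

    out: list[float] = []
    for item in spec:
        text = str(item)
        if ":" not in text:
            out.append(float(text))
            continue
        parts = text.split(":")
        start, end = Decimal(parts[0]), Decimal(parts[1])
        # The step may be omitted; it then defaults to 0.1.
        step = Decimal(parts[2]) if len(parts) > 2 else Decimal("0.1")
        if step <= 0:
            raise ValueError("step must be positive")
        value = start
        while value <= end:
            out.append(float(value))
            value += step
    return out


print(expand_resolutions("0.1:0.5:0.1"))
print(expand_resolutions("0.4,0.8:1.0"))
```

Under the rule stated above, the last element of the expanded list would be the resolution that defines the final clusters.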


- `FindNeighbors` (ns) — Arguments for `FindNeighbors()`. `object` is specified internally, and `-` in the key will be replaced with `.`.
    - reduction: The reduction to use. If not provided, `object@misc$integrated_new_reduction` will be used.
    - Other arguments: See https://satijalab.org/seurat/reference/findneighbors


- `RunPCA` (ns) — Arguments for `RunPCA()`. `object` is specified internally as the subset object, and `-` in the key will be replaced with `.`.
- `RunUMAP` (ns) — Arguments for `RunUMAP()`. `object` is specified internally as the subset object, and `-` in the key will be replaced with `.`. `dims=N` will be expanded to `dims=1:N`; the maximal value of `N` will be the minimum of `N` and the number of columns - 1 for each sample. You can also specify `features` instead of `dims` to use specific features for UMAP. It can be a list with the following fields: `order` (the order of the markers to use for UMAP, e.g. "desc(abs(avg_log2FC))") and `n` (the total number of features to use for UMAP, e.g. 30). If `features` is a list, it will run `biopipen.utils::RunSeuratDEAnalysis` to get the markers for each group, and then select the top `n`/`ngroups` features for each group based on the `order` field. If `features` is a numeric value, it will be treated as the `n` field in the list above, with the default `order` being "desc(abs(avg_log2FC))".
    - dims (type=int): The number of PCs to use
    - reduction: The reduction to use for UMAP. If not provided, `sobj@misc$integrated_new_reduction` will be used.
    - Other arguments: See https://satijalab.org/seurat/reference/runumap
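The feature selection described for `RunUMAP` (top `n`/`ngroups` markers per group, ranked by the `order` expression) could look roughly like the Python sketch below. Several things here are assumptions made for illustration: the marker table layout, the use of floor division for `n`/`ngroups`, the removal of duplicate genes, and the function name `select_umap_features`. The ranking is hard-coded to the default `desc(abs(avg_log2FC))`.

```python
def select_umap_features(markers: dict[str, list[dict]], n: int = 30) -> list[str]:
    """Pick the top n // ngroups markers of each group by |avg_log2FC|."""
    if not markers:
        raise ValueError("at least one group of markers is required")
    # Assumption: n is split evenly across groups using floor division.
    per_group = max(1, n // len(markers))
    selected: list[str] = []
    for rows in markers.values():
        # Equivalent of desc(abs(avg_log2FC)).
        ranked = sorted(rows, key=lambda r: abs(r["avg_log2FC"]), reverse=True)
        for row in ranked[:per_group]:
            # Assumption: a gene selected for one group is not repeated.
            if row["gene"] not in selected:
                selected.append(row["gene"])
    return selected


# Hypothetical marker tables for two groups.
demo_markers = {
    "g1": [
        {"gene": "A", "avg_log2FC": -2.0},
        {"gene": "B", "avg_log2FC": 1.0},
        {"gene": "C", "avg_log2FC": 0.5},
    ],
    "g2": [
        {"gene": "D", "avg_log2FC": 0.2},
        {"gene": "A", "avg_log2FC": 3.0},
        {"gene": "E", "avg_log2FC": -1.5},
    ],
}
print(select_umap_features(demo_markers, n=4))
```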

- `cache` (type=auto) — Whether to cache the results. If `True`, the seurat object will be cached in the job output directory, which will not be cleaned up when the job is rerun. Set to `False` to not cache the results.
- `cases` (type=json) — The cases to perform subclustering. Keys are the names of the cases and values are the dicts inherited from `envs` except `mutaters` and `cache`. If empty, a case with name `subcluster` will be created with default parameters. The case name will be passed to `biopipen.utils::SeuratSubCluster()` as `name`. It will be used as the prefix for the reduction name, keys and cluster names. For reduction keys, it will be `toupper(<name>)` + "PC_" and `toupper(<name>)` + "UMAP_". For cluster names, it will be `<name>` + "." + resolution. And the final cluster name will be `<name>`. Note that the `name` should be alphanumeric and anything other than alphanumeric will be removed.
- `mutaters` (type=json) — The mutaters to mutate the metadata to subset the cells. The mutaters will be applied in the order specified.
- `ncores` (type=int;order=-100) — Number of cores to use. Used in `future::plan(strategy = "multicore", workers = <ncores>)` to parallelize some Seurat procedures.
- `subset` — An expression to subset the cells, will be passed to `tidyseurat::filter()`.
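The naming rules given for `cases` can be summarized in a short Python sketch. It only restates the rules from the description (non-alphanumeric characters removed, upper-cased reduction keys, `<name>.<resolution>` cluster columns, labels starting at "s1"); `subcluster_names` and `relabel` are hypothetical helpers, not part of biopipen.

```python
import re


def subcluster_names(case: str, resolutions: list[float]) -> dict:
    """Derive the reduction keys and metadata columns for a case name."""
    # Anything other than alphanumeric characters is removed from the name.
    name = re.sub(r"[^A-Za-z0-9]", "", case)
    if not name:
        raise ValueError("the case name must contain alphanumeric characters")
    return {
        "name": name,
        "pca_key": f"{name.upper()}PC_",
        "umap_key": f"{name.upper()}UMAP_",
        # One column per resolution, following the `cases` description.
        "resolution_columns": [f"{name}.{res}" for res in resolutions],
        # The final clustering is stored under the bare name.
        "final_column": name,
    }


def relabel(zero_based_cluster: int) -> str:
    """Cluster labels are prefixed with "s" and start at "s1", not "s0"."""
    return f"s{zero_based_cluster + 1}"


print(subcluster_names("t-cell_sub", [0.4, 0.8]))
print(relabel(0))
```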

- `__init_subclass__()` — Do the requirements inferring since we need them to build up the process relationship
- `from_proc(proc, name, desc, envs, envs_depth, cache, export, error_strategy, num_retries, forks, input_data, order, plugin_opts, requires, scheduler, scheduler_opts, submission_batch)` (Type) — Create a subclass of Proc using another Proc subclass or Proc itself
- `gc()` — GC process for the process to save memory after it's done
- `log(level, msg, *args, logger)` — Log message for the process
- `run()` — Init all other properties and jobs

pipen.proc.ProcMeta(name, bases, namespace, **kwargs)

Meta class for Proc

- `__call__(cls, *args, **kwds)` (Proc) — Make sure Proc subclasses are singletons
- `__instancecheck__(cls, instance)` — Override for isinstance(instance, cls).
- `__repr__(cls)` (str) — Representation for the Proc subclasses
- `__subclasscheck__(cls, subclass)` — Override for issubclass(subclass, cls).
- `register(cls, subclass)` — Register a virtual subclass of an ABC.

register(cls, subclass)

Register a virtual subclass of an ABC.

Returns the subclass, to allow usage as a class decorator.

__instancecheck__(cls, instance)

Override for isinstance(instance, cls).

__subclasscheck__(cls, subclass)

Override for issubclass(subclass, cls).

__repr__(cls) → str

Representation for the Proc subclasses

__call__(cls, *args, **kwds)

Make sure Proc subclasses are singletons

- `*args` (Any) and `**kwds` (Any) — Arguments for the constructor

Returns the Proc instance.

from_proc(proc, name=None, desc=None, envs=None, envs_depth=None, cache=None, export=None, error_strategy=None, num_retries=None, forks=None, input_data=None, order=None, plugin_opts=None, requires=None, scheduler=None, scheduler_opts=None, submission_batch=None)

Create a subclass of Proc using another Proc subclass or Proc itself

- `proc` (Type) — The Proc subclass
- `name` (str, optional) — The new name of the process
- `desc` (str, optional) — The new description of the process
- `envs` (Mapping, optional) — The arguments of the process, which will overwrite the parent's. Items that are not specified will be inherited
- `envs_depth` (int, optional) — How deep to update the envs when subclassed.
- `cache` (bool, optional) — Whether we should check the cache for the jobs
- `export` (bool, optional) — When True, the results will be exported to `<pipeline.outdir>`. Defaults to None, meaning only end processes will export. You can set it to True/False to enable or disable exporting for processes
- `error_strategy` (str, optional) — How to deal with the errors
    - retry, ignore, halt
    - halt to halt the whole pipeline, no submitting new jobs
    - terminate to just terminate the job itself
- `num_retries` (int, optional) — How many times to retry the jobs once an error occurs
- `forks` (int, optional) — New forks for the new process
- `input_data` (Any, optional) — The input data for the process. Only when this process is a start process
- `order` (int, optional) — The order to execute the new process
- `plugin_opts` (Mapping, optional) — The new plugin options, unspecified items will be inherited.
- `requires` (Sequence, optional) — The required processes for the new process
- `scheduler` (str, optional) — The new scheduler to run the new process
- `scheduler_opts` (Mapping, optional) — The new scheduler options, unspecified items will be inherited.
- `submission_batch` (int, optional) — How many jobs to be submitted simultaneously.

The new process class

__init_subclass__()

Do the requirements inferring since we need them to build up the process relationship

run()

Init all other properties and jobs

gc()

GC process for the process to save memory after it's done

log(level, msg, *args, logger=<LoggerAdapter pipen.core (WARNING)>)

Log message for the process

- `level` (int | str) — The log level of the record
- `msg` (str) — The message to log
- `*args` — The arguments to format the message
- `logger` (LoggerAdapter, optional) — The logging logger

biopipen.ns.scrna.SeuratClusterStats(*args, **kwds) → Proc

Statistics of the clustering.

Including the number/fraction of cells in each cluster, the gene expression values and dimension reduction plots. It's also possible to perform stats on TCR clones/clusters or other metadata for each T-cell cluster.

- `cache` — Should we detect whether the jobs are cached?
- `desc` — The description of the process. Will use the summary from the docstring by default.
- `dirsig` — When checking the signature for caching, whether we should walk through the content of the directory. This is sometimes time-consuming if the directory is big.
- `envs` — The arguments that are job-independent, useful for common options across jobs.
- `envs_depth` — How deep to update the envs when subclassed.
- `error_strategy` — How to deal with the errors
    - retry, ignore, halt
    - halt to halt the whole pipeline, no submitting new jobs
    - terminate to just terminate the job itself
- `export` — When True, the results will be exported to `<pipeline.outdir>`. Defaults to None, meaning only end processes will export. You can set it to True/False to enable or disable exporting for processes
- `forks` — How many jobs to run simultaneously?
- `input` — The keys for the input channel
- `input_data` — The input data (will be computed for dependent processes)
- `lang` — The language for the script to run. Should be the path to the interpreter if `lang` is not in `$PATH`.
- `name` — The name of the process. Will use the class name by default.
- `nexts` — Computed from `requires` to build the process relationships
- `num_retries` — How many times to retry the jobs once an error occurs
- `order` — The execution order for this process. The bigger the number is, the later the process will be executed. Default: 0. Note that the dependent processes will always be executed first. This doesn't work for start processes either, whose orders are determined by `Pipen.set_starts()`
- `output` — The output keys for the output channel (the data will be computed)
- `output_data` — The output data (to pass to the next processes)
- `plugin_opts` — Options for process-level plugins
- `requires` — The dependency processes
- `scheduler` — The scheduler to run the jobs
- `scheduler_opts` — The options for the scheduler
- `script` — The script template for the process
- `submission_batch` — How many jobs to be submitted simultaneously. The program entrance for some schedulers may take too many resources when submitting a job or checking the job status, so a smaller number can be used here to limit the simultaneous submissions.
- `template` — Define the template engine to use. This could be either a template engine or a dict with key `engine` indicating the template engine and the rest the arguments passed to the constructor of the `pipen.template.Template` object. The template engine could be either the name of the engine (currently jinja2 and liquidpy are supported) or a subclass of `pipen.template.Template`. You can subclass `pipen.template.Template` to use your own template engine.

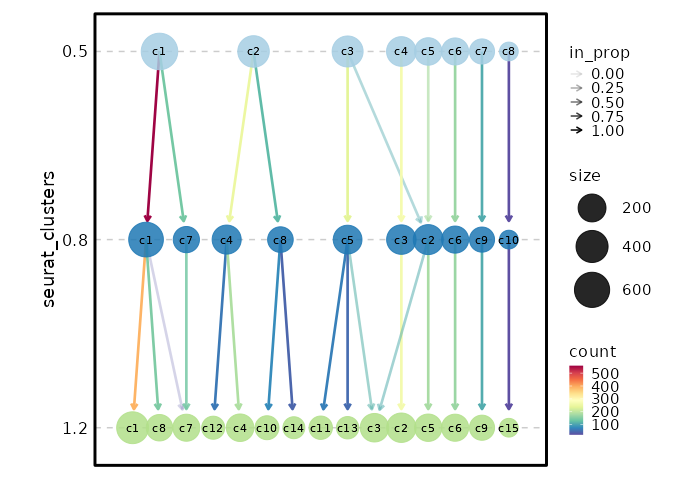

Clustree Plot

[SeuratClusterStats.envs.clustrees."Clustree Plot"]

prefix = "seurat_clusters"

devpars = {height = 500}


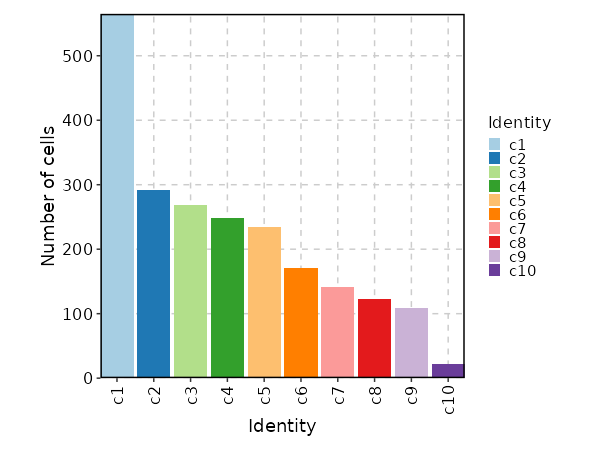

Number of cells in each cluster (Bar Chart)

[SeuratClusterStats.envs.stats."Number of cells in each cluster (Bar Chart)"]

plot_type = "bar"

x_text_angle = 90


Number of cells in each cluster by Sample (Bar Chart)

[SeuratClusterStats.envs.stats."Number of cells in each cluster by Sample (Bar Chart)"]

plot_type = "bar"

group_by = "Sample"

x_text_angle = 90


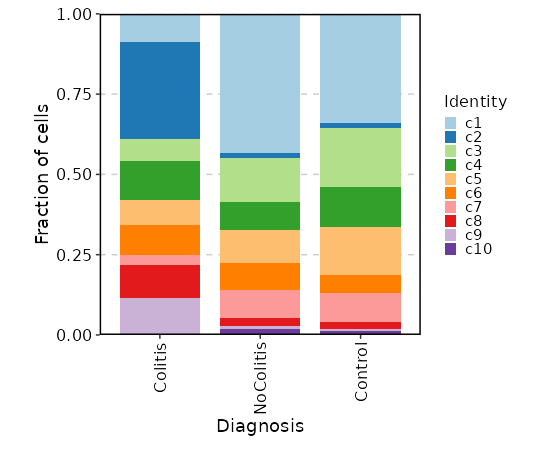

Number of cells in each cluster by Diagnosis

[SeuratClusterStats.envs.stats."Number of cells in each cluster by Diagnosis"]

plot_type = "bar"

group_by = "Diagnosis"

frac = "group"

x_text_angle = 90

swap = true

position = "stack"


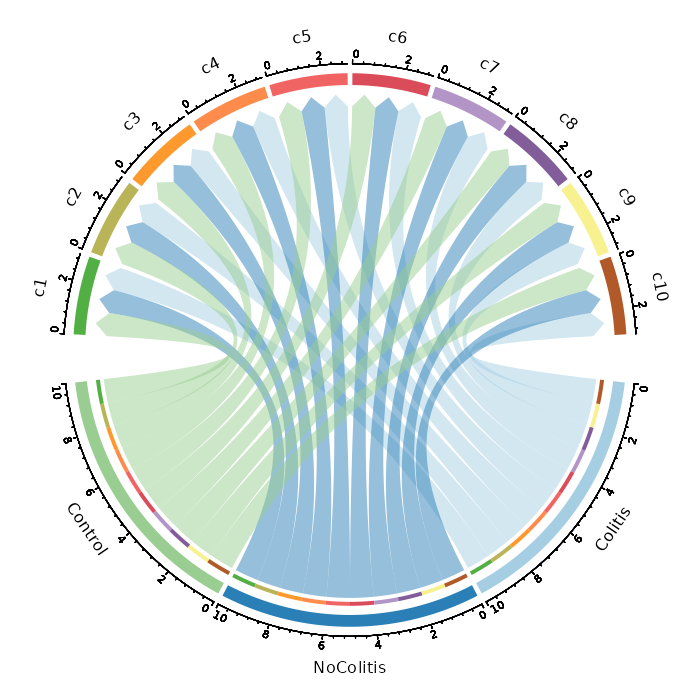

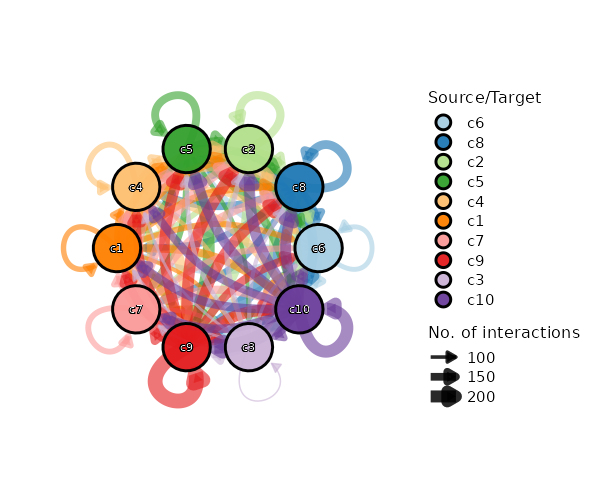

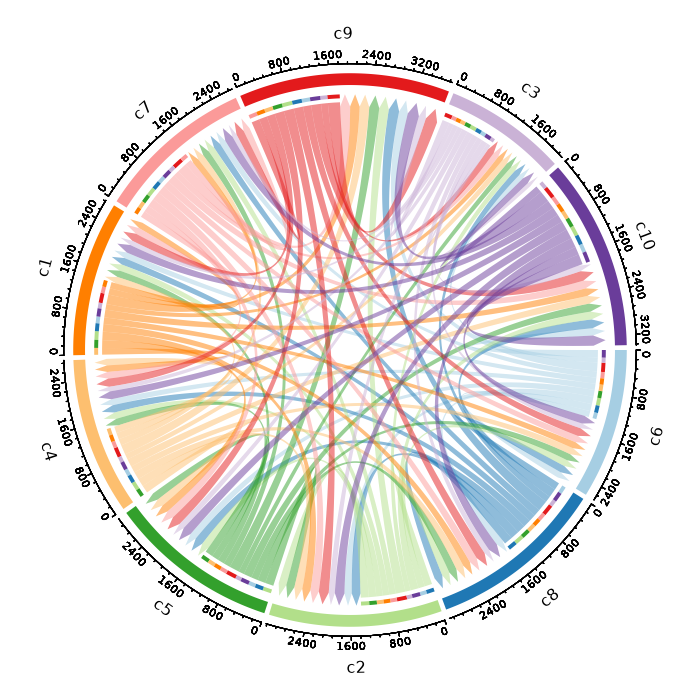

Number of cells in each cluster by Diagnosis (Circos Plot)

[SeuratClusterStats.envs.stats."Number of cells in each cluster by Diagnosis (Circos Plot)"]

plot_type = "circos"

group_by = "Diagnosis"


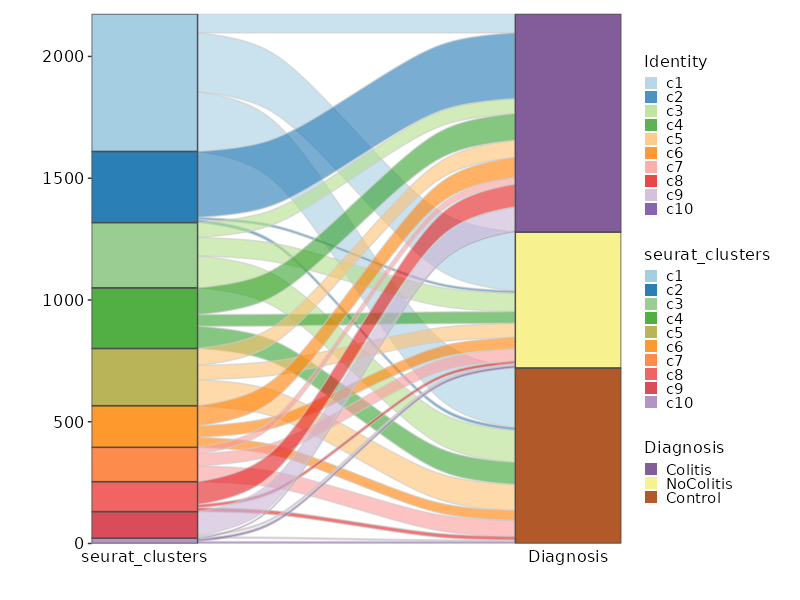

Number of cells in each cluster by Diagnosis (Sankey Plot)

[SeuratClusterStats.envs.stats."Number of cells in each cluster by Diagnosis (Sankey Plot)"]

plot_type = "sankey"

group_by = ["seurat_clusters", "Diagnosis"]

links_alpha = 0.6

devpars = {width = 800}


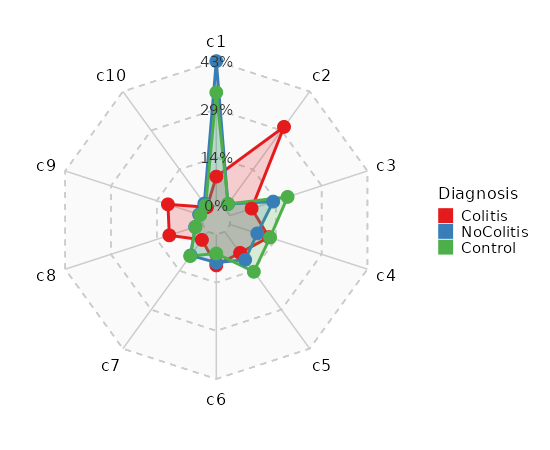

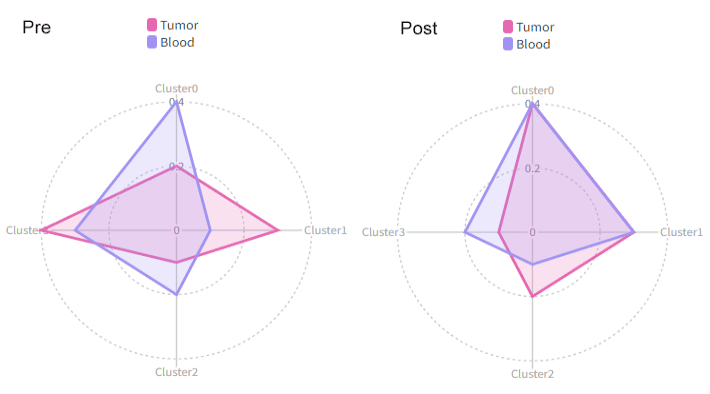

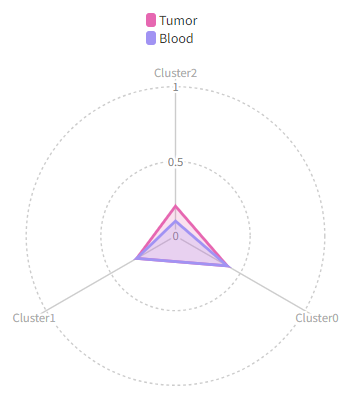

Number of cells in each cluster by Sample (Spider Plot)

[SeuratClusterStats.envs.stats."Number of cells in each cluster by Sample (Spider Plot)"]

plot_type = "spider"

group_by = "Diagnosis"

palette = "Set1"


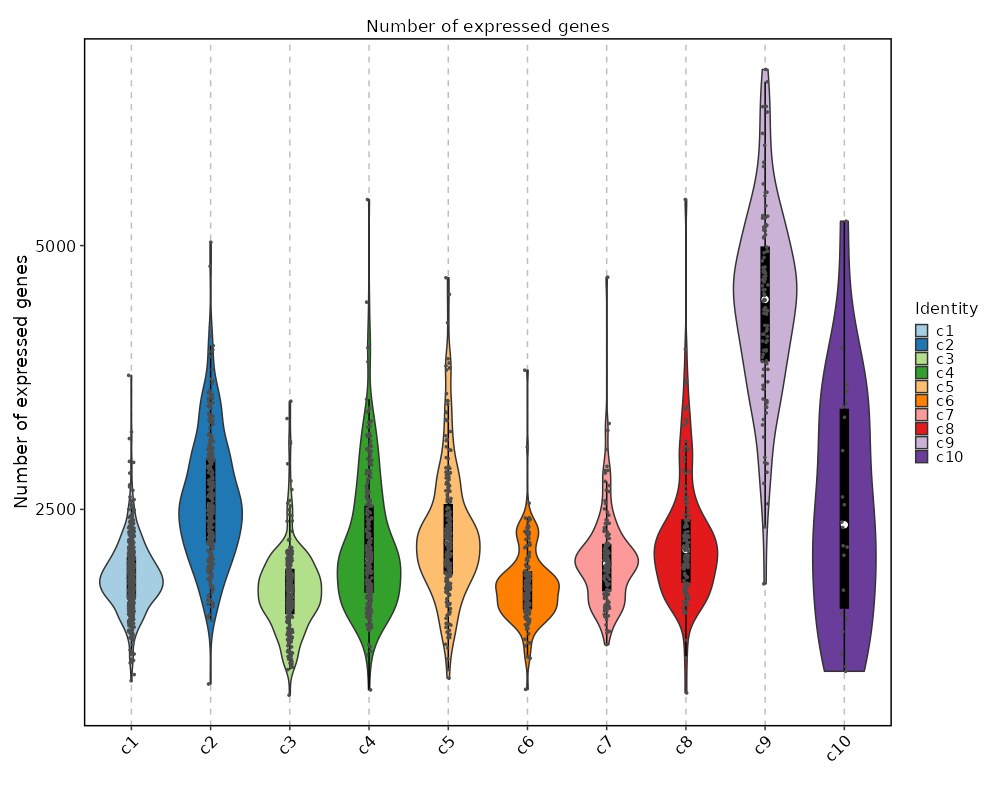

Number of genes detected in each cluster

[SeuratClusterStats.envs.ngenes."Number of genes detected in each cluster"]

plot_type = "violin"

add_box = true

add_point = true


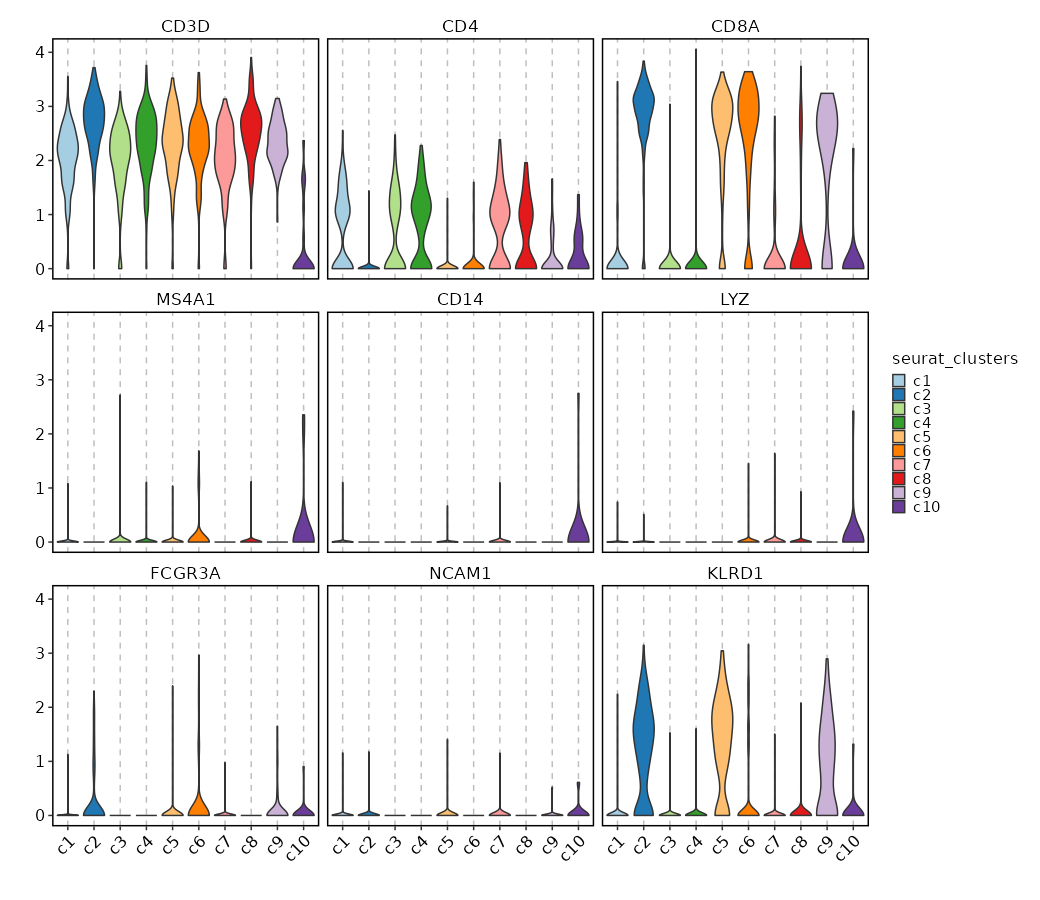

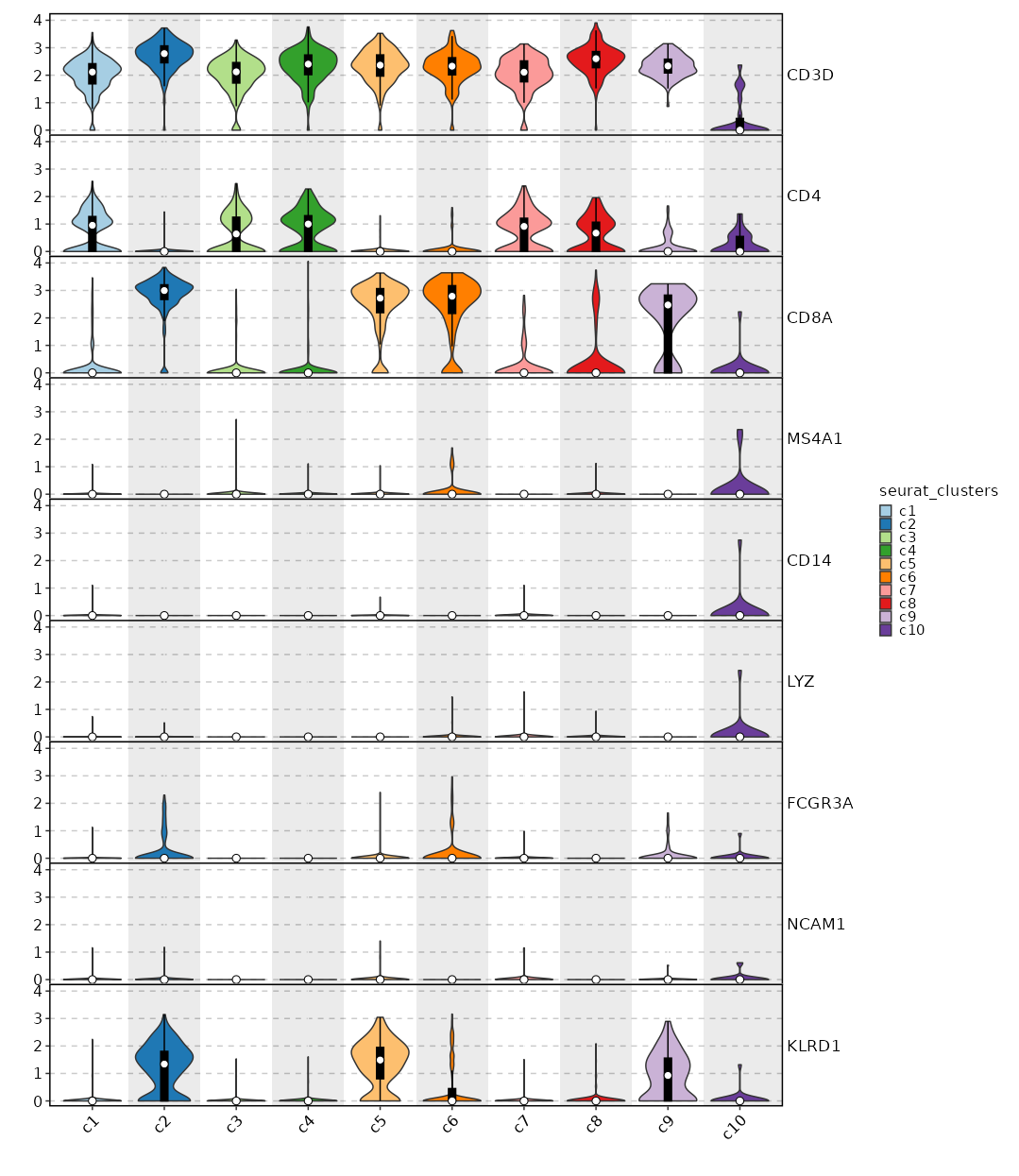

Feature Expression in Clusters (Violin Plots)

[SeuratClusterStats.envs.features_defaults]

features = ["CD3D", "CD4", "CD8A", "MS4A1", "CD14", "LYZ", "FCGR3A", "NCAM1", "KLRD1"]

[SeuratClusterStats.envs.features."Feature Expression in Clusters (Violin Plots)"]

plot_type = "violin"

ident = "seurat_clusters"


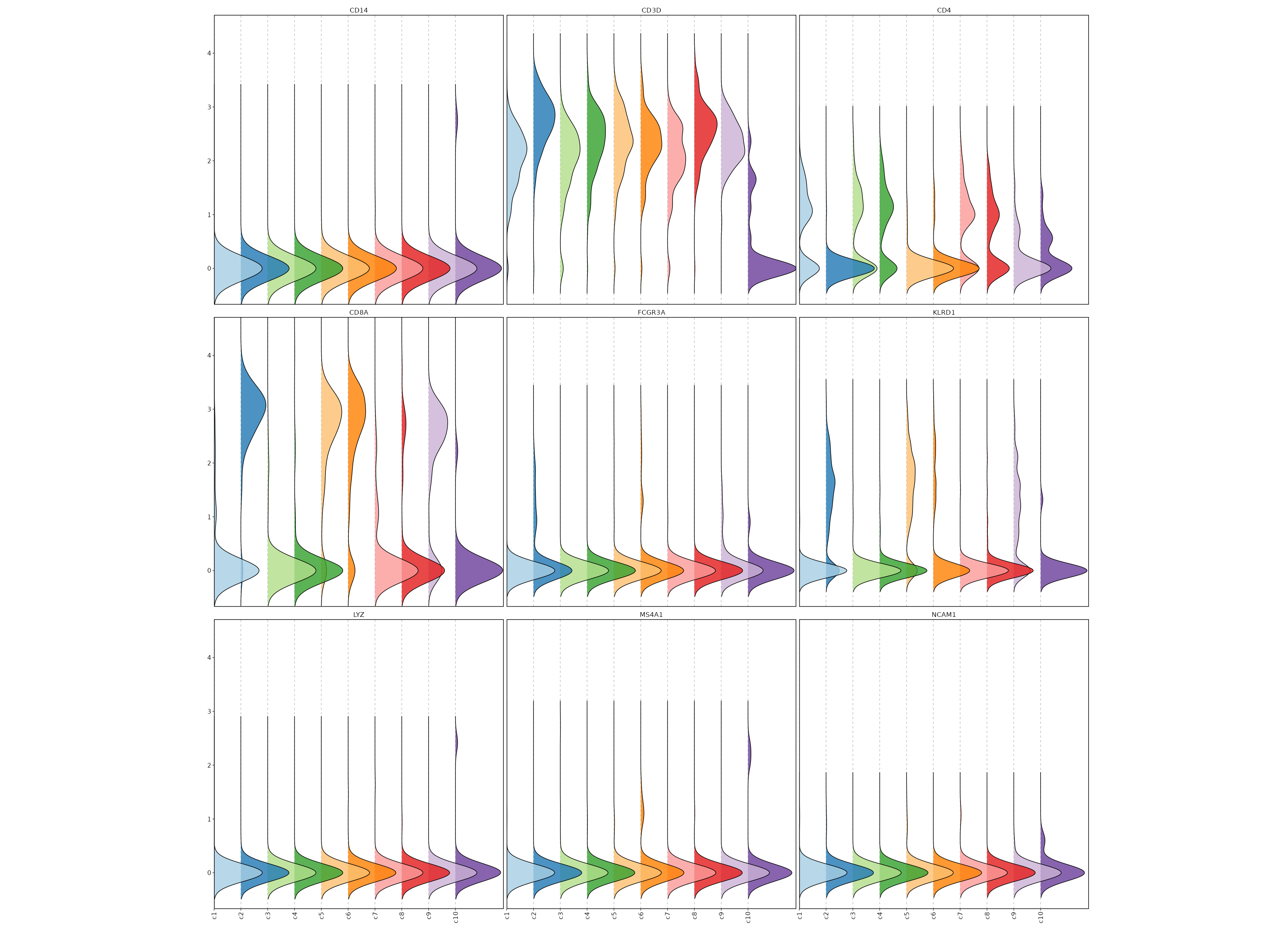

Feature Expression in Clusters (Ridge Plots)

# Using the same features as above

[SeuratClusterStats.envs.features."Feature Expression in Clusters (Ridge Plots)"]

plot_type = "ridge"

ident = "seurat_clusters"

flip = true


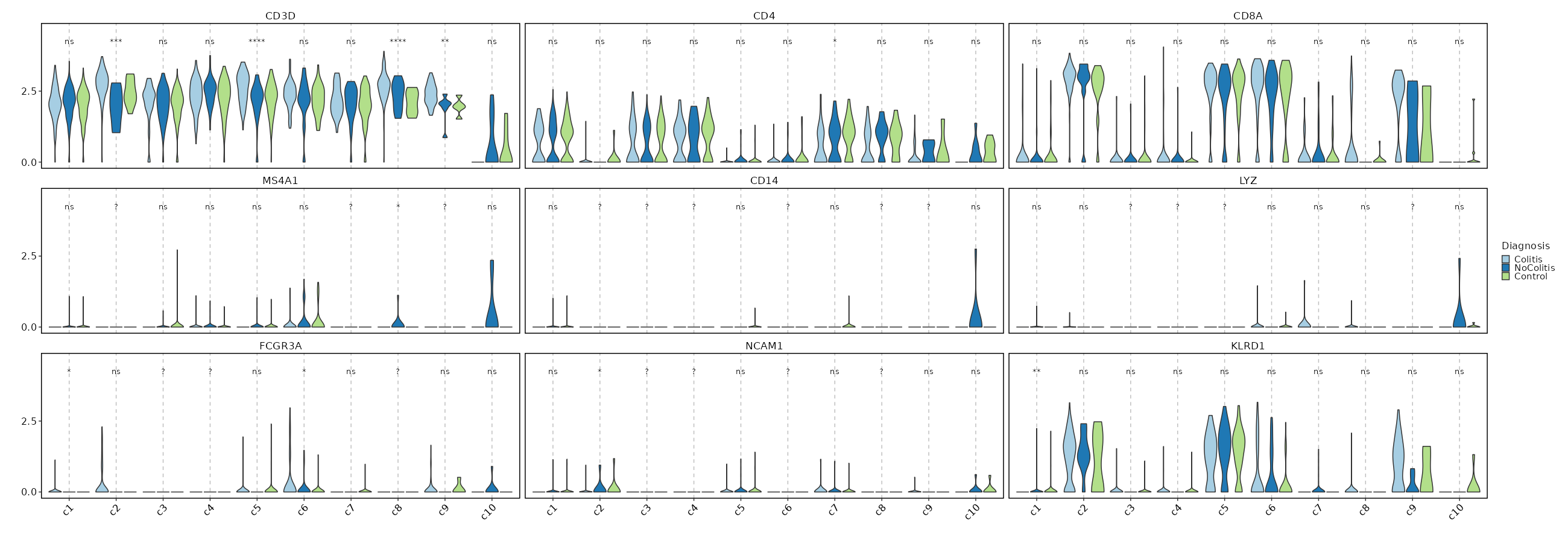

Feature Expression in Clusters by Diagnosis

# Using the same features as above

[SeuratClusterStats.envs.features."Feature Expression in Clusters by Diagnosis"]

plot_type = "violin"

group_by = "Diagnosis"

ident = "seurat_clusters"

comparisons = true

sig_label = "p.signif"


Feature Expression in Clusters (stacked)

# Using the same features as above

[SeuratClusterStats.envs.features."Feature Expression in Clusters (stacked)"]

plot_type = "violin"

ident = "seurat_clusters"

add_bg = true

stack = true

add_box = true


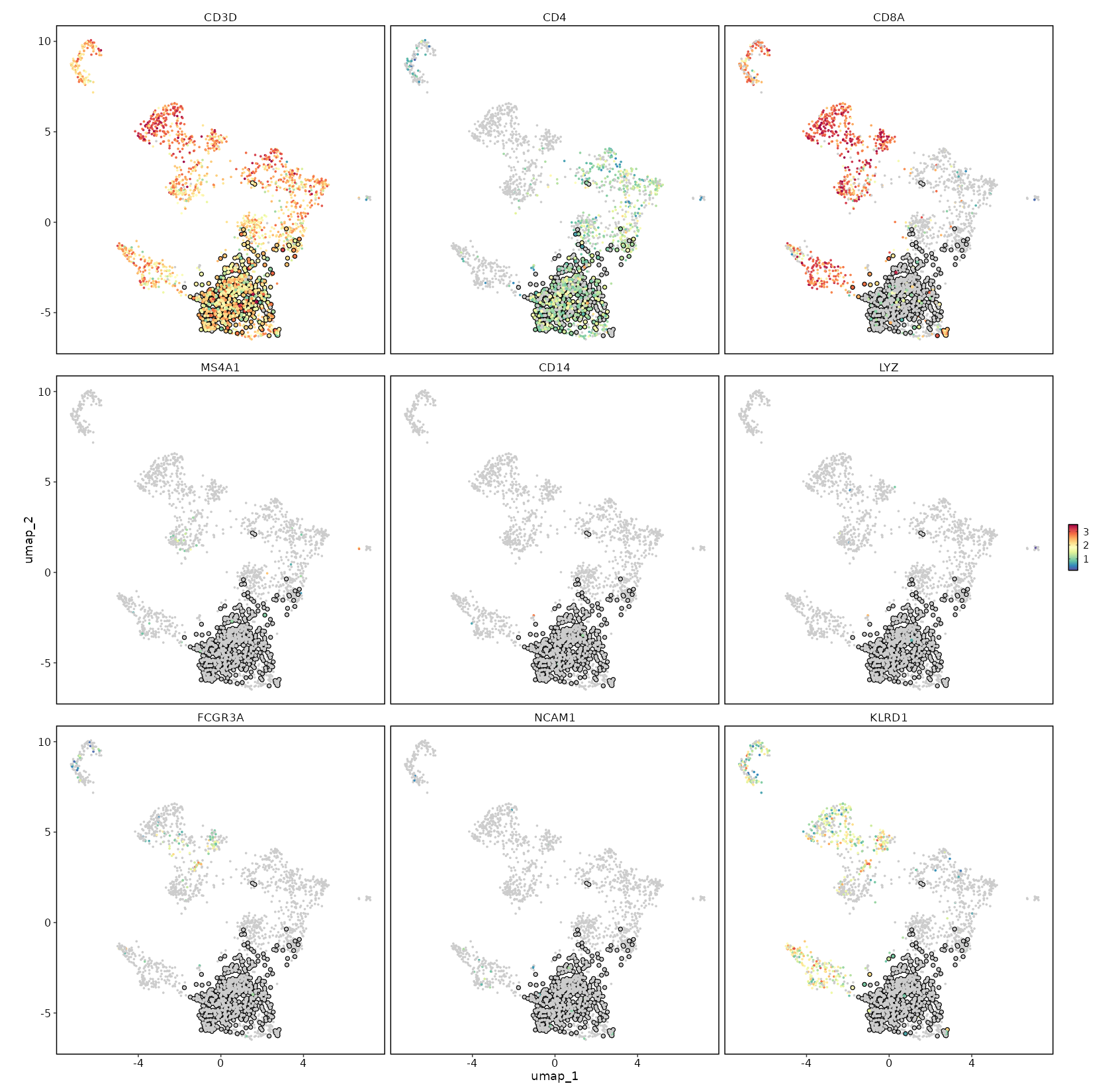

CD4 Expression on UMAP

[SeuratClusterStats.envs.features."CD4 Expression on UMAP"]

plot_type = "dim"

feature = "CD4"

highlight = "seurat_clusters == 'c1'"


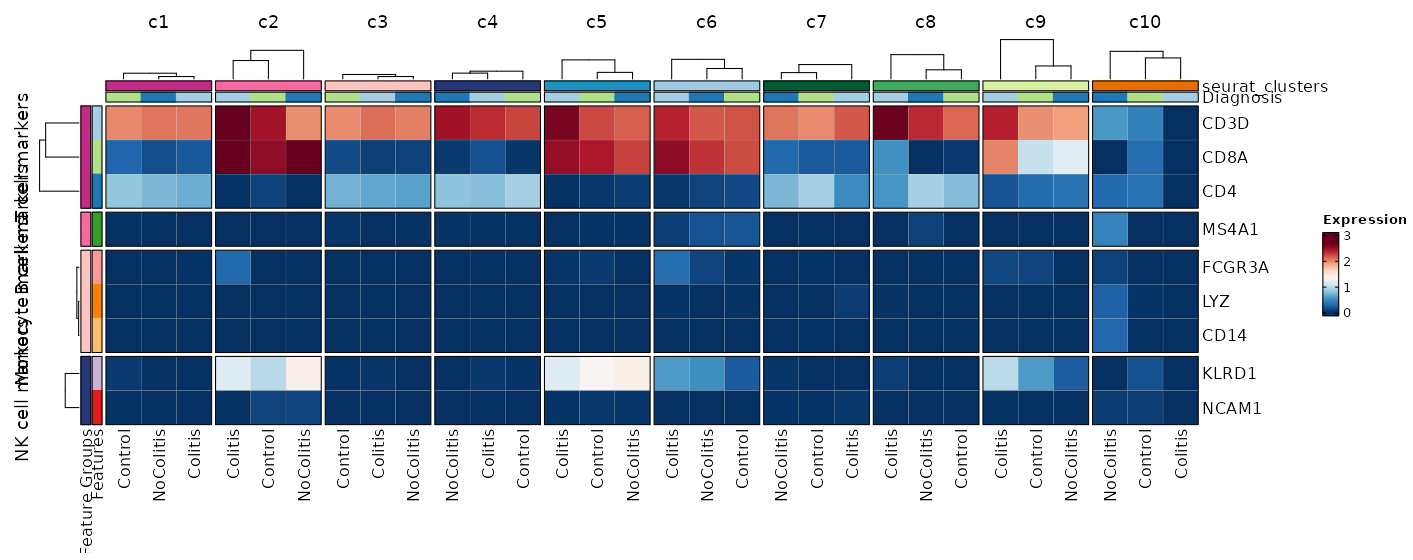

Feature Expression in Clusters by Diagnosis (Heatmap)

[SeuratClusterStats.envs.features."Feature Expression in Clusters by Diagnosis (Heatmap)"]

# Grouped features

features = {"T cell markers" = ["CD3D", "CD4", "CD8A"], "B cell markers" = ["MS4A1"], "Monocyte markers" = ["CD14", "LYZ", "FCGR3A"], "NK cell markers" = ["NCAM1", "KLRD1"]}

plot_type = "heatmap"

ident = "Diagnosis"

columns_split_by = "seurat_clusters"

name = "Expression"

devpars = {height = 560}


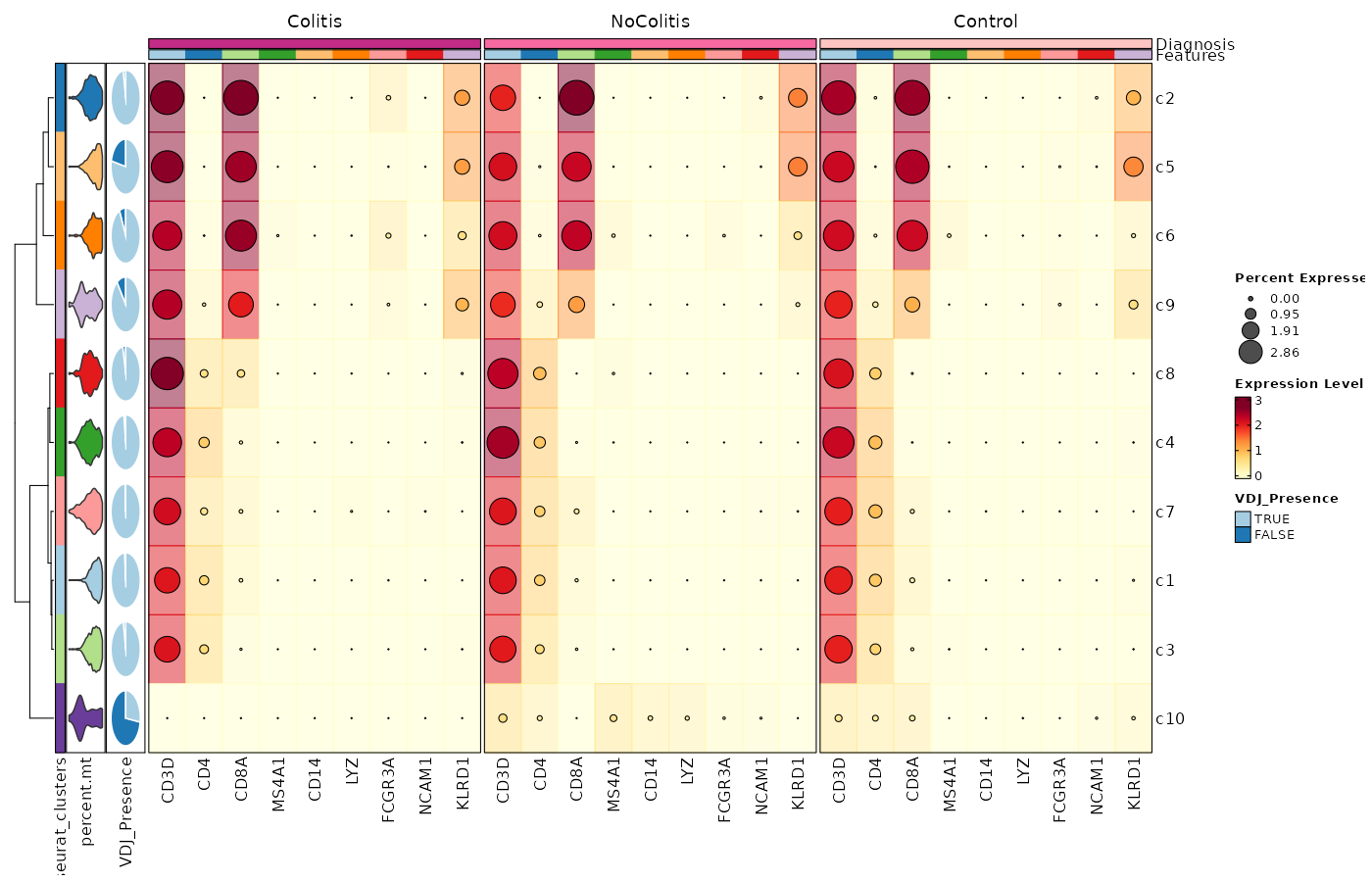

Feature Expression in Clusters by Diagnosis (Heatmap with annotations)

# Using the default features

[SeuratClusterStats.envs.features."Feature Expression in Clusters by Diagnosis (Heatmap with annotations)"]

ident = "seurat_clusters"

cell_type = "dot"

plot_type = "heatmap"

name = "Expression Level"

dot_size = "nanmean"

dot_size_name = "Percent Expressed"

add_bg = true

rows_split_by = "Diagnosis"

cluster_rows = false

flip = true

palette = "YlOrRd"

column_annotation = ["percent.mt", "VDJ_Presence"]

column_annotation_type = {"percent.mt" = "violin", VDJ_Presence = "pie"}

column_annotation_params = {"percent.mt" = {show_legend = false}}

devpars = {width = 1400, height = 900}


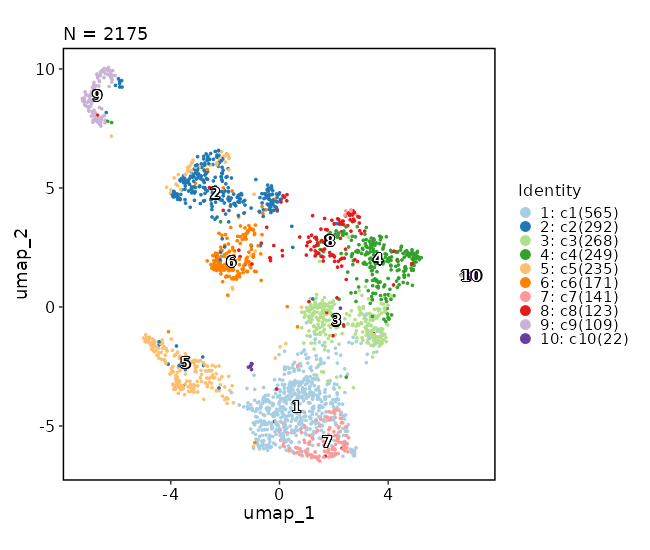

Dimensional reduction plot

[SeuratClusterStats.envs.dimplots."Dimensional reduction plot"]

label = true


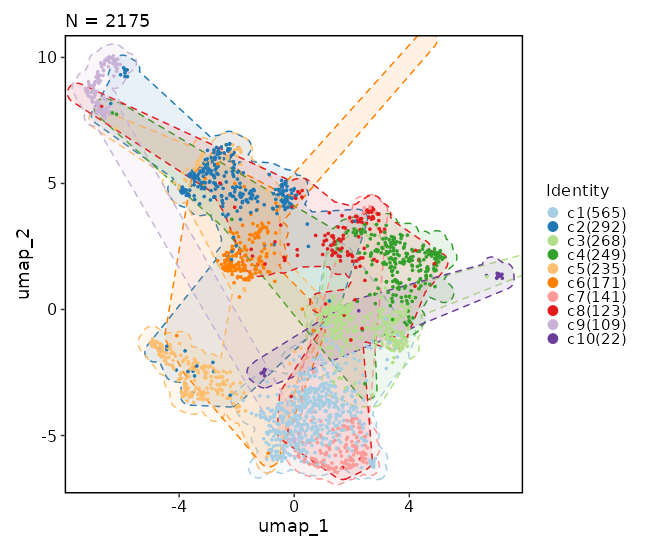

Dimensional reduction plot (with marks)

[SeuratClusterStats.envs.dimplots."Dimensional reduction plot (with marks)"]

add_mark = true

mark_linetype = 2


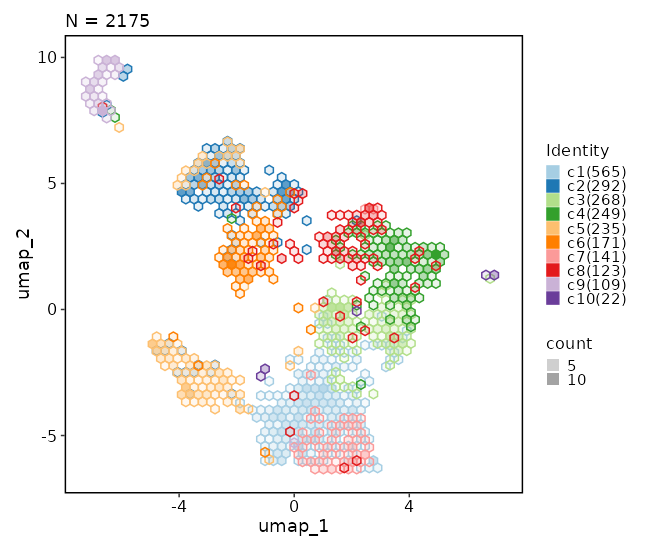

Dimensional reduction plot (with hex bins)

[SeuratClusterStats.envs.dimplots."Dimensional reduction plot (with hex bins)"]

hex = true

hex_bins = 50


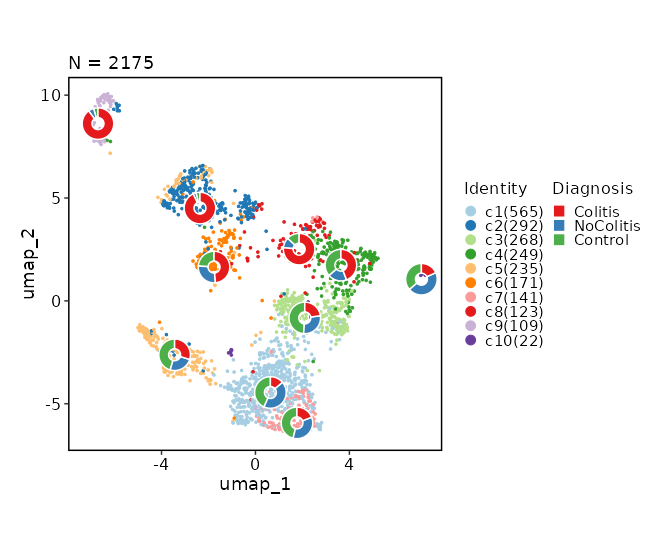

Dimensional reduction plot (with Diagnosis stats)

[SeuratClusterStats.envs.dimplots."Dimensional reduction plot (with Diagnosis stats)"]

stat_by = "Diagnosis"

stat_plot_type = "ring"

stat_plot_size = 0.15


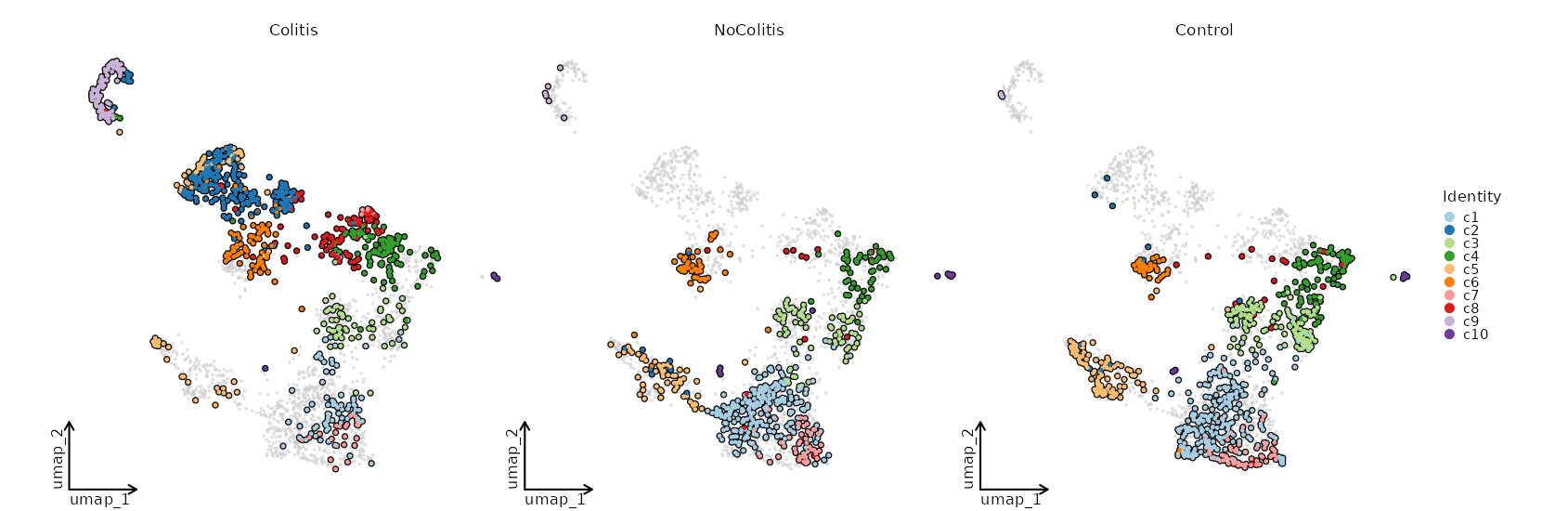

Dimensional reduction plot by Diagnosis

[SeuratClusterStats.envs.dimplots."Dimensional reduction plot by Diagnosis"]

facet_by = "Diagnosis"

highlight = true

theme = "theme_blank"


srtobj — The seurat object loaded by `SeuratClustering`

outdir — The output directory. Different types of plots will be saved in different subdirectories. For example, `clustree` plots will be saved in the `clustrees` subdirectory. For each case in `envs.clustrees`, both the png and pdf files will be saved.

- `cache` (type=auto) — Whether to cache the plots. Currently only plots for features are supported, since creating those plots can be time-consuming. If `True`, the plots will be cached in the job output directory, which will not be cleaned up when the job is rerun.
- `clustrees` (type=json) — The cases for clustree plots. Keys are the names of the plots and values are the dicts inherited from `envs.clustrees_defaults` except `prefix`. There is no default case for `clustrees`.
- `clustrees_defaults` (ns) — The parameters for the clustree plots.
    - devpars (ns): The device parameters for the clustree plot.
        - res (type=int): The resolution of the plots.
        - height (type=int): The height of the plots.
        - width (type=int): The width of the plots.
    - more_formats (type=list): The formats to save the plots other than png.
    - save_code (flag): Whether to save the code to reproduce the plot.
    - prefix (type=auto): String indicating columns containing clustering information. The trailing dot is not necessary and will be added automatically. When `TRUE`, clustrees will be plotted when there is `FindClusters` or `FindClusters.*` in the `obj@commands`. The latter is generated by `SeuratSubClustering`. This will be ignored when `envs.clustrees` is specified (the prefix of each case must be specified separately).
    - Other arguments: Passed to `scplotter::ClustreePlot`. See https://pwwang.github.io/scplotter/reference/ClustreePlot.html


- `dimplots` (type=json) — The dimensional reduction plots. Keys are the titles of the plots and values are the dicts inherited from `envs.dimplots_defaults`. It can also have other parameters from `scplotter::CellDimPlot`.
- `dimplots_defaults` (ns) — The default parameters for `dimplots`.
    - group_by: The identity to use. If it is from subclustering (reduction `sub_umap_<ident>` exists), this reduction will be used if `reduction` is set to `dim` or `auto`.
    - split_by: The column name in metadata to split the cells into different plots.
    - subset: An expression to subset the cells, will be passed to `tidyseurat::filter()`.
    - devpars (ns): The device parameters for the plots.
        - res (type=int): The resolution of the plots.
        - height (type=int): The height of the plots.
        - width (type=int): The width of the plots.
    - reduction (choice): Which dimensionality reduction to use.
        - dim: Use `Seurat::DimPlot`. First searches for `umap`, then `tsne`, then `pca`. If `ident` is from subclustering, `sub_umap_<ident>` will be used.
        - auto: Same as `dim`
        - umap: Use `Seurat::UMAPPlot`.
        - tsne: Use `Seurat::TSNEPlot`.
        - pca: Use `Seurat::PCAPlot`.
    - Other arguments: See https://pwwang.github.io/scplotter/reference/CellDimPlot.html
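The lookup order described for the `reduction` choice can be sketched in Python. This is a simplified model of the documented behaviour under the assumption that the available reductions are known as a list of names; `pick_reduction` is a hypothetical helper, and the actual resolution happens in R.

```python
def pick_reduction(requested: str, available: list[str], ident: str) -> str:
    """Resolve the reduction name for a dimensional reduction plot."""
    if requested in ("dim", "auto"):
        # A subclustering identity brings its own UMAP, which takes priority.
        sub = f"sub_umap_{ident}"
        if sub in available:
            return sub
        # Otherwise search umap, then tsne, then pca.
        for candidate in ("umap", "tsne", "pca"):
            if candidate in available:
                return candidate
        raise ValueError("no umap, tsne or pca reduction is available")
    if requested not in available:
        raise ValueError(f"reduction {requested!r} is not available")
    return requested


print(pick_reduction("dim", ["pca", "umap"], "seurat_clusters"))
print(pick_reduction("auto", ["pca", "umap", "sub_umap_subcluster"], "subcluster"))
```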


- `features` (type=json) — The plots for features, including gene expressions and columns from metadata. Keys are the titles of the cases and values are the dicts inherited from `envs.features_defaults`.
- `features_defaults` (ns) — The default parameters for `features`.
    - features (type=auto): The features to plot. It can be either a string with comma-separated features, a list of features, a file path with `file://` prefix with features (one per line), or an integer to use the top N features from `VariableFeatures(srtobj)`. It can also be a dict with the keys as the feature group names and the values as the features, which is used for heatmap to group the features.
    - order_by (type=auto): The order of the clusters to show on the plot. An expression passed to `dplyr::arrange()` on the grouped meta data frame (by `ident`). For example, you can order the clusters by the activation score of the cluster: `desc(mean(ActivationScore, na.rm = TRUE))`, supposing you have a column `ActivationScore` in the metadata. You may also specify the literal order of the clusters by a list of strings (at least two).
    - subset: An expression to subset the cells, will be passed to `tidyseurat::filter()`.
    - devpars (ns): The device parameters for the plots.
        - res (type=int): The resolution of the plots.
        - height (type=int): The height of the plots.
        - width (type=int): The width of the plots.
    - descr: The description of the plot, showing in the report.
    - more_formats (type=list): The formats to save the plots other than png.
    - save_code (flag): Whether to save the code to reproduce the plot.
    - save_data (flag): Whether to save the data used to generate the plot.
    - Other arguments: Passed to `scplotter::FeatureStatPlot`. See https://pwwang.github.io/scplotter/reference/FeatureStatPlot.html
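The accepted forms of the `features` option can be illustrated by a small Python normalizer. It is a sketch of the input shapes listed above, not the process's own parsing code; `normalize_features` and its `variable_features` argument (standing in for the ranked variable features of the object) are hypothetical.

```python
from pathlib import Path


def normalize_features(spec, variable_features: list[str]):
    """Turn a `features` spec into a list of features or a dict of groups."""
    if isinstance(spec, dict):
        # Grouped features, e.g. for heatmaps: group name -> features.
        return {group: list(genes) for group, genes in spec.items()}
    if isinstance(spec, bool):
        raise TypeError("features cannot be a boolean")
    if isinstance(spec, int):
        # An integer selects the top N variable features.
        return variable_features[:spec]
    if isinstance(spec, (list, tuple)):
        return list(spec)
    if isinstance(spec, str):
        if spec.startswith("file://"):
            # A file with one feature per line.
            lines = Path(spec[len("file://"):]).read_text().splitlines()
            return [line.strip() for line in lines if line.strip()]
        # A comma-separated string.
        return [part.strip() for part in spec.split(",") if part.strip()]
    raise TypeError(f"unsupported features spec: {spec!r}")


print(normalize_features("CD3D, CD4, CD8A", []))
print(normalize_features(2, ["LYZ", "CD14", "MS4A1"]))
```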


- `mutaters` (type=json) — The mutaters to mutate the metadata to subset the cells. The mutaters will be applied in the order specified. You can also use the clone selectors to select the TCR clones/clusters. See https://pwwang.github.io/scplotter/reference/clone_selectors.html.
- `ngenes` (type=json) — The number of genes expressed in each cell. Keys are the names of the plots and values are the dicts inherited from `envs.ngenes_defaults`.
- `ngenes_defaults` (ns) — The default parameters for `ngenes`, used to plot the number of genes expressed in each cell.
    - more_formats (type=list): The formats to save the plots other than png.
    - subset: An expression to subset the cells, will be passed to `tidyseurat::filter()`.
    - devpars (ns): The device parameters for the plots.
        - res (type=int): The resolution of the plots.
        - height (type=int): The height of the plots.
        - width (type=int): The width of the plots.


- `stats` (type=json) — The number/fraction of cells to plot. Keys are the names of the plots and values are the dicts inherited from `envs.stats_defaults`.
- `stats_defaults` (ns) — The default parameters for `stats`. This is to do some basic statistics on the clusters/cells. For more comprehensive analysis, see https://pwwang.github.io/scplotter/reference/CellStatPlot.html. The parameters from the cases can overwrite the default parameters.
    - subset: An expression to subset the cells, will be passed to `tidyseurat::filter()`.
    - devpars (ns): The device parameters for the plots.
        - res (type=int): The resolution of the plots.
        - height (type=int): The height of the plots.
        - width (type=int): The width of the plots.
    - descr: The description of the plot, showing in the report.
    - more_formats (type=list): The formats to save the plots other than png.
    - save_code (flag): Whether to save the code to reproduce the plot.
    - save_data (flag): Whether to save the data used to generate the plot.
    - Other arguments: Passed to `scplotter::CellStatPlot`. See https://pwwang.github.io/scplotter/reference/CellStatPlot.html.


- r-seurat
    - check: {{proc.lang}} -e "library(Seurat)"

- `__init_subclass__()` — Do the requirements inferring since we need them to build up the process relationship
- `from_proc(proc, name, desc, envs, envs_depth, cache, export, error_strategy, num_retries, forks, input_data, order, plugin_opts, requires, scheduler, scheduler_opts, submission_batch)` (Type) — Create a subclass of Proc using another Proc subclass or Proc itself
- `gc()` — GC process for the process to save memory after it's done
- `log(level, msg, *args, logger)` — Log message for the process
- `run()` — Init all other properties and jobs

pipen.proc.ProcMeta(name, bases, namespace, **kwargs)

Meta class for Proc

- `__call__(cls, *args, **kwds)` (Proc) — Make sure Proc subclasses are singletons
- `__instancecheck__(cls, instance)` — Override for isinstance(instance, cls).
- `__repr__(cls)` (str) — Representation for the Proc subclasses
- `__subclasscheck__(cls, subclass)` — Override for issubclass(subclass, cls).
- `register(cls, subclass)` — Register a virtual subclass of an ABC.

register(cls, subclass)

Register a virtual subclass of an ABC.

Returns the subclass, to allow usage as a class decorator.

__instancecheck__(cls, instance)

Override for isinstance(instance, cls).

__subclasscheck__(cls, subclass)

Override for issubclass(subclass, cls).

__repr__(cls) → str

Representation for the Proc subclasses

__call__(cls, *args, **kwds)

Make sure Proc subclasses are singletons

- `*args` (Any) and `**kwds` (Any) — Arguments for the constructor

Returns the Proc instance.

from_proc(proc, name=None, desc=None, envs=None, envs_depth=None, cache=None, export=None, error_strategy=None, num_retries=None, forks=None, input_data=None, order=None, plugin_opts=None, requires=None, scheduler=None, scheduler_opts=None, submission_batch=None)

Create a subclass of Proc using another Proc subclass or Proc itself

- `proc` (Type) — The Proc subclass
- `name` (str, optional) — The new name of the process
- `desc` (str, optional) — The new description of the process
- `envs` (Mapping, optional) — The arguments of the process, which will overwrite the parent's. Items that are not specified will be inherited
- `envs_depth` (int, optional) — How deep to update the envs when subclassed.
- `cache` (bool, optional) — Whether we should check the cache for the jobs
- `export` (bool, optional) — When True, the results will be exported to `<pipeline.outdir>`. Defaults to None, meaning only end processes will export. You can set it to True/False to enable or disable exporting for processes
- `error_strategy` (str, optional) — How to deal with the errors
    - retry, ignore, halt
    - halt to halt the whole pipeline, no submitting new jobs
    - terminate to just terminate the job itself
- `num_retries` (int, optional) — How many times to retry the jobs once an error occurs
- `forks` (int, optional) — New forks for the new process
- `input_data` (Any, optional) — The input data for the process. Only when this process is a start process
- `order` (int, optional) — The order to execute the new process
- `plugin_opts` (Mapping, optional) — The new plugin options, unspecified items will be inherited.
- `requires` (Sequence, optional) — The required processes for the new process
- `scheduler` (str, optional) — The new scheduler to run the new process
- `scheduler_opts` (Mapping, optional) — The new scheduler options, unspecified items will be inherited.
- `submission_batch` (int, optional) — How many jobs to be submitted simultaneously.

The new process class
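The idea of deriving a new process class while inheriting unspecified settings can be sketched in plain Python. This is an illustrative assumption about the general pattern, not pipen's code: `derive` and `Base` are hypothetical, and the merge here is shallow, whereas the real method also accepts `envs_depth` to control how deep the envs are updated.

```python
def derive(proc, name=None, envs=None, **attrs):
    """Create a subclass of `proc`, overriding only what was given."""
    # Arguments left as None are not set, so the parent's values are inherited
    namespace = {k: v for k, v in attrs.items() if v is not None}
    if envs is not None:
        # Shallow merge: given keys win, missing keys come from the parent
        namespace["envs"] = {**getattr(proc, "envs", {}), **envs}
    return type(name or proc.__name__, (proc,), namespace)


class Base:
    """Hypothetical parent process with two envs items."""

    envs = {"ncores": 1, "assay": "RNA"}
    forks = 1


Derived = derive(Base, name="Derived", envs={"ncores": 4}, forks=8)
```

`Derived.envs` keeps `assay` from the parent and takes the new `ncores`, and the parent class itself is left unchanged.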

__init_subclass__()

Infer the requirements, since they are needed to build up the process relationships

run()

Init all other properties and jobs

gc()

GC process for the process to save memory after it's done

log(level, msg, *args, logger=<LoggerAdapter pipen.core (WARNING)>)

Log message for the process

- level (int | str): The log level of the record
- msg (str): The message to log
- *args: The arguments to format the message
- logger (LoggerAdapter, optional): The logging logger

biopipen.ns.scrna.ModuleScoreCalculator(*args, **kwds) → Proc

Calculate the module scores for each cell

The module scores are calculated by Seurat::AddModuleScore(), or by Seurat::CellCycleScoring() for cell cycle scores.

The module scores are calculated as the average expression levels of each program on single cell level, subtracted by the aggregated expression of control feature sets. All analyzed features are binned based on averaged expression, and the control features are randomly selected from each bin.
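The scoring scheme described above can be sketched in pure Python. This is a simplified illustration, not Seurat's implementation: the function name `module_score`, the toy expression table and the small `nbin`/`ctrl` values are assumptions, and module genes are excluded from the control pool here to keep the toy example easy to follow.

```python
import random
from statistics import mean


def module_score(expr, features, nbin=3, ctrl=2, seed=1):
    """expr maps gene -> list of per-cell expression values."""
    rng = random.Random(seed)
    n_cells = len(next(iter(expr.values())))

    # Rank genes by average expression and cut the ranking into nbin bins
    ranked = sorted(expr, key=lambda g: mean(expr[g]))
    size = -(-len(ranked) // nbin)  # ceiling division
    bin_of = {g: i // size for i, g in enumerate(ranked)}

    # For every module feature, draw control genes from the same bin
    controls = []
    for feat in features:
        pool = [g for g in ranked if bin_of[g] == bin_of[feat] and g not in features]
        controls.extend(rng.sample(pool, min(ctrl, len(pool))))

    # Score = mean module expression minus mean control expression, per cell
    scores = []
    for c in range(n_cells):
        feat_avg = mean(expr[g][c] for g in features)
        ctrl_avg = mean(expr[g][c] for g in controls) if controls else 0.0
        scores.append(feat_avg - ctrl_avg)
    return scores


# Toy data: six hypothetical genes measured in four cells
expr = {
    "A": [5, 5, 0, 0],
    "B": [4, 6, 0, 0],
    "C": [1, 1, 1, 1],
    "D": [2, 2, 2, 2],
    "E": [3, 3, 3, 3],
    "F": [0, 1, 0, 1],
}
scores = module_score(expr, ["A", "B"])
```

Cells that express the module genes above the level of similarly expressed control genes get positive scores; cells that express them below that level get negative scores.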

- cache: Should we detect whether the jobs are cached?
- desc: The description of the process. Will use the summary from the docstring by default.
- dirsig: When checking the signature for caching, whether we should walk through the content of the directory. This is sometimes time-consuming if the directory is big.
- envs: The arguments that are job-independent, useful for common options across jobs.
- envs_depth: How deep to update the envs when subclassed.
- error_strategy: How to deal with the errors
  - retry, ignore, halt
  - halt to halt the whole pipeline, no submitting new jobs
  - terminate to just terminate the job itself
- export: When True, the results will be exported to <pipeline.outdir>. Defaults to None, meaning only end processes will export. You can set it to True/False to enable or disable exporting for processes
- forks: How many jobs to run simultaneously?
- input: The keys for the input channel
- input_data: The input data (will be computed for dependent processes)
- lang: The language for the script to run. Should be the path to the interpreter if lang is not in $PATH.
- name: The name of the process. Will use the class name by default.
- nexts: Computed from requires to build the process relationships
- num_retries: How many times to retry the jobs once an error occurs
- order: The execution order for this process. The bigger the number is, the later the process will be executed. Default: 0. Note that the dependent processes will always be executed first. This doesn't work for start processes either, whose orders are determined by Pipen.set_starts()
- output: The output keys for the output channel (the data will be computed)
- output_data: The output data (to pass to the next processes)
- plugin_opts: Options for process-level plugins
- requires: The dependency processes
- scheduler: The scheduler to run the jobs
- scheduler_opts: The options for the scheduler
- script: The script template for the process
- submission_batch: How many jobs to be submitted simultaneously. The program entrance for some schedulers may take too much resources when submitting a job or checking the job status. So we may use a smaller number here to limit the simultaneous submissions.
- template: Define the template engine to use. This could be either a template engine or a dict with key engine indicating the template engine and the rest the arguments passed to the constructor of the pipen.template.Template object. The template engine could be either the name of the engine, currently jinja2 and liquidpy are supported, or a subclass of pipen.template.Template. You can subclass pipen.template.Template to use your own template engine.

- srtobj: The seurat object loaded by SeuratClustering

- rdsfile: The seurat object with module scores added to the metadata.

- defaults (ns): The default parameters for modules.
  - features: The features to calculate the scores. Multiple features should be separated by comma. You can also specify cc.genes or cc.genes.updated.2019 to use the cell cycle genes to calculate cell cycle scores. If so, three columns will be added to the metadata, including S.Score, G2M.Score and Phase. Only one type of cell cycle scores can be calculated at a time.
  - nbin (type=int): Number of bins of aggregate expression levels for all analyzed features.
  - ctrl (type=int): Number of control features selected from the same bin per analyzed feature.
  - k (flag): Use feature clusters returned from DoKMeans.
  - assay: The assay to use.
  - seed (type=int): Set a random seed.
  - search (flag): Search for symbol synonyms for features in features that don't match features in object?
  - keep (flag): Keep the scores for each feature? Only works for non-cell cycle scores.
  - agg (choice): The aggregation function to use. Only works for non-cell cycle scores.
    - mean: The mean of the expression levels
    - median: The median of the expression levels
    - sum: The sum of the expression levels
    - max: The max of the expression levels
    - min: The min of the expression levels
    - var: The variance of the expression levels
    - sd: The standard deviation of the expression levels

- modules (type=json): The modules to calculate the scores. Keys are the names of the expression programs and values are the dicts inherited from env.defaults. Here are some examples:

  { "CellCycle": {"features": "cc.genes.updated.2019"}, "Exhaustion": {"features": "HAVCR2,ENTPD1,LAYN,LAG3"}, "Activation": {"features": "IFNG"}, "Proliferation": {"features": "STMN1,TUBB"} }

  For CellCycle, the columns S.Score, G2M.Score and Phase will be added to the metadata. S.Score and G2M.Score are the cell cycle scores for each cell, and Phase is the cell cycle phase for each cell.

  You can also add Diffusion Components (DC) to the modules: {"DC": {"features": 2, "kind": "diffmap"}} will perform diffusion map as a reduction and add the first 2 components as DC_1 and DC_2 to the metadata. diffmap is a shortcut for diffusion_map. Other key-value pairs will pass to destiny::DiffusionMap(). You can later plot the diffusion map by using reduction = "DC" in env.dimplots in SeuratClusterStats. This requires the SingleCellExperiment and destiny R packages.

- post_mutaters (type=json): The mutaters to mutate the metadata after calculating the module scores. The mutaters will be applied in the order specified. This is useful when you want to create new scores based on the calculated module scores.
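How each module inherits from the defaults can be sketched as a simple dictionary merge. This is only an illustration of the inheritance idea, not biopipen's code: the helper names are hypothetical, the `DEFAULTS` values are assumptions (`nbin=24` and `ctrl=100` mirror the defaults of Seurat::AddModuleScore(), the others are guesses), and the `ctrl` override on `Proliferation` was added for the example.

```python
import json

# Assumed default values, for illustration only
DEFAULTS = {"nbin": 24, "ctrl": 100, "keep": False, "agg": "mean"}

# The modules from the example above, plus a hypothetical "ctrl" override
MODULES = json.loads("""
{
  "CellCycle": {"features": "cc.genes.updated.2019"},
  "Exhaustion": {"features": "HAVCR2,ENTPD1,LAYN,LAG3"},
  "Activation": {"features": "IFNG"},
  "Proliferation": {"features": "STMN1,TUBB", "ctrl": 50}
}
""")


def resolve_modules(modules, defaults):
    """Fill in every option a module does not set from the defaults."""
    return {name: {**defaults, **opts} for name, opts in modules.items()}


def feature_list(module):
    """Split the comma-separated features string into gene names."""
    return [f.strip() for f in module["features"].split(",")]


resolved = resolve_modules(MODULES, DEFAULTS)
```

With this merge, `Exhaustion` picks up every default unchanged, while `Proliferation` keeps its own `ctrl` and inherits the rest.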

- __init_subclass__(): Infer the requirements, since they are needed to build up the process relationships
- from_proc(proc, name, desc, envs, envs_depth, cache, export, error_strategy, num_retries, forks, input_data, order, plugin_opts, requires, scheduler, scheduler_opts, submission_batch) (Type): Create a subclass of Proc using another Proc subclass or Proc itself
- gc(): GC process for the process to save memory after it's done
- log(level, msg, *args, logger): Log message for the process
- run(): Init all other properties and jobs

pipen.proc.ProcMeta(name, bases, namespace, **kwargs)

Meta class for Proc

- __call__(cls, *args, **kwds) (Proc): Make sure Proc subclasses are singletons
- __instancecheck__(cls, instance): Override for isinstance(instance, cls).
- __repr__(cls) (str): Representation for the Proc subclasses
- __subclasscheck__(cls, subclass): Override for issubclass(subclass, cls).
- register(cls, subclass): Register a virtual subclass of an ABC.

register(cls, subclass)

Register a virtual subclass of an ABC.

Returns the subclass, to allow usage as a class decorator.

__instancecheck__(cls, instance)

Override for isinstance(instance, cls).

__subclasscheck__(cls, subclass)

Override for issubclass(subclass, cls).

__repr__(cls) → str

Representation for the Proc subclasses

__call__(cls, *args, **kwds)

Make sure Proc subclasses are singletons

- *args (Any) and **kwds (Any): Arguments for the constructor

Returns: The Proc instance

from_proc(proc, name=None, desc=None, envs=None, envs_depth=None, cache=None, export=None, error_strategy=None, num_retries=None, forks=None, input_data=None, order=None, plugin_opts=None, requires=None, scheduler=None, scheduler_opts=None, submission_batch=None)

Create a subclass of Proc using another Proc subclass or Proc itself

- proc (Type): The Proc subclass
- name (str, optional): The new name of the process
- desc (str, optional): The new description of the process
- envs (Mapping, optional): The arguments of the process, which overwrite the parent's. Items that are not specified will be inherited.
- envs_depth (int, optional): How deep to update the envs when subclassed.
- cache (bool, optional): Whether we should check the cache for the jobs
- export (bool, optional): When True, the results will be exported to <pipeline.outdir>. Defaults to None, meaning only end processes will export. You can set it to True/False to enable or disable exporting for processes
- error_strategy (str, optional): How to deal with the errors
  - retry, ignore, halt
  - halt to halt the whole pipeline, no submitting new jobs
  - terminate to just terminate the job itself
- num_retries (int, optional): How many times to retry the jobs once an error occurs
- forks (int, optional): New forks for the new process
- input_data (Any, optional): The input data for the process. Only used when this process is a start process
- order (int, optional): The order to execute the new process
- plugin_opts (Mapping, optional): The new plugin options; unspecified items will be inherited.
- requires (Sequence, optional): The required processes for the new process
- scheduler (str, optional): The new scheduler to run the new process
- scheduler_opts (Mapping, optional): The new scheduler options; unspecified items will be inherited.
- submission_batch (int, optional): How many jobs to be submitted simultaneously.

The new process class

__init_subclass__()

Infer the requirements, since they are needed to build up the process relationships

run()

Init all other properties and jobs

gc()

GC process for the process to save memory after it's done

log(level, msg, *args, logger=<LoggerAdapter pipen.core (WARNING)>)

Log message for the process

- level (int | str): The log level of the record
- msg (str): The message to log
- *args: The arguments to format the message
- logger (LoggerAdapter, optional): The logging logger

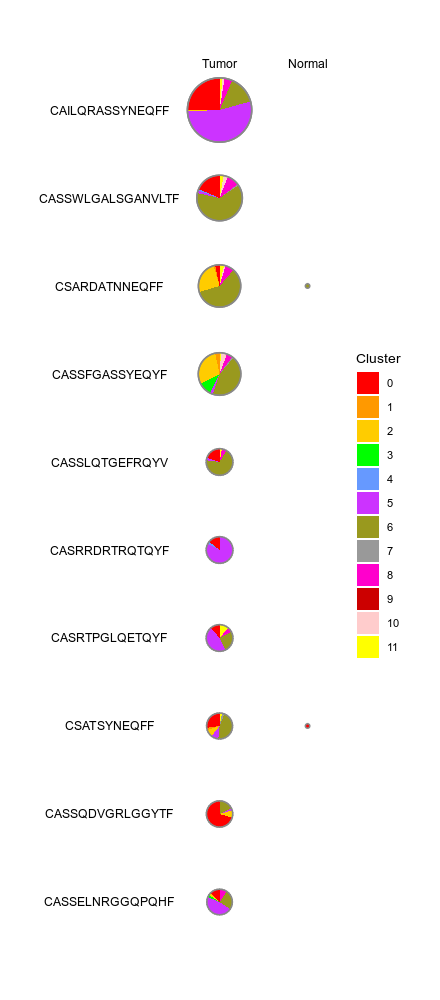

biopipen.ns.scrna.CellsDistribution(*args, **kwds) → Proc

Distribution of cells (e.g. in a TCR clone) from different groups for each cluster

This generates a set of pie charts showing the proportion of cells in each cluster. Rows are the cell identities (e.g. TCR clones or TCR clusters) and columns are groups (e.g. clinical groups).
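The numbers behind each pie chart can be sketched as per-cluster proportions within every (identity, group) cell of the grid. This is a minimal illustration under assumed inputs, not the process's actual script: `cluster_proportions` and the clone, group and cluster labels below are hypothetical.

```python
from collections import Counter, defaultdict


def cluster_proportions(cells):
    """cells: iterable of (identity, group, cluster) tuples, one per cell."""
    counts = defaultdict(Counter)
    for identity, group, cluster in cells:
        # One pie per (row identity, column group); slices are clusters
        counts[(identity, group)][cluster] += 1
    return {
        key: {cl: n / sum(c.values()) for cl, n in c.items()}
        for key, c in counts.items()
    }


# Hypothetical cells: (TCR clone, clinical group, cluster)
cells = [
    ("Clone1", "Pre", "c1"),
    ("Clone1", "Pre", "c1"),
    ("Clone1", "Pre", "c1"),
    ("Clone1", "Pre", "c2"),
    ("Clone1", "Post", "c2"),
    ("Clone2", "Pre", "c1"),
]
props = cluster_proportions(cells)
```

Each key of `props` corresponds to one pie in the grid, and its values are the slice sizes for that pie.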

- cache: Should we detect whether the jobs are cached?
- desc: The description of the process. Will use the summary from the docstring by default.
- dirsig: When checking the signature for caching, whether we should walk through the content of the directory. This is sometimes time-consuming if the directory is big.
- envs: The arguments that are job-independent, useful for common options across jobs.
- envs_depth: How deep to update the envs when subclassed.
- error_strategy: How to deal with the errors
  - retry, ignore, halt
  - halt to halt the whole pipeline, no submitting new jobs
  - terminate to just terminate the job itself