biopipen.ns.stats

Provides processes for statistics.

ChowTest(Proc) — Massive Chow tests.</>Mediation(Proc) — Mediation analysis.</>LiquidAssoc(Proc) — Liquid association tests.</>DiffCoexpr(Proc) — Differential co-expression analysis.</>MetaPvalue(Proc) — Calulation of meta p-values.</>MetaPvalue1(Proc) — Calulation of meta p-values.</>

biopipen.ns.stats.ChowTest(*args, **kwds) → Proc

Massive Chow tests.

See Also https://en.wikipedia.org/wiki/Chow_test

cache— Should we detect whether the jobs are cached?desc— The description of the process. Will use the summary fromthe docstring by default.dirsig— When checking the signature for caching, whether should we walkthrough the content of the directory? This is sometimes time-consuming if the directory is big.envs— The arguments that are job-independent, useful for common optionsacross jobs.envs_depth— How deep to update the envs when subclassed.error_strategy— How to deal with the errors- - retry, ignore, halt

- - halt to halt the whole pipeline, no submitting new jobs

- - terminate to just terminate the job itself

export— When True, the results will be exported to<pipeline.outdir>Defaults to None, meaning only end processes will export. You can set it to True/False to enable or disable exporting for processesforks— How many jobs to run simultaneously?input— The keys for the input channelinput_data— The input data (will be computed for dependent processes)lang— The language for the script to run. Should be the path to theinterpreter iflangis not in$PATH.name— The name of the process. Will use the class name by default.nexts— Computed fromrequiresto build the process relationshipsnum_retries— How many times to retry to jobs once error occursorder— The execution order for this process. The bigger the numberis, the later the process will be executed. Default: 0. Note that the dependent processes will always be executed first. This doesn't work for start processes either, whose orders are determined byPipen.set_starts()output— The output keys for the output channel(the data will be computed)output_data— The output data (to pass to the next processes)plugin_opts— Options for process-level pluginsrequires— The dependency processesscheduler— The scheduler to run the jobsscheduler_opts— The options for the schedulerscript— The script template for the processsubmission_batch— How many jobs to be submited simultaneouslytemplate— Define the template engine to use.This could be either a template engine or a dict with keyengineindicating the template engine and the rest the arguments passed to the constructor of thepipen.template.Templateobject. The template engine could be either the name of the engine, currently jinja2 and liquidpy are supported, or a subclass ofpipen.template.Template. You can subclasspipen.template.Templateto use your own template engine.

fmlfile— The formula file. The first column is grouping and thesecond column is the formula. It must be tab-delimited.Group Formula ... # Other columns to be added to outfile G1 Fn ~ F1 + Fx + Fy # Fx, Fy could be covariates G1 Fn ~ F2 + Fx + Fy ... Gk Fn ~ F3 + Fx + Fygroupfile— The group file. The rows are the samples and the columnsare the groupings. It must be tab-delimited.Sample G1 G2 G3 ... Gk S1 0 1 0 0 S2 2 1 0 NA # exclude this sample ... Sm 1 0 0 0infile— The input data file. The rows are samples and the columns arefeatures. It must be tab-delimited.Sample F1 F2 F3 ... Fn S1 1.2 3.4 5.6 7.8 S2 2.3 4.5 6.7 8.9 ... Sm 5.6 7.8 9.0 1.2

outfile— The output file. It is a tab-delimited file with the firstcolumn as the grouping and the second column as the p-value.Group Formula ... Pooled Groups SSR SumSSR Fstat Pval Padj G1 Fn ~ F1 0.123 2 1 0.123 0.123 0.123 0.123 G1 Fn ~ F2 0.123 2 1 0.123 0.123 0.123 0.123 ... Gk Fn ~ F3 0.123 2 1 0.123 0.123 0.123 0.123

padj(choice) — The method for p-value adjustment.- - none: No p-value adjustment (no Padj column in outfile).

- - holm: Holm-Bonferroni method.

- - hochberg: Hochberg method.

- - hommel: Hommel method.

- - bonferroni: Bonferroni method.

- - BH: Benjamini-Hochberg method.

- - BY: Benjamini-Yekutieli method.

- - fdr: FDR correction method.

transpose_group(flag) — Whether to transpose the group file.transpose_input(flag) — Whether to transpose the input file.

__init_subclass__()— Do the requirements inferring since we need them to build up theprocess relationship </>from_proc(proc,name,desc,envs,envs_depth,cache,export,error_strategy,num_retries,forks,input_data,order,plugin_opts,requires,scheduler,scheduler_opts,submission_batch)(Type) — Create a subclass of Proc using another Proc subclass or Proc itself</>gc()— GC process for the process to save memory after it's done</>init()— Init all other properties and jobs</>log(level,msg,*args,logger)— Log message for the process</>run()— Run the process</>

pipen.proc.ProcMeta(name, bases, namespace, **kwargs)

Meta class for Proc

__call__(cls,*args,**kwds)(Proc) — Make sure Proc subclasses are singletons</>__instancecheck__(cls,instance)— Override for isinstance(instance, cls).</>__repr__(cls)(str) — Representation for the Proc subclasses</>__subclasscheck__(cls,subclass)— Override for issubclass(subclass, cls).</>register(cls,subclass)— Register a virtual subclass of an ABC.</>

register(cls, subclass)Register a virtual subclass of an ABC.

Returns the subclass, to allow usage as a class decorator.

__instancecheck__(cls, instance)Override for isinstance(instance, cls).

__subclasscheck__(cls, subclass)Override for issubclass(subclass, cls).

__repr__(cls) → strRepresentation for the Proc subclasses

__call__(cls, *args, **kwds)Make sure Proc subclasses are singletons

*args(Any) — and**kwds(Any) — Arguments for the constructor

The Proc instance

from_proc(proc, name=None, desc=None, envs=None, envs_depth=None, cache=None, export=None, error_strategy=None, num_retries=None, forks=None, input_data=None, order=None, plugin_opts=None, requires=None, scheduler=None, scheduler_opts=None, submission_batch=None)

Create a subclass of Proc using another Proc subclass or Proc itself

proc(Type) — The Proc subclassname(str, optional) — The new name of the processdesc(str, optional) — The new description of the processenvs(Mapping, optional) — The arguments of the process, will overwrite parent oneThe items that are specified will be inheritedenvs_depth(int, optional) — How deep to update the envs when subclassed.cache(bool, optional) — Whether we should check the cache for the jobsexport(bool, optional) — When True, the results will be exported to<pipeline.outdir>Defaults to None, meaning only end processes will export. You can set it to True/False to enable or disable exporting for processeserror_strategy(str, optional) — How to deal with the errors- - retry, ignore, halt

- - halt to halt the whole pipeline, no submitting new jobs

- - terminate to just terminate the job itself

num_retries(int, optional) — How many times to retry to jobs once error occursforks(int, optional) — New forks for the new processinput_data(Any, optional) — The input data for the process. Only when this processis a start processorder(int, optional) — The order to execute the new processplugin_opts(Mapping, optional) — The new plugin options, unspecified items will beinherited.requires(Sequence, optional) — The required processes for the new processscheduler(str, optional) — The new shedular to run the new processscheduler_opts(Mapping, optional) — The new scheduler options, unspecified items willbe inherited.submission_batch(int, optional) — How many jobs to be submited simultaneously

The new process class

__init_subclass__()

Do the requirements inferring since we need them to build up theprocess relationship

init()

Init all other properties and jobs

gc()

GC process for the process to save memory after it's done

log(level, msg, *args, logger=<LoggerAdapter pipen.core (WARNING)>)

Log message for the process

level(int | str) — The log level of the recordmsg(str) — The message to log*args— The arguments to format the messagelogger(LoggerAdapter, optional) — The logging logger

run()

Run the process

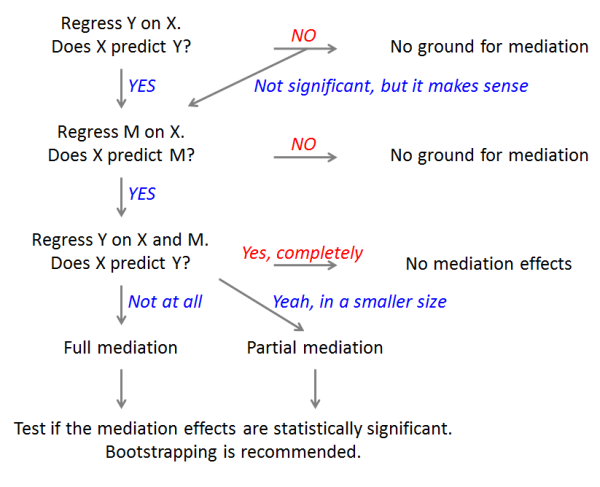

biopipen.ns.stats.Mediation(*args, **kwds) → Proc

Mediation analysis.

The flowchart of mediation analysis:

Reference: - https://library.virginia.edu/data/articles/introduction-to-mediation-analysis - https://en.wikipedia.org/wiki/Mediation_(statistics) - https://tilburgsciencehub.com/topics/analyze/regression/linear-regression/mediation-analysis/ - https://ademos.people.uic.edu/Chapter14.html

cache— Should we detect whether the jobs are cached?desc— The description of the process. Will use the summary fromthe docstring by default.dirsig— When checking the signature for caching, whether should we walkthrough the content of the directory? This is sometimes time-consuming if the directory is big.envs— The arguments that are job-independent, useful for common optionsacross jobs.envs_depth— How deep to update the envs when subclassed.error_strategy— How to deal with the errors- - retry, ignore, halt

- - halt to halt the whole pipeline, no submitting new jobs

- - terminate to just terminate the job itself

export— When True, the results will be exported to<pipeline.outdir>Defaults to None, meaning only end processes will export. You can set it to True/False to enable or disable exporting for processesforks— How many jobs to run simultaneously?input— The keys for the input channelinput_data— The input data (will be computed for dependent processes)lang— The language for the script to run. Should be the path to theinterpreter iflangis not in$PATH.name— The name of the process. Will use the class name by default.nexts— Computed fromrequiresto build the process relationshipsnum_retries— How many times to retry to jobs once error occursorder— The execution order for this process. The bigger the numberis, the later the process will be executed. Default: 0. Note that the dependent processes will always be executed first. This doesn't work for start processes either, whose orders are determined byPipen.set_starts()output— The output keys for the output channel(the data will be computed)output_data— The output data (to pass to the next processes)plugin_opts— Options for process-level pluginsrequires— The dependency processesscheduler— The scheduler to run the jobsscheduler_opts— The options for the schedulerscript— The script template for the processsubmission_batch— How many jobs to be submited simultaneouslytemplate— Define the template engine to use.This could be either a template engine or a dict with keyengineindicating the template engine and the rest the arguments passed to the constructor of thepipen.template.Templateobject. The template engine could be either the name of the engine, currently jinja2 and liquidpy are supported, or a subclass ofpipen.template.Template. You can subclasspipen.template.Templateto use your own template engine.

fmlfile— The formula file.Where Y is the outcome variable, X is the predictor variable, M is the mediator variable, and Case is the case name. Model_M and Model_Y are the models for M and Y, respectively.Case M Y X Cov Model_M Model_Y Case1 F1 F2 F3 F4,F5 glm lm ...envs.caseswill be ignored if this is provided.infile— The input data file. The rows are samples and the columns arefeatures. It must be tab-delimited.Sample F1 F2 F3 ... Fn S1 1.2 3.4 5.6 7.8 S2 2.3 4.5 6.7 8.9 ... Sm 5.6 7.8 9.0 1.2

outfile— The output file.Columns to help understand the results: Total Effect: a total effect of X on Y (without M) (Y ~ X). ADE: A Direct Effect of X on Y after taking into account a mediation effect of M (Y ~ X + M). ACME: The Mediation Effect, the total effect minus the direct effect, which equals to a product of a coefficient of X in the second step and a coefficient of M in the last step. The goal of mediation analysis is to obtain this indirect effect and see if it's statistically significant.

args(ns) — Other arguments passed tomediation::mediatefunction.- -

: More arguments passed to mediation::mediatefunction.

See: https://rdrr.io/cran/mediation/man/mediate.html

- -

cases(type=json) — The cases for mediation analysis.Ignored ifin.fmlfileis provided. A json/dict with case names as keys and values as a dict of M, Y, X, Cov, Model_M, Model_Y. For example:{ "Case1": { "M": "F1", "Y": "F2", "X": "F3", "Cov": "F4,F5", "Model_M": "glm", "Model_Y": "lm" }, ... }ncores(type=int) — Number of cores to use for parallelization for cases.padj(choice) — The method for (ACME) p-value adjustment.- - none: No p-value adjustment (no Padj column in outfile).

- - holm: Holm-Bonferroni method.

- - hochberg: Hochberg method.

- - hommel: Hommel method.

- - bonferroni: Bonferroni method.

- - BH: Benjamini-Hochberg method.

- - BY: Benjamini-Yekutieli method.

- - fdr: FDR correction method.

sims(type=int) — Number of Monte Carlo draws for nonparametric bootstrap or quasi-Bayesian approximation.Will be passed tomediation::mediatefunction.transpose_input(flag) — Whether to transpose the input file.

__init_subclass__()— Do the requirements inferring since we need them to build up theprocess relationship </>from_proc(proc,name,desc,envs,envs_depth,cache,export,error_strategy,num_retries,forks,input_data,order,plugin_opts,requires,scheduler,scheduler_opts,submission_batch)(Type) — Create a subclass of Proc using another Proc subclass or Proc itself</>gc()— GC process for the process to save memory after it's done</>init()— Init all other properties and jobs</>log(level,msg,*args,logger)— Log message for the process</>run()— Run the process</>

pipen.proc.ProcMeta(name, bases, namespace, **kwargs)

Meta class for Proc

__call__(cls,*args,**kwds)(Proc) — Make sure Proc subclasses are singletons</>__instancecheck__(cls,instance)— Override for isinstance(instance, cls).</>__repr__(cls)(str) — Representation for the Proc subclasses</>__subclasscheck__(cls,subclass)— Override for issubclass(subclass, cls).</>register(cls,subclass)— Register a virtual subclass of an ABC.</>

register(cls, subclass)Register a virtual subclass of an ABC.

Returns the subclass, to allow usage as a class decorator.

__instancecheck__(cls, instance)Override for isinstance(instance, cls).

__subclasscheck__(cls, subclass)Override for issubclass(subclass, cls).

__repr__(cls) → strRepresentation for the Proc subclasses

__call__(cls, *args, **kwds)Make sure Proc subclasses are singletons

*args(Any) — and**kwds(Any) — Arguments for the constructor

The Proc instance

from_proc(proc, name=None, desc=None, envs=None, envs_depth=None, cache=None, export=None, error_strategy=None, num_retries=None, forks=None, input_data=None, order=None, plugin_opts=None, requires=None, scheduler=None, scheduler_opts=None, submission_batch=None)

Create a subclass of Proc using another Proc subclass or Proc itself

proc(Type) — The Proc subclassname(str, optional) — The new name of the processdesc(str, optional) — The new description of the processenvs(Mapping, optional) — The arguments of the process, will overwrite parent oneThe items that are specified will be inheritedenvs_depth(int, optional) — How deep to update the envs when subclassed.cache(bool, optional) — Whether we should check the cache for the jobsexport(bool, optional) — When True, the results will be exported to<pipeline.outdir>Defaults to None, meaning only end processes will export. You can set it to True/False to enable or disable exporting for processeserror_strategy(str, optional) — How to deal with the errors- - retry, ignore, halt

- - halt to halt the whole pipeline, no submitting new jobs

- - terminate to just terminate the job itself

num_retries(int, optional) — How many times to retry to jobs once error occursforks(int, optional) — New forks for the new processinput_data(Any, optional) — The input data for the process. Only when this processis a start processorder(int, optional) — The order to execute the new processplugin_opts(Mapping, optional) — The new plugin options, unspecified items will beinherited.requires(Sequence, optional) — The required processes for the new processscheduler(str, optional) — The new shedular to run the new processscheduler_opts(Mapping, optional) — The new scheduler options, unspecified items willbe inherited.submission_batch(int, optional) — How many jobs to be submited simultaneously

The new process class

__init_subclass__()

Do the requirements inferring since we need them to build up theprocess relationship

init()

Init all other properties and jobs

gc()

GC process for the process to save memory after it's done

log(level, msg, *args, logger=<LoggerAdapter pipen.core (WARNING)>)

Log message for the process

level(int | str) — The log level of the recordmsg(str) — The message to log*args— The arguments to format the messagelogger(LoggerAdapter, optional) — The logging logger

run()

Run the process

biopipen.ns.stats.LiquidAssoc(*args, **kwds) → Proc

Liquid association tests.

See Also https://github.com/gundt/fastLiquidAssociation Requires https://github.com/pwwang/fastLiquidAssociation

cache— Should we detect whether the jobs are cached?desc— The description of the process. Will use the summary fromthe docstring by default.dirsig— When checking the signature for caching, whether should we walkthrough the content of the directory? This is sometimes time-consuming if the directory is big.envs— The arguments that are job-independent, useful for common optionsacross jobs.envs_depth— How deep to update the envs when subclassed.error_strategy— How to deal with the errors- - retry, ignore, halt

- - halt to halt the whole pipeline, no submitting new jobs

- - terminate to just terminate the job itself

export— When True, the results will be exported to<pipeline.outdir>Defaults to None, meaning only end processes will export. You can set it to True/False to enable or disable exporting for processesforks— How many jobs to run simultaneously?input— The keys for the input channelinput_data— The input data (will be computed for dependent processes)lang— The language for the script to run. Should be the path to theinterpreter iflangis not in$PATH.name— The name of the process. Will use the class name by default.nexts— Computed fromrequiresto build the process relationshipsnum_retries— How many times to retry to jobs once error occursorder— The execution order for this process. The bigger the numberis, the later the process will be executed. Default: 0. Note that the dependent processes will always be executed first. This doesn't work for start processes either, whose orders are determined byPipen.set_starts()output— The output keys for the output channel(the data will be computed)output_data— The output data (to pass to the next processes)plugin_opts— Options for process-level pluginsrequires— The dependency processesscheduler— The scheduler to run the jobsscheduler_opts— The options for the schedulerscript— The script template for the processsubmission_batch— How many jobs to be submited simultaneouslytemplate— Define the template engine to use.This could be either a template engine or a dict with keyengineindicating the template engine and the rest the arguments passed to the constructor of thepipen.template.Templateobject. The template engine could be either the name of the engine, currently jinja2 and liquidpy are supported, or a subclass ofpipen.template.Template. You can subclasspipen.template.Templateto use your own template engine.

covfile— The covariate file. The rows are the samples and the columnsare the covariates. It must be tab-delimited. If provided, the data inin.infilewill be adjusted by covariates by regressing out the covariates and the residuals will be used for liquid association tests.fmlfile— The formula file. The 3 columns are X3, X12 and X21. The resultswill be filtered based on the formula. It must be tab-delimited without header.groupfile— The group file. The rows are the samples and the columnsare the groupings. It must be tab-delimited.This will be served as the Z column in the result ofSample G1 G2 G3 ... Gk S1 0 1 0 0 S2 2 1 0 NA # exclude this sample ... Sm 1 0 0 0fastMLAThis can be omitted. If so,envs.nvecshould be specified, which is to select column fromin.infileas Z.infile— The input data file. The rows are samples and the columns arefeatures. It must be tab-delimited.The features (columns) will be tested pairwise, which will be the X and Y columns in the result ofSample F1 F2 F3 ... Fn S1 1.2 3.4 5.6 7.8 S2 2.3 4.5 6.7 8.9 ... Sm 5.6 7.8 9.0 1.2fastMLA

outfile— The output file.X12 X21 X3 rhodiff MLA value estimates san.se wald Pval model C38 C46 C5 0.87 0.32 0.67 0.20 10.87 0 F C46 C38 C5 0.87 0.32 0.67 0.20 10.87 0 F C27 C39 C4 0.94 0.34 1.22 0.38 10.03 0 F

cut(type=int) — Value passed to the GLA function to create buckets(equal to number of buckets+1). Values placing between 15-30 samples per bucket are optimal. Must be a positive integer>1. By default,max(ceiling(nrow(data)/22), 4)is used.ncores(type=int) — Number of cores to use for parallelization.nvec— The column index (1-based) of Z inin.infile, ifin.groupfileisomitted. You can specify multiple columns by comma-seperated values, or a range of columns by-. For example,1,3,5-7,9. It also supports column names. For example,F1,F3.-is not supported for column names.padj(choice) — The method for p-value adjustment.- - none: No p-value adjustment (no Padj column in outfile).

- - holm: Holm-Bonferroni method.

- - hochberg: Hochberg method.

- - hommel: Hommel method.

- - bonferroni: Bonferroni method.

- - BH: Benjamini-Hochberg method.

- - BY: Benjamini-Yekutieli method.

- - fdr: FDR correction method.

rvalue(type=float) — Tolerance value for LA approximation. Lower values ofrvalue will cause a more thorough search, but take longer.topn(type=int) — Number of results to return byfastMLA, ordered fromhighest|MLA|value descending. The default of the package is 2000, but here we set to 1e6 to return as many results as possible (also good to do pvalue adjustment).transpose_cov(flag) — Whether to transpose the covariate file.transpose_group(flag) — Whether to transpose the group file.transpose_input(flag) — Whether to transpose the input file.x— Similar asnvec, but limit X group to given features.The rest of features (other than X and Z) inin.infilewill be used as Y. The features inin.infilewill still be tested pairwise, but only features in X and Y will be kept.xyz_names— The names of X12, X21 and X3 in the final output file. Separatedby comma. For example,X12,X21,X3.

__init_subclass__()— Do the requirements inferring since we need them to build up theprocess relationship </>from_proc(proc,name,desc,envs,envs_depth,cache,export,error_strategy,num_retries,forks,input_data,order,plugin_opts,requires,scheduler,scheduler_opts,submission_batch)(Type) — Create a subclass of Proc using another Proc subclass or Proc itself</>gc()— GC process for the process to save memory after it's done</>init()— Init all other properties and jobs</>log(level,msg,*args,logger)— Log message for the process</>run()— Run the process</>

pipen.proc.ProcMeta(name, bases, namespace, **kwargs)

Meta class for Proc

__call__(cls,*args,**kwds)(Proc) — Make sure Proc subclasses are singletons</>__instancecheck__(cls,instance)— Override for isinstance(instance, cls).</>__repr__(cls)(str) — Representation for the Proc subclasses</>__subclasscheck__(cls,subclass)— Override for issubclass(subclass, cls).</>register(cls,subclass)— Register a virtual subclass of an ABC.</>

register(cls, subclass)Register a virtual subclass of an ABC.

Returns the subclass, to allow usage as a class decorator.

__instancecheck__(cls, instance)Override for isinstance(instance, cls).

__subclasscheck__(cls, subclass)Override for issubclass(subclass, cls).

__repr__(cls) → strRepresentation for the Proc subclasses

__call__(cls, *args, **kwds)Make sure Proc subclasses are singletons

*args(Any) — and**kwds(Any) — Arguments for the constructor

The Proc instance

from_proc(proc, name=None, desc=None, envs=None, envs_depth=None, cache=None, export=None, error_strategy=None, num_retries=None, forks=None, input_data=None, order=None, plugin_opts=None, requires=None, scheduler=None, scheduler_opts=None, submission_batch=None)

Create a subclass of Proc using another Proc subclass or Proc itself

proc(Type) — The Proc subclassname(str, optional) — The new name of the processdesc(str, optional) — The new description of the processenvs(Mapping, optional) — The arguments of the process, will overwrite parent oneThe items that are specified will be inheritedenvs_depth(int, optional) — How deep to update the envs when subclassed.cache(bool, optional) — Whether we should check the cache for the jobsexport(bool, optional) — When True, the results will be exported to<pipeline.outdir>Defaults to None, meaning only end processes will export. You can set it to True/False to enable or disable exporting for processeserror_strategy(str, optional) — How to deal with the errors- - retry, ignore, halt

- - halt to halt the whole pipeline, no submitting new jobs

- - terminate to just terminate the job itself

num_retries(int, optional) — How many times to retry to jobs once error occursforks(int, optional) — New forks for the new processinput_data(Any, optional) — The input data for the process. Only when this processis a start processorder(int, optional) — The order to execute the new processplugin_opts(Mapping, optional) — The new plugin options, unspecified items will beinherited.requires(Sequence, optional) — The required processes for the new processscheduler(str, optional) — The new shedular to run the new processscheduler_opts(Mapping, optional) — The new scheduler options, unspecified items willbe inherited.submission_batch(int, optional) — How many jobs to be submited simultaneously

The new process class

__init_subclass__()

Do the requirements inferring since we need them to build up theprocess relationship

init()

Init all other properties and jobs

gc()

GC process for the process to save memory after it's done

log(level, msg, *args, logger=<LoggerAdapter pipen.core (WARNING)>)

Log message for the process

level(int | str) — The log level of the recordmsg(str) — The message to log*args— The arguments to format the messagelogger(LoggerAdapter, optional) — The logging logger

run()

Run the process

biopipen.ns.stats.DiffCoexpr(*args, **kwds) → Proc

Differential co-expression analysis.

See also https://bmcbioinformatics.biomedcentral.com/articles/10.1186/1471-2105-11-497 and https://github.com/DavisLaboratory/dcanr/blob/8958d61788937eef3b7e2b4118651cbd7af7469d/R/inference_methods.R#L199.

cache— Should we detect whether the jobs are cached?desc— The description of the process. Will use the summary fromthe docstring by default.dirsig— When checking the signature for caching, whether should we walkthrough the content of the directory? This is sometimes time-consuming if the directory is big.envs— The arguments that are job-independent, useful for common optionsacross jobs.envs_depth— How deep to update the envs when subclassed.error_strategy— How to deal with the errors- - retry, ignore, halt

- - halt to halt the whole pipeline, no submitting new jobs

- - terminate to just terminate the job itself

export— When True, the results will be exported to<pipeline.outdir>Defaults to None, meaning only end processes will export. You can set it to True/False to enable or disable exporting for processesforks— How many jobs to run simultaneously?input— The keys for the input channelinput_data— The input data (will be computed for dependent processes)lang— The language for the script to run. Should be the path to theinterpreter iflangis not in$PATH.name— The name of the process. Will use the class name by default.nexts— Computed fromrequiresto build the process relationshipsnum_retries— How many times to retry to jobs once error occursorder— The execution order for this process. The bigger the numberis, the later the process will be executed. Default: 0. Note that the dependent processes will always be executed first. This doesn't work for start processes either, whose orders are determined byPipen.set_starts()output— The output keys for the output channel(the data will be computed)output_data— The output data (to pass to the next processes)plugin_opts— Options for process-level pluginsrequires— The dependency processesscheduler— The scheduler to run the jobsscheduler_opts— The options for the schedulerscript— The script template for the processsubmission_batch— How many jobs to be submited simultaneouslytemplate— Define the template engine to use.This could be either a template engine or a dict with keyengineindicating the template engine and the rest the arguments passed to the constructor of thepipen.template.Templateobject. The template engine could be either the name of the engine, currently jinja2 and liquidpy are supported, or a subclass ofpipen.template.Template. You can subclasspipen.template.Templateto use your own template engine.

groupfile— The group file. The rows are the samples and the columnsare the groupings. It must be tab-delimited.Sample G1 G2 G3 ... Gk S1 0 1 0 0 S2 2 1 0 NA # exclude this sample ... Sm 1 0 0 0infile— The input data file. The rows are samples and the columns arefeatures. It must be tab-delimited.Sample F1 F2 F3 ... Fn S1 1.2 3.4 5.6 7.8 S2 2.3 4.5 6.7 8.9 ... Sm 5.6 7.8 9.0 1.2

outfile— The output file. It is a tab-delimited file with the firstcolumn as the feature pair and the second column as the p-value.Group Feature1 Feature2 Pval Padj G1 F1 F2 0.123 0.123 G1 F1 F3 0.123 0.123 ...

beta— The beta value for the differential co-expression analysis.method(choice) — The method used to calculate the differentialco-expression.- - pearson: Pearson correlation.

- - spearman: Spearman correlation.

ncores(type=int) — The number of cores to use for parallelizationpadj(choice) — The method for p-value adjustment.- - none: No p-value adjustment (no Padj column in outfile).

- - holm: Holm-Bonferroni method.

- - hochberg: Hochberg method.

- - hommel: Hommel method.

- - bonferroni: Bonferroni method.

- - BH: Benjamini-Hochberg method.

- - BY: Benjamini-Yekutieli method.

- - fdr: FDR correction method.

perm_batch(type=int) — The number of permutations to run in each batchseed(type=int) — The seed for random number generationtranspose_group(flag) — Whether to transpose the group file.transpose_input(flag) — Whether to transpose the input file.

__init_subclass__()— Do the requirements inferring since we need them to build up theprocess relationship </>from_proc(proc,name,desc,envs,envs_depth,cache,export,error_strategy,num_retries,forks,input_data,order,plugin_opts,requires,scheduler,scheduler_opts,submission_batch)(Type) — Create a subclass of Proc using another Proc subclass or Proc itself</>gc()— GC process for the process to save memory after it's done</>init()— Init all other properties and jobs</>log(level,msg,*args,logger)— Log message for the process</>run()— Run the process</>

pipen.proc.ProcMeta(name, bases, namespace, **kwargs)

Meta class for Proc

__call__(cls,*args,**kwds)(Proc) — Make sure Proc subclasses are singletons</>__instancecheck__(cls,instance)— Override for isinstance(instance, cls).</>__repr__(cls)(str) — Representation for the Proc subclasses</>__subclasscheck__(cls,subclass)— Override for issubclass(subclass, cls).</>register(cls,subclass)— Register a virtual subclass of an ABC.</>

register(cls, subclass)Register a virtual subclass of an ABC.

Returns the subclass, to allow usage as a class decorator.

__instancecheck__(cls, instance)Override for isinstance(instance, cls).

__subclasscheck__(cls, subclass)Override for issubclass(subclass, cls).

__repr__(cls) → strRepresentation for the Proc subclasses

__call__(cls, *args, **kwds)Make sure Proc subclasses are singletons

*args(Any) — and**kwds(Any) — Arguments for the constructor

The Proc instance

from_proc(proc, name=None, desc=None, envs=None, envs_depth=None, cache=None, export=None, error_strategy=None, num_retries=None, forks=None, input_data=None, order=None, plugin_opts=None, requires=None, scheduler=None, scheduler_opts=None, submission_batch=None)

Create a subclass of Proc using another Proc subclass or Proc itself

proc(Type) — The Proc subclassname(str, optional) — The new name of the processdesc(str, optional) — The new description of the processenvs(Mapping, optional) — The arguments of the process, will overwrite parent oneThe items that are specified will be inheritedenvs_depth(int, optional) — How deep to update the envs when subclassed.cache(bool, optional) — Whether we should check the cache for the jobsexport(bool, optional) — When True, the results will be exported to<pipeline.outdir>Defaults to None, meaning only end processes will export. You can set it to True/False to enable or disable exporting for processeserror_strategy(str, optional) — How to deal with the errors- - retry, ignore, halt

- - halt to halt the whole pipeline, no submitting new jobs

- - terminate to just terminate the job itself

num_retries(int, optional) — How many times to retry to jobs once error occursforks(int, optional) — New forks for the new processinput_data(Any, optional) — The input data for the process. Only when this processis a start processorder(int, optional) — The order to execute the new processplugin_opts(Mapping, optional) — The new plugin options, unspecified items will beinherited.requires(Sequence, optional) — The required processes for the new processscheduler(str, optional) — The new shedular to run the new processscheduler_opts(Mapping, optional) — The new scheduler options, unspecified items willbe inherited.submission_batch(int, optional) — How many jobs to be submited simultaneously

The new process class

__init_subclass__()

Do the requirements inferring since we need them to build up theprocess relationship

init()

Init all other properties and jobs

gc()

GC process for the process to save memory after it's done

log(level, msg, *args, logger=<LoggerAdapter pipen.core (WARNING)>)

Log message for the process

level(int | str) — The log level of the recordmsg(str) — The message to log*args— The arguments to format the messagelogger(LoggerAdapter, optional) — The logging logger

run()

Run the process

biopipen.ns.stats.MetaPvalue(*args, **kwds) → Proc

Calulation of meta p-values.

If there is only one input file, only the p-value adjustment will be performed.

cache— Should we detect whether the jobs are cached?desc— The description of the process. Will use the summary fromthe docstring by default.dirsig— When checking the signature for caching, whether should we walkthrough the content of the directory? This is sometimes time-consuming if the directory is big.envs— The arguments that are job-independent, useful for common optionsacross jobs.envs_depth— How deep to update the envs when subclassed.error_strategy— How to deal with the errors- - retry, ignore, halt

- - halt to halt the whole pipeline, no submitting new jobs

- - terminate to just terminate the job itself

export— When True, the results will be exported to<pipeline.outdir>Defaults to None, meaning only end processes will export. You can set it to True/False to enable or disable exporting for processesforks— How many jobs to run simultaneously?input— The keys for the input channelinput_data— The input data (will be computed for dependent processes)lang— The language for the script to run. Should be the path to theinterpreter iflangis not in$PATH.name— The name of the process. Will use the class name by default.nexts— Computed fromrequiresto build the process relationshipsnum_retries— How many times to retry to jobs once error occursorder— The execution order for this process. The bigger the numberis, the later the process will be executed. Default: 0. Note that the dependent processes will always be executed first. This doesn't work for start processes either, whose orders are determined byPipen.set_starts()output— The output keys for the output channel(the data will be computed)output_data— The output data (to pass to the next processes)plugin_opts— Options for process-level pluginsrequires— The dependency processesscheduler— The scheduler to run the jobsscheduler_opts— The options for the schedulerscript— The script template for the processsubmission_batch— How many jobs to be submited simultaneouslytemplate— Define the template engine to use.This could be either a template engine or a dict with keyengineindicating the template engine and the rest the arguments passed to the constructor of thepipen.template.Templateobject. The template engine could be either the name of the engine, currently jinja2 and liquidpy are supported, or a subclass ofpipen.template.Template. You can subclasspipen.template.Templateto use your own template engine.

infiles— The input files. Each file is a tab-delimited file with multiplecolumns. There should be ID column(s) to match the rows in other files and p-value column(s) to be combined. The records will be full-joined by ID. When only one file is provided, only the pvalue adjustment will be performed whenenvs.padjis notnone, otherwise the input file will be copied toout.outfile.

outfile— The output file. It is a tab-delimited file with the first column asthe ID and the second column as the combined p-value.ID ID1 ... Pval Padj a x ... 0.123 0.123 b y ... 0.123 0.123 ...

id_cols— The column names used in allin.infilesas ID columns. Multiplecolumns can be specified by comma-seperated values. For example,ID1,ID2, whereID1is the ID column in the first file andID2is the ID column in the second file. Ifid_exprsis specified, this should be a single column name for the new ID column in eachin.infilesand the finalout.outfile.id_exprs— The R expressions for eachin.infilesto get ID column(s).keep_single(flag) — Whether to keep the original p-value when there is only onep-value.method(choice) — The method used to calculate the meta-pvalue.- - fisher: Fisher's method.

- - sumlog: Sum of logarithms (same as Fisher's method)

- - logitp: Logit method.

- - sumz: Sum of z method (Stouffer's method).

- - meanz: Mean of z method.

- - meanp: Mean of p method.

- - invt: Inverse t method.

- - sump: Sum of p method (Edgington's method).

- - votep: Vote counting method.

- - wilkinsonp: Wilkinson's method.

- - invchisq: Inverse chi-square method.

na— The method to handle NA values. -1 to skip the record. Otherwise NAwill be replaced by the given value.padj(choice) — The method for p-value adjustment.- - none: No p-value adjustment (no Padj column in outfile).

- - holm: Holm-Bonferroni method.

- - hochberg: Hochberg method.

- - hommel: Hommel method.

- - bonferroni: Bonferroni method.

- - BH: Benjamini-Hochberg method.

- - BY: Benjamini-Yekutieli method.

- - fdr: FDR correction method.

pval_cols— The column names used in allin.infilesas p-value columns.Different columns can be specified by comma-seperated values for eachin.infiles. For example,Pval1,Pval2.

__init_subclass__()— Do the requirements inferring since we need them to build up theprocess relationship </>from_proc(proc,name,desc,envs,envs_depth,cache,export,error_strategy,num_retries,forks,input_data,order,plugin_opts,requires,scheduler,scheduler_opts,submission_batch)(Type) — Create a subclass of Proc using another Proc subclass or Proc itself</>gc()— GC process for the process to save memory after it's done</>init()— Init all other properties and jobs</>log(level,msg,*args,logger)— Log message for the process</>run()— Run the process</>

pipen.proc.ProcMeta(name, bases, namespace, **kwargs)

Meta class for Proc

__call__(cls,*args,**kwds)(Proc) — Make sure Proc subclasses are singletons</>__instancecheck__(cls,instance)— Override for isinstance(instance, cls).</>__repr__(cls)(str) — Representation for the Proc subclasses</>__subclasscheck__(cls,subclass)— Override for issubclass(subclass, cls).</>register(cls,subclass)— Register a virtual subclass of an ABC.</>

register(cls, subclass)Register a virtual subclass of an ABC.

Returns the subclass, to allow usage as a class decorator.

__instancecheck__(cls, instance)Override for isinstance(instance, cls).

__subclasscheck__(cls, subclass)Override for issubclass(subclass, cls).

__repr__(cls) → strRepresentation for the Proc subclasses

__call__(cls, *args, **kwds)Make sure Proc subclasses are singletons

*args(Any) — and**kwds(Any) — Arguments for the constructor

The Proc instance

from_proc(proc, name=None, desc=None, envs=None, envs_depth=None, cache=None, export=None, error_strategy=None, num_retries=None, forks=None, input_data=None, order=None, plugin_opts=None, requires=None, scheduler=None, scheduler_opts=None, submission_batch=None)

Create a subclass of Proc using another Proc subclass or Proc itself

proc(Type) — The Proc subclassname(str, optional) — The new name of the processdesc(str, optional) — The new description of the processenvs(Mapping, optional) — The arguments of the process, will overwrite parent oneThe items that are specified will be inheritedenvs_depth(int, optional) — How deep to update the envs when subclassed.cache(bool, optional) — Whether we should check the cache for the jobsexport(bool, optional) — When True, the results will be exported to<pipeline.outdir>Defaults to None, meaning only end processes will export. You can set it to True/False to enable or disable exporting for processeserror_strategy(str, optional) — How to deal with the errors- - retry, ignore, halt

- - halt to halt the whole pipeline, no submitting new jobs

- - terminate to just terminate the job itself

num_retries(int, optional) — How many times to retry to jobs once error occursforks(int, optional) — New forks for the new processinput_data(Any, optional) — The input data for the process. Only when this processis a start processorder(int, optional) — The order to execute the new processplugin_opts(Mapping, optional) — The new plugin options, unspecified items will beinherited.requires(Sequence, optional) — The required processes for the new processscheduler(str, optional) — The new shedular to run the new processscheduler_opts(Mapping, optional) — The new scheduler options, unspecified items willbe inherited.submission_batch(int, optional) — How many jobs to be submited simultaneously

The new process class

__init_subclass__()

Do the requirements inferring since we need them to build up theprocess relationship

init()

Init all other properties and jobs

gc()

GC process for the process to save memory after it's done

log(level, msg, *args, logger=<LoggerAdapter pipen.core (WARNING)>)

Log message for the process

level(int | str) — The log level of the recordmsg(str) — The message to log*args— The arguments to format the messagelogger(LoggerAdapter, optional) — The logging logger

run()

Run the process

biopipen.ns.stats.MetaPvalue1(*args, **kwds) → Proc

Calulation of meta p-values.

Unlike MetaPvalue, this process only accepts one input file.

The p-values will be grouped by the ID columns and combined by the selected method.

cache— Should we detect whether the jobs are cached?desc— The description of the process. Will use the summary fromthe docstring by default.dirsig— When checking the signature for caching, whether should we walkthrough the content of the directory? This is sometimes time-consuming if the directory is big.envs— The arguments that are job-independent, useful for common optionsacross jobs.envs_depth— How deep to update the envs when subclassed.error_strategy— How to deal with the errors- - retry, ignore, halt

- - halt to halt the whole pipeline, no submitting new jobs

- - terminate to just terminate the job itself

export— When True, the results will be exported to<pipeline.outdir>Defaults to None, meaning only end processes will export. You can set it to True/False to enable or disable exporting for processesforks— How many jobs to run simultaneously?input— The keys for the input channelinput_data— The input data (will be computed for dependent processes)lang— The language for the script to run. Should be the path to theinterpreter iflangis not in$PATH.name— The name of the process. Will use the class name by default.nexts— Computed fromrequiresto build the process relationshipsnum_retries— How many times to retry to jobs once error occursorder— The execution order for this process. The bigger the numberis, the later the process will be executed. Default: 0. Note that the dependent processes will always be executed first. This doesn't work for start processes either, whose orders are determined byPipen.set_starts()output— The output keys for the output channel(the data will be computed)output_data— The output data (to pass to the next processes)plugin_opts— Options for process-level pluginsrequires— The dependency processesscheduler— The scheduler to run the jobsscheduler_opts— The options for the schedulerscript— The script template for the processsubmission_batch— How many jobs to be submited simultaneouslytemplate— Define the template engine to use.This could be either a template engine or a dict with keyengineindicating the template engine and the rest the arguments passed to the constructor of thepipen.template.Templateobject. The template engine could be either the name of the engine, currently jinja2 and liquidpy are supported, or a subclass ofpipen.template.Template. You can subclasspipen.template.Templateto use your own template engine.

infile— The input file.The file is a tab-delimited file with multiple columns. There should be ID column(s) to group the rows where p-value column(s) to be combined.

outfile— The output file. It is a tab-delimited file with the first column asthe ID and the second column as the combined p-value.ID ID1 ... Pval Padj a x ... 0.123 0.123 b y ... 0.123 0.123 ...

id_cols— The column names used inin.infileas ID columns. Multiplecolumns can be specified by comma-seperated values. For example,ID1,ID2.keep_single(flag) — Whether to keep the original p-value when there is only onep-value.method(choice) — The method used to calculate the meta-pvalue.- - fisher: Fisher's method.

- - sumlog: Sum of logarithms (same as Fisher's method)

- - logitp: Logit method.

- - sumz: Sum of z method (Stouffer's method).

- - meanz: Mean of z method.

- - meanp: Mean of p method.

- - invt: Inverse t method.

- - sump: Sum of p method (Edgington's method).

- - votep: Vote counting method.

- - wilkinsonp: Wilkinson's method.

- - invchisq: Inverse chi-square method.

na— The method to handle NA values. -1 to skip the record. Otherwise NAwill be replaced by the given value.padj(choice) — The method for p-value adjustment.- - none: No p-value adjustment (no Padj column in outfile).

- - holm: Holm-Bonferroni method.

- - hochberg: Hochberg method.

- - hommel: Hommel method.

- - bonferroni: Bonferroni method.

- - BH: Benjamini-Hochberg method.

- - BY: Benjamini-Yekutieli method.

- - fdr: FDR correction method.

pval_col— The column name used inin.infileas p-value column.

__init_subclass__()— Do the requirements inferring since we need them to build up theprocess relationship </>from_proc(proc,name,desc,envs,envs_depth,cache,export,error_strategy,num_retries,forks,input_data,order,plugin_opts,requires,scheduler,scheduler_opts,submission_batch)(Type) — Create a subclass of Proc using another Proc subclass or Proc itself</>gc()— GC process for the process to save memory after it's done</>init()— Init all other properties and jobs</>log(level,msg,*args,logger)— Log message for the process</>run()— Run the process</>

pipen.proc.ProcMeta(name, bases, namespace, **kwargs)

Meta class for Proc

__call__(cls,*args,**kwds)(Proc) — Make sure Proc subclasses are singletons</>__instancecheck__(cls,instance)— Override for isinstance(instance, cls).</>__repr__(cls)(str) — Representation for the Proc subclasses</>__subclasscheck__(cls,subclass)— Override for issubclass(subclass, cls).</>register(cls,subclass)— Register a virtual subclass of an ABC.</>

register(cls, subclass)Register a virtual subclass of an ABC.

Returns the subclass, to allow usage as a class decorator.

__instancecheck__(cls, instance)Override for isinstance(instance, cls).

__subclasscheck__(cls, subclass)Override for issubclass(subclass, cls).

__repr__(cls) → strRepresentation for the Proc subclasses

__call__(cls, *args, **kwds)Make sure Proc subclasses are singletons

*args(Any) — and**kwds(Any) — Arguments for the constructor

The Proc instance

from_proc(proc, name=None, desc=None, envs=None, envs_depth=None, cache=None, export=None, error_strategy=None, num_retries=None, forks=None, input_data=None, order=None, plugin_opts=None, requires=None, scheduler=None, scheduler_opts=None, submission_batch=None)

Create a subclass of Proc using another Proc subclass or Proc itself

proc(Type) — The Proc subclassname(str, optional) — The new name of the processdesc(str, optional) — The new description of the processenvs(Mapping, optional) — The arguments of the process, will overwrite parent oneThe items that are specified will be inheritedenvs_depth(int, optional) — How deep to update the envs when subclassed.cache(bool, optional) — Whether we should check the cache for the jobsexport(bool, optional) — When True, the results will be exported to<pipeline.outdir>Defaults to None, meaning only end processes will export. You can set it to True/False to enable or disable exporting for processeserror_strategy(str, optional) — How to deal with the errors- - retry, ignore, halt

- - halt to halt the whole pipeline, no submitting new jobs

- - terminate to just terminate the job itself

num_retries(int, optional) — How many times to retry to jobs once error occursforks(int, optional) — New forks for the new processinput_data(Any, optional) — The input data for the process. Only when this processis a start processorder(int, optional) — The order to execute the new processplugin_opts(Mapping, optional) — The new plugin options, unspecified items will beinherited.requires(Sequence, optional) — The required processes for the new processscheduler(str, optional) — The new shedular to run the new processscheduler_opts(Mapping, optional) — The new scheduler options, unspecified items willbe inherited.submission_batch(int, optional) — How many jobs to be submited simultaneously

The new process class

__init_subclass__()

Do the requirements inferring since we need them to build up theprocess relationship

init()

Init all other properties and jobs

gc()

GC process for the process to save memory after it's done

log(level, msg, *args, logger=<LoggerAdapter pipen.core (WARNING)>)

Log message for the process

level(int | str) — The log level of the recordmsg(str) — The message to log*args— The arguments to format the messagelogger(LoggerAdapter, optional) — The logging logger

run()

Run the process